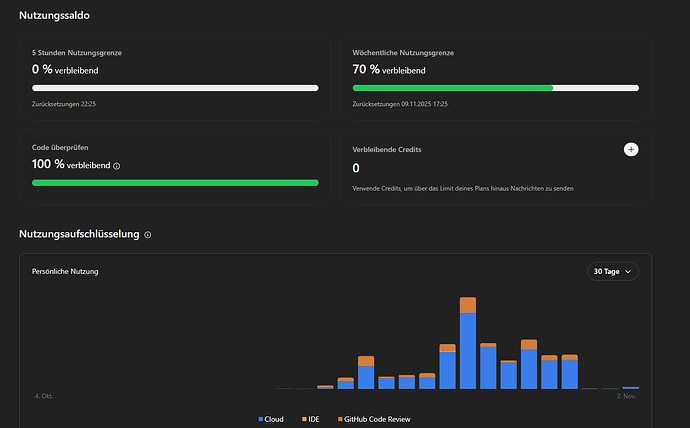

I wanted to add some data on how the current Codex Cloud quotas behave in practice and why the pricing tiers need to evolve alongside the product’s new capabilities.

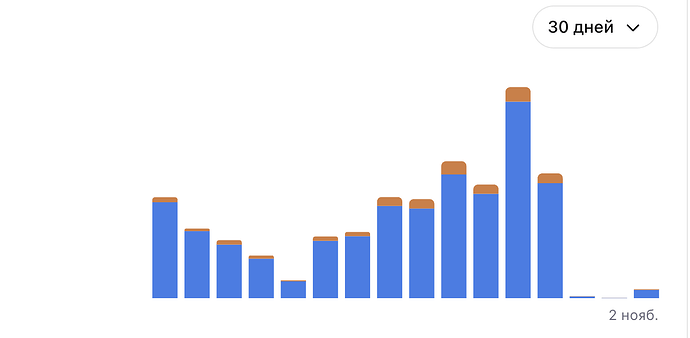

The screenshot shows my actual usage curve. The steep drop-offs aren’t downtime; they’re throttling events.

A single Codex debug session (one prompt, one PR, and one GitHub connect review that ended in a thumbs up) consumed roughly 20% of my weekly quota.

That one prompt took 68 minutes to process, during which Codex:

- Spun up the sandbox
- Resolved 8 failing tests
- Traced dependencies
- Patched, linted and type-checked
- Re-validated the pipeline with pytest
- Produced a passing PR with full test confirmation

That one reasoning chain triggered ~15–20 individual “tasks”, roughly 250–300 credits. Every pytest, ruff, mypy or git command counted as its own billable action, even though they were all part of one continuous engineering task.
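To put a rough number on that, here is a minimal sketch of the per-task cost implied by the figures above. It assumes the credits were spread evenly across the tasks, which I can't confirm from the usage page; the function name is just illustrative.

```python
# Figures quoted above; the even spread across tasks is an assumption.
TASKS = (15, 20)       # billable "tasks" triggered by the one session (low, high)
CREDITS = (250, 300)   # total credits consumed by that session (low, high)


def credits_per_task_range(tasks, credits):
    """Return the (lowest, highest) average credits per task."""
    lowest = credits[0] / tasks[1]   # fewest credits over the most tasks
    highest = credits[1] / tasks[0]  # most credits over the fewest tasks
    return lowest, highest


low, high = credits_per_task_range(TASKS, CREDITS)
print(f"{low:.1f} to {high:.1f} credits per task")
```

So each pytest, ruff, mypy or git call would average somewhere in the low-to-high teens of credits under that assumption.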

Here’s where the misalignment becomes obvious:

| Plan | Typical Weekly Allowance | Cost (USD) | Realistic Capacity | Outcome |
| --- | --- | --- | --- | --- |
| Plus | ~500–700 credits | $20 | 2–3 deep sessions | Throttled within hours |
| Pro | ~2,000–2,500 credits | $200 | 7–10 deep sessions | Still capped mid-week |
| What Codex Enables | Continuous reasoning | N/A | Dozens of multi-hour runs | Not yet supported |
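The "Realistic Capacity" column follows from simple division. Here is a rough sketch, assuming a deep session costs the 250–300 credits I measured for my own run and that the weekly allowances are the approximate ranges in the table; neither number is an official figure.

```python
# Approximate weekly allowances from the table (credits, low to high).
PLANS = {"Plus": (500, 700), "Pro": (2000, 2500)}


def deep_sessions(weekly_credits, session_credits=(250, 300)):
    """Return the (fewest, most) deep sessions a weekly allowance covers."""
    fewest = weekly_credits[0] / session_credits[1]  # small allowance, costly sessions
    most = weekly_credits[1] / session_credits[0]    # large allowance, cheap sessions
    return fewest, most


for plan, allowance in PLANS.items():
    fewest, most = deep_sessions(allowance)
    print(f"{plan}: {fewest:.1f} to {most:.1f} deep sessions per week")
```

Rounded, that lands on the 2–3 and 7–10 sessions shown in the table.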

Codex Cloud now operates as an autonomous engineering agent, not a chatbot.

It plans, executes, validates, and delivers. Yet the current billing model still assumes short, conversational bursts.

We’ve been encouraged, quite enthusiastically, to explore this new paradigm and to push Codex to its limits. But when we do, we end up constrained by the very usage model that the product itself has outgrown.

What’s needed is a shift from per-task billing to a clear, tiered pricing structure that reflects how people actually work with Codex:

- Casual – short edits, quick fixes, or one-off assistance
- Standard – daily light development and refactoring
- Performance – continuous build/test and integration cycles
- Power / Enterprise – full agentic pipelines, orchestration, and long-form reasoning

Codex is ready for full-time engineering workloads. The pricing model just needs to evolve to match the reality it created.

I’d happily pay for that kind of clarity.

EDIT: Looking through everyone’s usage charts in this thread, it’s clear there are two different patterns emerging. Most show short conversational bursts, with Codex being used as an assistant. Mine (and some others) show sustained, continuous reasoning, with Codex being used as an autonomous engineer.

Same tool, completely different usage physics.

The first fits the current quota model (even if limits are off balance). The second doesn’t.