The Way to Own HealthGPTs: Education, Research, and Support with Human-in-the-Loop

What do we mean by a healthcare dev team built on evidence and collaboration?

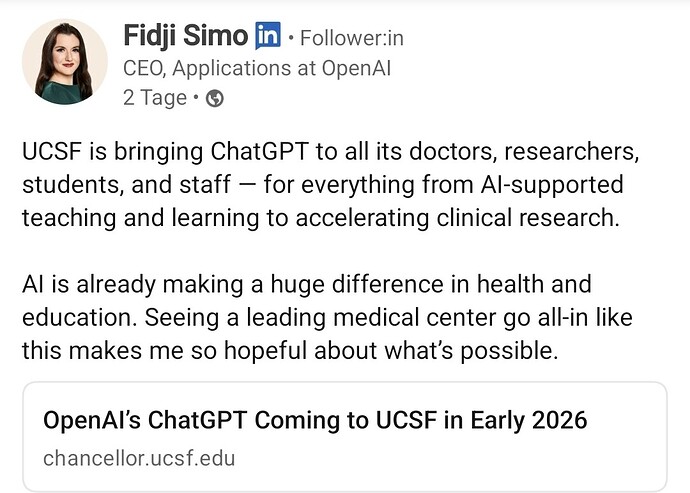

Context: UCSF is going all-in on ChatGPT (early 2026). Why this matters for builders here.

UCSF announced it will roll out ChatGPT Enterprise, with enterprise privacy and security controls, to its doctors, researchers, students, and staff in early 2026 across web, iOS, and Android. The rollout replaces Versa Chat and migrates ~9,000 users.

OpenAI leadership amplified the news publicly.

This validates the approach we’re organizing in this thread: build HealthGPTs with intended-use clarity, evidence, governance, and human-in-the-loop (HITL) overrides, moving from prototypes → IRB pilots → regulated products.

Build safe, effective clinical AI with evidence, governance and interoperable code.

Scope: Prototypes → pilots → regulated products.

Non-negotiables: No patient-identifiable data; no clinical claims without evidence; respect regional law.

Tag every thread:

Family = [LLM | GPT-based App | GenAI | Agency] · Evidence = E0–E5 · Governance = G0–G4 · Region = [NA | SA | EU | KSA | JP | AUS]
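Because the tags use a fixed vocabulary, they can be checked before a thread is posted. Below is a minimal Python sketch that assumes tags are written as `Key = Value` pairs separated by `·`, exactly as in the line above; the function `parse_tags` is hypothetical and not part of any existing forum tooling.

```python
import re

# Allowed values, copied from the tagging scheme above.
FAMILIES = {"LLM", "GPT-based App", "GenAI", "Agency"}
REGIONS = {"NA", "SA", "EU", "KSA", "JP", "AUS"}
EVIDENCE_RE = re.compile(r"E[0-5]")    # evidence levels E0..E5
GOVERNANCE_RE = re.compile(r"G[0-4]")  # governance levels G0..G4
REQUIRED = {"Family", "Evidence", "Governance", "Region"}


def parse_tags(line: str) -> dict[str, str]:
    """Parse a 'Family = X · Evidence = E2 · ...' line and validate every value (hypothetical helper)."""
    tags = {}
    for part in line.split("·"):
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed tag: {part.strip()!r}")
        tags[key.strip()] = value.strip()

    missing = REQUIRED - set(tags)
    if missing:
        raise ValueError(f"missing tags: {sorted(missing)}")
    if tags["Family"] not in FAMILIES:
        raise ValueError(f"unknown family: {tags['Family']}")
    if not EVIDENCE_RE.fullmatch(tags["Evidence"]):
        raise ValueError(f"evidence must be E0-E5, got {tags['Evidence']}")
    if not GOVERNANCE_RE.fullmatch(tags["Governance"]):
        raise ValueError(f"governance must be G0-G4, got {tags['Governance']}")
    if tags["Region"] not in REGIONS:
        raise ValueError(f"unknown region: {tags['Region']}")
    return tags


print(parse_tags("Family = LLM · Evidence = E2 · Governance = G1 · Region = EU"))
```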

Lifecycle:

Idea → Research → Medical Guidelines → DPIA & Patient Rights → Big Data & APIs (ORCID for provenance) → Training → Clinic/Practice Test (IRB) → Controlling → Publishing → Post-market & Updates.
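One way to make the lifecycle enforceable in project tooling is to model it as an ordered series of stage gates. The sketch below assumes a strictly forward, one-step-at-a-time progression; the enum member names are hypothetical labels for the stages listed above.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Lifecycle stages from this thread, in order (member names are hypothetical)."""
    IDEA = 1
    RESEARCH = 2
    MEDICAL_GUIDELINES = 3
    DPIA_AND_PATIENT_RIGHTS = 4
    DATA_AND_APIS = 5
    TRAINING = 6
    CLINIC_TEST_IRB = 7
    CONTROLLING = 8
    PUBLISHING = 9
    POST_MARKET_UPDATES = 10


def advance(current: Stage, target: Stage) -> Stage:
    """Move a project forward exactly one stage; any attempt to skip a gate is refused."""
    if target != current + 1:
        raise ValueError(
            f"cannot go from {current.name} to {target.name}: stages must not be skipped"
        )
    return target


stage = Stage.IDEA
stage = advance(stage, Stage.RESEARCH)
print(stage.name)  # RESEARCH
```

In practice, Post-market & Updates usually feeds back into Training, so a real workflow would also need an explicit, logged path backwards; that is left out here to keep the gate logic visible.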

House Rules (must follow)

1. No PHI/PII. Use de-identified or synthetic data only.

2. Regulatory truth-in-labeling. Tag your thread’s stage as one of: Idea; Prototype (not for clinical use); IRB Pilot; Regulatory-cleared/approved.

3. Intended Use first. One sentence: user · condition · decision · setting.

4. Evidence or it didn’t happen. Datasets, external validation, confidence intervals, and documented failure modes are required for E2 and above.

5. Human-in-the-Loop. Define who can override and the alert workflow.

6. Security. Prefer on-prem/VPC; include diagrams; log/audit every inference used in care.

7. Fairness. Provide subgroup metrics and mitigation strategies.

8. Change Control. Maintain a model registry, a rollback plan, and drift monitoring, in the style of an FDA Predetermined Change Control Plan (PCCP) or the EU AI Act.

9. No promo spam. Disclose funding and conflicts of interest.

10. Follow local law & platform policy. Posts risking patient harm will be removed.
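For rule 4, a percentile bootstrap is one simple, widely used way to attach a confidence interval to almost any validation metric. This sketch uses only the Python standard library; `bootstrap_ci` and the accuracy metric are illustrative choices, and the labels are synthetic.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of cases where the prediction equals the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric, n_boot=2000, alpha=0.05, seed=0):
    """Point estimate plus a percentile-bootstrap (1 - alpha) confidence interval."""
    rng = random.Random(seed)  # fixed seed so the reported interval is reproducible
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return metric(y_true, y_pred), (lower, upper)


# Synthetic labels only (house rule 1): 100 cases, the first 10 predictions flipped.
y_true = [1, 0] * 50
y_pred = [1 - t if i < 10 else t for i, t in enumerate(y_true)]
point, (lower, upper) = bootstrap_ci(y_true, y_pred, accuracy)
print(f"accuracy {point:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

If a dataset holds several records per patient, resample patients rather than records; otherwise the interval comes out too narrow.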
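Rules 5 and 6 can share one mechanism: an append-only log in which every inference is an event and every human override is a later event pointing back at it, so the original model output is never overwritten. The roles in `OVERRIDE_ROLES`, the class `AuditLog`, and the event fields are hypothetical; hashing the input keeps raw text out of the log but is not a de-identification method.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical roles allowed to override model output; rule 5 asks each project to define its own.
OVERRIDE_ROLES = {"attending_physician", "pharmacist"}


class AuditLog:
    """Append-only event log: overrides are new events, never edits of earlier ones."""

    def __init__(self):
        self._events = []

    def _append(self, event: dict) -> int:
        event["ts"] = datetime.now(timezone.utc).isoformat()
        self._events.append(event)
        return len(self._events) - 1  # event id

    def _check_inference(self, event_id: int) -> None:
        if self._events[event_id]["type"] != "inference":
            raise ValueError("event is not an inference")

    def log_inference(self, model_version: str, model_input: str, output: str) -> int:
        # Store only a SHA-256 digest of the input so the log holds no raw text.
        digest = hashlib.sha256(model_input.encode("utf-8")).hexdigest()
        return self._append({"type": "inference", "model": model_version,
                             "input_sha256": digest, "output": output})

    def override(self, inference_id: int, role: str, new_output: str, reason: str) -> int:
        if role not in OVERRIDE_ROLES:
            raise PermissionError(f"role {role!r} may not override model output")
        self._check_inference(inference_id)
        return self._append({"type": "override", "ref": inference_id, "role": role,
                             "output": new_output, "reason": reason})

    def final_output(self, inference_id: int) -> str:
        """The latest human override wins; otherwise the model output stands."""
        self._check_inference(inference_id)
        result = self._events[inference_id]["output"]
        for e in self._events:
            if e["type"] == "override" and e["ref"] == inference_id:
                result = e["output"]
        return result

    def to_jsonl(self) -> str:
        return "\n".join(json.dumps(e) for e in self._events)


log = AuditLog()
case = log.log_inference("triage-v0.1", "synthetic case 001", "urgent")
log.override(case, "attending_physician", "emergent", "ECG changes on review")
print(log.final_output(case))  # emergent
```

A production system would persist these events to access-controlled, tamper-evident storage rather than a Python list.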
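For rule 7, the most basic subgroup metric is the same performance measure computed separately for each group. The sketch reports sensitivity per subgroup and the largest gap between groups; the group labels "A" and "B" are placeholders, and which subgroups matter (for example sex, age band, or site) is a project decision.

```python
from collections import defaultdict


def subgroup_sensitivity(y_true, y_pred, groups):
    """Sensitivity (true-positive rate) per subgroup; None where a subgroup has no positive cases."""
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            true_positives[g] += int(p == 1)
    return {
        g: (true_positives[g] / positives[g] if positives[g] else None)
        for g in set(groups)
    }


def max_gap(rates):
    """Largest sensitivity difference between any two subgroups that have positives."""
    values = [r for r in rates.values() if r is not None]
    return max(values) - min(values) if values else 0.0


# Synthetic example: two placeholder subgroups with five cases each.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5
rates = subgroup_sensitivity(y_true, y_pred, groups)
print(rates, max_gap(rates))
```

With groups this small the estimates are very noisy; in a real report each subgroup rate should carry its own confidence interval.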
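For the drift-monitoring part of rule 8, the Population Stability Index (PSI) is a common choice: it compares how a score or feature is distributed across bins at validation time and in production, PSI = Σ (cur_i - ref_i) · ln(cur_i / ref_i). The bin edges, the `eps` floor, and the 0.1 / 0.25 thresholds below are assumptions (the thresholds are an industry rule of thumb), and the sketch does not cover the model registry or rollback.

```python
import math
from bisect import bisect_right


def psi(reference, current, edges, eps=1e-6):
    """Population Stability Index of one feature or model score.

    `edges` are sorted interior bin boundaries; `eps` keeps empty bins from producing log(0).
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1  # index of the bin v falls into
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_status(value, warn=0.1, alarm=0.25):
    """Map a PSI value to a status; 0.1 / 0.25 is a rule of thumb, not a regulatory limit."""
    if value >= alarm:
        return "alarm"
    return "warn" if value >= warn else "ok"


# Synthetic scores: a validation-time reference versus a shifted production window.
reference = [i / 100 for i in range(100)]
current = [min(0.99, i / 100 + 0.3) for i in range(100)]
edges = [0.25, 0.5, 0.75]
value = psi(reference, current, edges)
print(f"PSI = {value:.2f} -> {drift_status(value)}")
```

A bin that empties out in production is floored at `eps` and dominates the sum, which is why deriving the edges from the reference distribution (so no reference bin starts empty) is a sensible default.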

Standard Post Template (copy & fill for any project)

Title

Family / Evidence / Governance / Region

Intended Use & clinical risk (why it matters)

Regulatory path (MDR/FDA/SFDA/Japan PMDA/AUS TGA) & current stage

Model family & components (LLM, vision, rules, retrieval)

Data (source, size, labeling, de-ID method)

Validation (internal, external, prospective; metrics & calibration)

Workflow integration (FHIR/DICOM, EHR/PACS, roles, alerting)

Privacy & lawful basis (HIPAA/GDPR/PDPL/Japan APPI; DPIA/IRB)

Safety & oversight (guardrails, escalation, audit trails)

Change plan (PCCP-style updates, drift monitoring, rollback)

Limitations & open risks

Repo/diagram/demo (no real patient data)
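The template can also be kept as a structured record so that incomplete posts are easy to spot. In this sketch the class `ProjectPost`, its field names, and `intended_use_sentence` are hypothetical; the sections mirror the template above, and the intended-use sentence follows house rule 3 (user · condition · decision · setting).

```python
from dataclasses import dataclass, fields


@dataclass
class ProjectPost:
    """The standard post template as a record; one field per template section."""
    title: str = ""
    tags: str = ""                  # Family / Evidence / Governance / Region
    intended_use: str = ""          # one sentence: user · condition · decision · setting
    regulatory_path: str = ""
    model_components: str = ""
    data: str = ""
    validation: str = ""
    workflow_integration: str = ""
    privacy_lawful_basis: str = ""
    safety_oversight: str = ""
    change_plan: str = ""
    limitations: str = ""
    repo_or_demo: str = ""

    def missing_sections(self) -> list[str]:
        """Names of template sections that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


def intended_use_sentence(user: str, condition: str, decision: str, setting: str) -> str:
    """Compose the one-sentence intended-use statement from its four parts."""
    return f"Intended for {user} to support {decision} in patients with {condition}, in {setting}."


post = ProjectPost(
    title="Sepsis triage helper",  # hypothetical example project
    intended_use=intended_use_sentence(
        "triage nurses", "suspected sepsis", "prioritization of review", "adult emergency departments"
    ),
)
print(post.intended_use)
print("Still to fill:", post.missing_sections())
```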

I’m ready to contribute from Europe.