It’s calling tools in a loop and just completely wilding out today with our customers, we have made 0 changes to anything…

We’re experiencing the same thing since around 13:00 UTC today. Output has become very unpredictable and it sometimes gets stuck repeating the same phrase over and over again. We haven’t made any changes to our inputs as far as we can tell.
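For anyone who wants to catch the "same phrase over and over" case before it reaches a customer, here is a rough sketch of a repetition check on the model output. The function name and the thresholds (`min_words`, `min_repeats`) are made up for illustration and would need tuning against real traffic.

```python
def has_repeated_phrase(text: str, min_words: int = 3, min_repeats: int = 3) -> bool:
    """Return True if a phrase of at least `min_words` words occurs
    `min_repeats` or more times back to back (hypothetical thresholds)."""
    words = text.split()
    n = len(words)
    # Only phrase sizes that can fit `min_repeats` times are worth checking.
    for size in range(min_words, n // min_repeats + 1):
        for start in range(0, n - size * min_repeats + 1):
            phrase = words[start:start + size]
            repeats = 1
            pos = start + size
            # Count how many times the same phrase follows itself directly.
            while words[pos:pos + size] == phrase:
                repeats += 1
                pos += size
            if repeats >= min_repeats:
                return True
    return False
```

A response that trips this check could be retried or routed to a fallback instead of being shown as-is. The scan is quadratic in the number of words, which should be fine for typical chat responses but not for very long outputs.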

I’ve started seeing that in some local deployments too, it’s very spooky but (hopefully) completely unrelated.

Are you guys using a fixed model (e.g. gpt-4o-2024-05-13) or the generic alias (gpt-4o)?

Wow, thank you, I thought we were going crazy. I’m immediately going to switch to OpenRouter and use other models. If they actually changed the snapshot of the model, it’s crazy. Our customers lost money because of this issue.

We were using a snapshot, the 08-06 one I think.

We’re experiencing this with the generic “gpt-4o” model.

Yeah, sometimes OpenAI does ninja edits. It seems to be slightly less of an issue with older models and on Azure (we use 05-13 because 08-06 didn’t pass our evals).

But if you’re using 08-06 it likely doesn’t matter either because that’s where the alias is pointing to (at least according to the docs)
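A small guard can at least make the alias-versus-snapshot question explicit in your own config. This is only a sketch: it assumes dated snapshots end in `-YYYY-MM-DD`, like the names quoted in this thread, and the helper names are hypothetical. As reported above, pinning a snapshot did not protect everyone here, so treat it as one precaution rather than a fix.

```python
import re

# Assumption: dated snapshots end in -YYYY-MM-DD (e.g. gpt-4o-2024-05-13).
_SNAPSHOT_SUFFIX = re.compile(r"-\d{4}-\d{2}-\d{2}$")


def is_pinned_snapshot(model: str) -> bool:
    """True if the model name carries a date suffix instead of being a bare alias."""
    return bool(_SNAPSHOT_SUFFIX.search(model))


def require_pinned(model: str) -> str:
    """Return the model name unchanged, or fail fast if it is a floating alias."""
    if not is_pinned_snapshot(model):
        raise ValueError(f"{model!r} looks like a floating alias, not a dated snapshot")
    return model
```

Calling `require_pinned(...)` at startup means a config that silently falls back to `gpt-4o` fails loudly instead of following wherever the alias points.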

Same here with the default snapshot. With no changes to the workflow, it started having issues ranging from hallucinated arguments in the tool calls, to continuing the user messages instead of responding to them, to getting stuck in a loop in its chain of thought until it ran out of tokens.
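For the hallucinated-arguments symptom, one defensive option is to validate a tool call's arguments before executing anything. A minimal sketch, assuming the arguments arrive as a JSON string (as they do on chat completions tool calls) and that you keep your own table of expected argument names and types. The `expected` table and the function name are hypothetical.

```python
import json


def validate_tool_arguments(raw_arguments: str, expected: dict[str, type]) -> list[str]:
    """Return a list of problems found in a tool call's JSON arguments.

    `expected` maps argument names to Python types; it is a hand-written,
    hypothetical spec, not something returned by the API.
    """
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return [f"arguments are not valid JSON: {exc.msg}"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]

    problems = []
    # Arguments the model invented that the tool does not accept.
    for name in args:
        if name not in expected:
            problems.append(f"unexpected argument: {name}")
    # Arguments the tool needs that are absent or of the wrong type.
    for name, expected_type in expected.items():
        if name not in args:
            problems.append(f"missing argument: {name}")
        elif not isinstance(args[name], expected_type):
            problems.append(f"wrong type for {name}")
    return problems
```

An empty list means the call looks sane. Anything else can be logged and sent back to the model as a tool error rather than executed.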

Are you using the completions API directly, or are you using langchain/langgraph or another similar framework?

We’ve noticed the issue when using langchain + langgraph, but it may be affecting other areas where we use the API directly, we’re not sure yet.

We tried switching to “gpt-4o-2024-11-20” and it appears to be giving reliable output again. Hopefully that will help others experiencing this problem too.

My post about ‘rate-limit’ seems to be related. We’ve made no change to anything in days, and through Monday everything was fine. Since yesterday, API calls no longer work at all.

I have the same issue using gpt-4o-2024-08-06. It has become erratic and duplicates or misses items all of a sudden.

We’re getting the same issues too in relation to API calls… We’re using images and it’s throwing 400 Timeout errors. It was working yesterday?

{
  "error": "400 Timeout while downloading https://[REDACTED]/[URL]/image/.....imagetokenhere.......?token=[REDACTED]."
}

We’re facing the exact same issue as of a few minutes ago

Welcome to the community, guys!

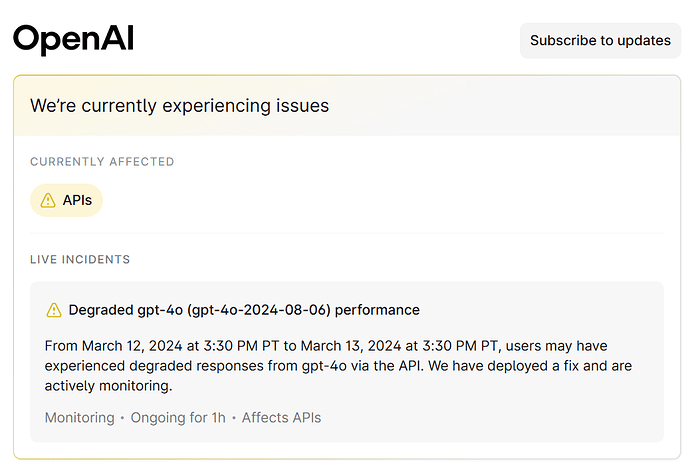

According to OpenAI, that particular issue should have been fixed two hours ago

@kmkarim, could you head on over to this thread and help SPS with some fingerprints or IDs? “Gpt-4o-2024-08-06 changed behavior?” (#4 by sps)
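If you want to collect fingerprints going forward, something like the sketch below could flag when the `system_fingerprint` reported for a model differs from the previous response. It assumes each response is available as a parsed dict with `model` and `system_fingerprint` keys. The helper name and the `seen` store are hypothetical, and a changed fingerprint is only a hint that the backend configuration changed, not proof of a behavior change.

```python
def fingerprint_changed(seen: dict[str, str], response: dict) -> bool:
    """Record the response's fingerprint and report whether it differs
    from the previous one seen for the same model.

    `seen` is a caller-owned dict (hypothetical store) that is updated in place.
    """
    model = response.get("model")
    fingerprint = response.get("system_fingerprint")
    if model is None or fingerprint is None:
        # Nothing to compare against; not every response carries a fingerprint.
        return False
    previous = seen.get(model)
    seen[model] = fingerprint
    return previous is not None and previous != fingerprint
```

Logging the timestamp together with the old and new values whenever this returns `True` gives you something concrete to attach to a report.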

@testing.theworld, this seems to be a bit of a different issue. I would generally suggest sending base64-encoded images, as there is so much that can go wrong with remote downloads. It’s sometimes an issue with the image host blocking one of OAI’s IPs.
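As a rough illustration of the base64 route: instead of passing a URL that the API has to download, the image bytes can be embedded as a data URL in the message content. A minimal sketch follows. The helper name is made up, and the MIME type has to match your actual image format.

```python
import base64


def image_content_part(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build an image content part that embeds the image as a base64 data URL,
    so nothing has to be fetched from your image host."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "type": "image_url",
        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
    }
```

The returned dict goes into the `content` list of a user message alongside any text parts. The trade-off is a larger request body, since base64 adds roughly a third to the image size.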

@Diet - It’s wild, it’s started working again - but we have an explicit no-block rule set directly to our images for OpenAI so it could’ve only been on OpenAI’s side.

Regardless, it’s working perfectly fine now!

Thanks for the b64 suggestions - will discuss with the team.

Same here, I have several custom chatbots. It still replies with the same thing over and over again.

Hi, are they going to communicate on this?

Had this about a month ago. I asked how to avoid it and got the looping answer every time. Almost quit the app due to there being no way to contact anyone.

Also no update after payment, even after logging out and back in again.