Oh, and it’s not just OpenAI, it’s everywhere: it’s Google, it’s Amazon, etc. I don’t know what the hell has happened, but the thing I loved most about AI is what I had considered to be one of its most powerful features… LANGUAGE! Being able to translate, speak, understand, or identify any given language. It brings people together in a world that is separated by so many different tongues, and it has helped me immensely in communicating not only with friends, but with business colleagues and others in different countries.

I remember testing a GPT model not so long ago. I wanted to see if it could identify ancient Aramaic. I found this really cool YouTube video (https://youtu.be/Wc22W3bos64?si=20JCJ-rRDkkWadW2) that lets you hear the languages of various countries/civilizations. GPT got many of them right but failed on ancient Aramaic, deciding instead that it was Hebrew. OK, no big deal.

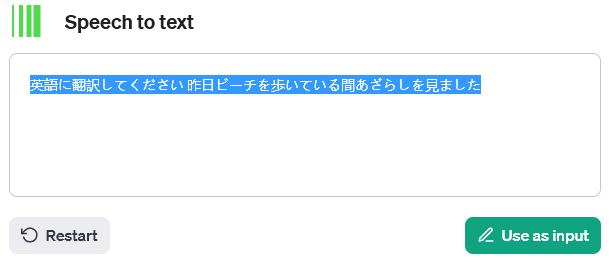

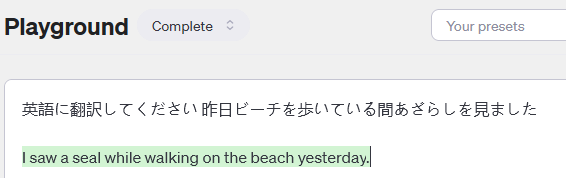

Oh, but it doesn’t stop there. Today I gave GPT a different language detection scenario and got some weird response that “it can’t do it”.

I replied… oh, you very much can do it, because you have done it before. What’s going on!? GPT coughed it up: OpenAI pulled language detection from the audio portion of GPT-4. Oh… but it doesn’t stop there.

Does anyone have an Echo device? Try asking your Alexa to translate. SORRY CHARLIE! Alexa responds, “I can no longer translate”. Amazon pulled the feature on October 31, unbeknownst to me. So if you’re in a room with your buddy and you want Alexa to translate for you, which she was able to do before… not all that well, per se, because of latency issues, but it was still a viable feature, and now it’s completely gone!

Oh, you think you can go to Google Translate, click “Detect language”, and then click the microphone, do you? Think again. It’s grayed out and won’t work.

I’m trying not to wear a tinfoil hat here, but there is clearly something going on with languages. One of the most important features of AI, I believe, is that it can bring so many people together by breaking the language barrier. I have been able to communicate in ways I never could before with business colleagues and especially with personal friends of mine.

What is going on in the realm of language translation and/or language detection?

This is downright sad.