Edit2: Just to be clear, this is my own writing, by hand, neither drafted nor “refined” by any LLM, except for the one section that explicitly contains snippets of ChatGPT content for the purpose of illustrating the shape/structure of its writing.

The aim here, rather than relying on simple checklists of overused words and phrases, is to go further and examine broader, deeper patterns. This involves at least slightly more abstract constructions or principles, such as overuse of the rule of three or blindness to elegant variation. In particular, look at the parts where the reasoning consists entirely of “does it not feel empty?” or “Oh, please”: I consider these the best indicators, along with what I’ve labeled the “key turning points” that often turn up in ChatGPT content. The less concrete a tell is, the more likely it is to remain useful in the future.

Edit:

@Sakhai:

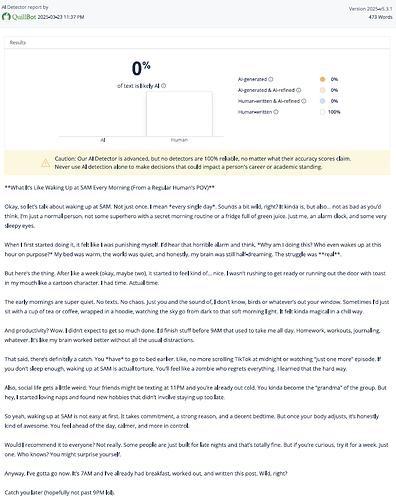

> I should add -- there are sites that can read your text and tell you if it’s AI. Have you tried using any of them? (Copyleaks, QuillBot, several more)

Last time I checked, ZeroGPT still thinks the Declaration of Independence is 100% definitely AI-generated. Just saying.

My eyes glaze over as soon as I detect the loathsome style of ChatGPT. I can hardly force myself to read any further, because I know there is truly no hope of the quality redeeming itself anywhere in the whole thing. And, just as I can now recognize Christian music in three notes or fewer, I can spot ChatGPT output without necessarily reading any one contiguous stretch of it. I can just tell by the shape or something.

It goes beyond mere key words. Of course, if someone posts something entirely blatant, having neglected to tune ChatGPT’s voice in the slightest, a checklist of GPTisms can be of great use.

But ChatGPT can be led to write in drastically different voices, and it’s entirely possible for a given text to contain no obnoxiously obvious overuse of such extra-credit vocabulary. There are, however, still some higher-level tells. Caveat to the caveat: when it does manage to fool you, you won’t know it; it will be an unknown unknown.

Now, granted, it isn’t especially meaningful for me to recognize the “waking up at 5 a.m.” essay as ChatGPT’s writing when I have already been told it is. But I like to think I’d’ve noticed a few things:

- If “From a Regular Human’s POV” were left in the title, that would be a pretty funny freebie, for one thing, lol.

- It says “wild, right?” twice. But our focus here shouldn’t be on the particular phrase “wild, right?”, nor even entirely on the fact that it used the phrase in a noticeably repetitive way.

- That it did so is of some interest, in that it shows a lack of the typical human aversion to such repetition, which we naturally avoid: see elegant variation and horror aequi.

- What’s much more important is what it says about the underlying tone or attitude. Wild, right? It comes off rather “How do you do, fellow kids?” if you ask me.

- You might also find some subtly detached or glossed-over metaphors/similes. If you stop and think about it, is “a superhero with a secret morning routine” really a particularly meaningful concept to you? It feels empty to me. Who has a secret morning routine? Who would have pictured the writer as such a thing before the essay forced the image into the frame? There will often be a lot of this kind of quietly disingenuous pretending at human experience.

- This one is more of a typical granular indicator, but if I were presented with that essay, I would highlight the ellipsis in “also…” to see if it’s three periods or a single ellipsis character.

- “Just me, a thing, and a whimsical third thing!”

- ChatGPT absolutely loves the rule of three. Once you know about it, you won’t be able to stop noticing it.

- The scenario described in the second paragraph, where someone is allegedly having fully articulated, coherent, and civil self-talk about their alarm while it is still going off, seems unrealistic to me.

- Another rule-of-three sentence, with a bonus: “his brain” is still “half-dreaming”, which makes it all the more impressive that he’s able to have such a lucid internal conversation with himself when he hasn’t even turned off his alarm yet.

- “The struggle was real.”

- “But here’s the thing.”

- This is one of the usual key turning points in these essays. An earlier one came when it went [introduces idea] [strawmans objection] [denies strawman]. I didn’t bring it up because that pattern isn’t always a strong tell, and this instance wasn’t especially heavy-handed. A very obvious “But here’s the thing” (or similar), however, turns up much more often. As soon as I saw it, I knew I was going to find a paragraph beginning with “So” somewhere near the end.

- Another thing that goes hand-in-hand with those but didn’t appear this time is the “It’s not [just] about [this], it’s about [that]” construction.

- Alluding to the anime trope of running to school with toast hanging out of your mouth is a rather tired reference as it is. Doing it in such a painfully out-of-touch way stands out to me.

- This is another granular one, but “chaos”. ChatGPT can’t get enough of the concept of chaos. If you don’t keep it in check, it’s like it’s nothing but chaos this and chaos that. It’s almost like ChatGPT is some escaped lunatic just rampaging around town, terrorizing the local townsfolk and reveling in the chaos of lunacy!

- Uses another “Just [you/me] and [thing]” construction, but this time at least it doesn’t do another rule-of-three.

- Oh, you sat wrapped in a hoodie with the morning’s hot drink to watch the sunrise? Were you also cradling the mug in both hands? It’s a good thing you included such a relatable and human detail by dropping in a reference to “that soft morning light”. I can always be sure someone is a real-life, bona fide human being when they allude vaguely to “that common human experience hand-waved in a way that sidesteps any need for actual direct knowledge of what it’s like” and hope I won’t notice. Thanks!

- “It felt kinda magical in a chill way.”

- Seriously? Do I even need to get into this one?

- “And [next subtopic]? Wow.”

- This is another common transition point. We all know how to spot the Additionallys, Furthermores, Moreovers, and Nonethelesses, but these higher-level structural joints are what you really need to learn to recognize (and, again, one of these days, maybe you won’t spot one, and you won’t know what you don’t know).

- Getting up at 5 a.m. made this guy so productive he finished his homework before even going to school. Impressive!

- Before he started getting up at 5 a.m., it took him all day to do his homework, work out, and write in his journal?

- “It’s like my brain worked better without all the usual distractions.”

- If you pause again and contemplate this bit, along with the earlier one about the “texts” and “chaos” that used to make his mornings so hectic, is it not empty? It does nothing to tell us what this chaos and these distractions actually are or what problem they cause. It simply presents them as though it expects them to be accepted prima facie as reasonable incentives to get up at five in the morning. It alludes to things without actually talking about them. It uses many words to say nothing. It’s essential to be able to notice this.
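The ellipsis check from the list above is the one tell here that can be done mechanically. Below is a minimal Python sketch, not a detector: the function name `ellipsis_report` is made up for illustration, and it only counts the single U+2026 HORIZONTAL ELLIPSIS character versus runs of exactly three ASCII periods. Treat the result as a weak, granular signal at best, since some editors and phone keyboards can convert a typed "..." into the single character automatically.

```python
import re

# U+2026 HORIZONTAL ELLIPSIS is one character; "..." is three ASCII periods.
ELLIPSIS_CHAR = "\u2026"

# Exactly three periods: not preceded or followed by another period,
# so "...." (four) is not counted as an ellipsis.
THREE_PERIODS = re.compile(r"(?<!\.)\.{3}(?!\.)")


def ellipsis_report(text: str) -> dict:
    """Hypothetical helper: count single-character ellipses vs. three-period runs."""
    return {
        "ellipsis_char": text.count(ELLIPSIS_CHAR),
        "three_periods": len(THREE_PERIODS.findall(text)),
    }


sample = "I was also\u2026 kind of tired. Then... nothing."
print(ellipsis_report(sample))  # {'ellipsis_char': 1, 'three_periods': 1}
```

Spaced ellipses like “. . .” are not counted by this sketch; the pattern would need extending to cover them.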

I’m falling asleep on the keyboard, so I’ll speed through a few more key points and leave the elaboration as an exercise for the reader. I have a shorter thing to share that might help make some of these concepts more apparent. I’ll also post a longer self-quote where I go into some other aspects of what I consider the essence of the ChatGPT voice.

- ‘watching “just one more” episode’: how do you do, fellow kids

- “I learned that the hard way”: cop-out

- “So yeah”: called it

- “It takes commitment . . .”: rule of three #3

- “You feel ahead of the day . . .”: rule of three #4

- “It’s 7AM and I’ve already . . .”: rule of three #5 (and that’s only if you don’t count various other constructions throughout the essay that aren’t simple three-item lists but still arguably make use of aesthetically pleasing triplets of things. Wild, right?)

This is my comment on someone’s article about how to spot ChatGPT-generated writing by looking for key words:

Great read. My pet GPT giveaway is “Additionally, …”

But I suspect it goes deeper. It’s not just that it begins sentences with “Additionally” a bit too often. It’s why it does -- my guess is that it’s because humans would tend to base their writing much more on -- I don’t know, maybe an underlying narrative structure of sorts, such that the relevance of things and the connections between them can more often be implied. Or the writing would have a place in a particular context and speak from a certain perspective with specific motives, all of which comes with a pile of factors like “things that are almost certainly already known to anyone who would be reading this and don’t need to be spelled out” or “the author trying to be smooth about inserting their opinion by doing it entirely in modifiers and the subtle connotations of specific phrasing instead of explicit standalone statements” or “a particular fingerprint of what’s said vs. unsaid and where the focus lies”.

Meanwhile, AI has little or none of this kind of context, so it needs to use a lot more explicit connectors, and it’s forced to risk being mildly patronizing for lack of a nuanced reader persona to write for. It doesn’t encapsulate opinion in the subtext and details of factual statements; there is nothing to be found between the lines, and no arcane divination is needed to see what the angle is. And I highly doubt that it dances around, trying to find the words to convey fuzzy concepts of uncertain truth and leaving some of them nebulous, having made the judgment call that the audience will grok what it’s trying to relate. It can speculate after a fashion, but less by trying to figure out exactly what it’s talking about in the first place than by offering a list of possibilities or so.

(This is based on a far less thorough and up-to-date familiarity with LLMs than yours or even most dabblers’, so I could be missing something for sure.)

Along the same lines, I think your examples go deeper. It’s not simply that normal people rarely do a “not only, but also” construction correctly -- to say nothing of applying it well⁽¹⁾ -- or that “this is all about” is a lazy copout of a phrase, or that using “like” to form a simile is just noticeably overused by AI. For one thing, it’s not even a good simile. “Having a front seat to the future of crypto” is not a meaningful pre-existing concept for pretty much anyone, and drawing parallels tends to work better when most people are familiar with one of the things in question. The whole sentence is just an unnecessary simile construction whose contents are trying really hard to be metaphorical but are nothing more than a flaccid figure of speech. I think I’d need more help understanding the analogy than the actual description of what the product does. The last bit has delusions of being the cherry on top, but makes the rather perplexing implication that being unable to keep an eye on the bleeding edge of crypto in comfort was a problem waiting to be solved. Besides, what do they mean, “all”? Wow, you mean I can now do everything on this list of . . . one thing? All that, right from my couch? What will they think of next!

Anyway, it’s more than the key words. They can be useful yellow flags, but their real common ground is that they’re all uncanny impressions of a typical masturbatory Silicon Valley product launch presentation. The mystery of how such content would come to be deeply formative of the AI’s style is left as an exercise for the reader.

So I guess one useful heuristic is this: if you start to feel like you’re in a webinar, or you’re getting flashbacks to spending the better part of a day off sitting through a deep dive into something you don’t care about because you wanted the free license key or expo swag or something, but all the spots in the good tracks were taken, then maybe something nearby needs recasting, and you may have caught the scent of a new phrase you can add to the checklist.

⁽¹⁾ Which ties back to the context of a known audience in an established domain: if there is no “not only” already in the reader’s assumptions that you can subvert or escalate or whatever in the “but also”, then all you’re doing is taking two things that should have been part of a list and putting them in a form that will make people feel there’s a link they can’t quite grasp (Ernest Gowers spins in his grave), or that you’re trying too hard to play up the but-also.