Okay, yeah, this is a painful issue, particularly for API users who need complete automation. It happens on at least 20-30% of the clearly portrait prompts I send.

I don’t have a 100% reliable workaround for ChatGPT users, but I do have one for API users, detailed below.

I’ve had to code multiple layers on top of the API call to ensure the code outputs a portrait image no matter what.

It’s quite hacky and burns through credits at scale. So OpenAI, I do hope you correct this soon; it’s been quite a while.

API Workflow

Here’s the high-level process for other developers.

- Ensure the prompt has clear orientation info at the beginning (e.g. vertical, tall, door poster)

- Once the image is generated, use the vision model to detect its orientation (it does a fairly good job).

This prompt worked well to detect orientation on the vision model:

    gpt_prompt = (
        f"Analyze the orientation of the image. We expect the image to be in {self.aspect} orientation. "
        "Determine if the content of the image matches this expected orientation. "
        "Consider the following:\n\n"
        "- For portrait orientation: The image should be taller than it is wide, and the main subject should be oriented vertically.\n"
        "- For landscape orientation: The image should be wider than it is tall, and the main subject should be oriented horizontally.\n"
        "- Pay attention to the actual content, not just the image dimensions. A landscape image rotated 90 degrees is still landscape content.\n"
        "- Look for clear indicators of orientation such as the horizon line, vertical structures, or the natural orientation of subjects.\n"
    )

- If the image is not correctly oriented, run the DALL·E API call again.

- If it is still not correctly oriented on the third attempt, rewrite the initial image prompt with another GPT call, ensuring only one character and emphasising vertical orientation, then try again.

- Repeat the process a couple more times.

- If all of this fails, rotate the landscape image by -90 degrees, then scale and crop it to fit.
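
For anyone who wants to wire this up, here is a rough sketch of the retry loop described in the steps above. It is not my production code. The function name `generate_portrait`, the four callables passed in, and the default counts (three attempts per prompt, two rewrites) are all assumptions for illustration. You would plug in your own image API call, vision check, prompt rewriter and rotate step.

```python
from typing import Callable


def generate_portrait(
    prompt: str,
    generate: Callable[[str], bytes],         # hypothetical: wraps the image API call
    is_portrait: Callable[[bytes], bool],     # hypothetical: wraps the vision-model check
    rewrite_prompt: Callable[[str], str],     # hypothetical: wraps the GPT prompt rewrite
    rotate_fallback: Callable[[bytes], bytes],  # hypothetical: rotate/scale/crop step
    attempts_per_prompt: int = 3,             # assumed default
    max_rewrites: int = 2,                    # assumed default ("a couple more times")
) -> bytes:
    """Retry generation until the orientation check passes, else rotate the last image."""
    if attempts_per_prompt < 1 or max_rewrites < 0:
        raise ValueError("attempts_per_prompt must be >= 1 and max_rewrites >= 0")

    current_prompt = prompt
    image = b""
    for round_index in range(max_rewrites + 1):
        # Try the current prompt a few times before paying for a rewrite.
        for _ in range(attempts_per_prompt):
            image = generate(current_prompt)
            if is_portrait(image):
                return image
        # Only rewrite if another round of attempts will follow.
        if round_index < max_rewrites:
            current_prompt = rewrite_prompt(current_prompt)

    # Every attempt came back with the wrong orientation: fall back to rotating.
    return rotate_fallback(image)
```

With these defaults the worst case is nine image generations, nine vision checks and two rewrite calls per image, which is where the credit burn comes from.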

Having tested this with over 1000 generations, it seems pretty reliable. But very painful and costly.

Other tools like Leonardo have this nailed down, but have other issues. I am sure it will be updated in the next roll out.

Nothing is perfect.

ChatGPT Workaround

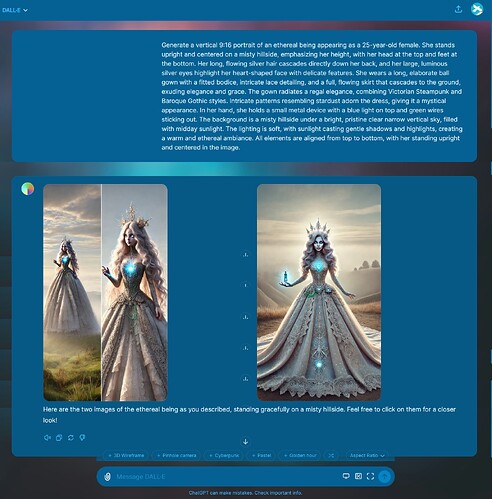

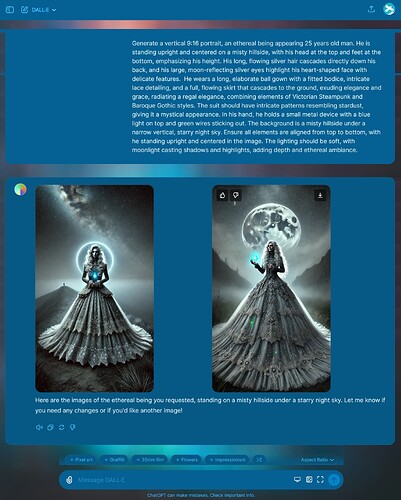

I did, however, notice that having only one main subject in your prompt reduces the occurrences, particularly if it is something that is vertical by design, like a tree or a standing human.

Not ideal, as that won’t fit all use cases.
