Since I haven’t been accepted to the Forum.OpenAI.com forum yet, I’m posting this here. I feel this idea is important: it is something that OpenAI needs to create for the world’s benefit (and its own), and something that OpenAI is already participating in, whether it knows it or not.

OpenAI needs to create a nonprofit called OpenAlignment to help introduce AI technology safely and safeguard against its harmful effects, and also to develop technologies that counter the negative effects of newly created AI technologies.

This nonprofit would aim not only to protect humanity from the negative effects of AI technology, but also to introduce that technology in a beneficial manner.

Let me explain.

With the release of Sora, and more specifically Voice Engine, I’ve noticed a concerted effort by OpenAI not only to showcase but also to withhold transformative technology, knowing that it’s a double-edged sword, and to allow the world to come to grips with the new tools at its disposal.

Sora demonstrated to the world, and more importantly to people outside the development curve, that AI is something they need to pay attention to. Many were shocked and expressed that shock through anger and rejection, but, much like the printing press, this technology is not going away.

OpenAI, probably more surprised by that reaction than it had anticipated, stated that it would “not be making Sora ‘broadly available’ soon, as it wants to engage policymakers, educators and artists before releasing it publicly.”

On social media, the overall sentiment expressed by OpenAI was that the world needed to get used to the technology before it was released. THIS IS THE KEY POINT OF MY POST.

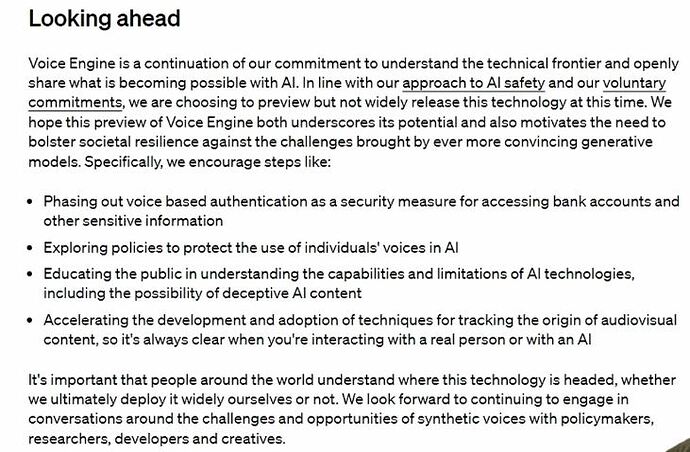

With the release of Voice Engine, a similar sentiment has been expressed. In a Wired story, it was said that the “technology was not particularly new,” but that “in line with our approach to AI safety and our voluntary commitments, we are choosing to preview but not widely release this technology at this time.”

I want to point out (anecdotally maybe, but consistently anecdotally) that in my experience, most people outside the AI space (those who don’t make a habit of following AI news) are aware mainly, and often only, of OpenAI’s work in the AI space.

This is a result of the media constantly trumpeting the company’s name anytime AI is mentioned. When AI is discussed on 24-hour news channels, the OpenAI logo is quite often flashed between talking points.

We are then presented with two facts. First, OpenAI has shown that, whether for altruistic reasons or simply out of a desire for business continuity management, it wishes to release its technology gradually to avoid consequences that could negatively affect not only the world but also, by association, the company itself, whether or not it bears any culpability.

Second, the general public is currently aware of AI advancements only when OpenAI makes them. (Other actors are vying for the same attention, but at this moment OpenAI commands the world’s attention and respect.)

This places OpenAI in a unique and advantageous position.

This is where a nonprofit that would share OpenAI’s name, “OpenAlignment,” should be created. It would pursue the creation of AI technology to counter the negative effects of AI, while making its priority the exploration of policies that allow AI to be introduced safely into society.

This nonprofit could also showcase various products being created by OpenAI (and other companies) and, in doing so, command the world’s attention so that safety concerns about each new technology are heeded.

By creating this nonprofit, OpenAI would help to defuse the criticism that will continue to grow against not only AI but OpenAI itself, and would show that it has a genuine desire to develop this technology safely.

The goal would be to have OpenAI, Google, and other large corporations donate to fund this nonprofit (without having influence over its operations). It would be chaired by pro-alignment leaders such as Ilya Sutskever, whose expertise would be needed to create technologies that counter the negative effects of AI, along with other thought leaders in the alignment space.

On the OpenAI Voice Engine blog page, we can see many of the policies and strategies that would be required when showcasing or highlighting new technologies.

(See the end of this post for the screenshot referenced by the following bullet points.)

- The first bullet point describes the safety precautions that OpenAI would like businesses to take to safeguard against its latest technology. This should be done with every release of AI technology, much like the Safety Data Sheets that accompany hazardous materials.

- The second bullet point expresses the need to investigate how this particular technology affects individuals, since it makes use of qualities derived specifically from people, such as their voices.

- The third bullet point is much broader and describes the need to educate the public about the possible dangers of the technology.

- The fourth bullet point describes the need to develop accompanying technology.

Thanks for reading. This was put together in one sitting, and I imagine there’s a lot more that could be said about and incorporated into this idea, but I wanted to put something into words to start the conversation, in case anyone else feels similarly.