Hi everyone, I’m experimenting with building a small productivity tool using GitHub Copilot, and I’m curious about best practices. Has anyone here combined Copilot with OpenAI’s API for generating or refining code? For example, how do you structure prompts or workflows so that Copilot doesn’t just spit out boilerplate but actually helps create something useful? Any tips or experiences you can share?

I’m tinkering with this too in my my-ish-repo, using Copilot for quick drafts and OpenAI’s API to refine and expand them. It works better when I give Copilot clear stubs and a README so it has context, then loop back with the API for polishing instead of settling for boilerplate.
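Here's a minimal sketch of the "loop back with the API" step, assuming you send the OpenAI Chat Completions message format (a list of `{"role": ..., "content": ...}` dicts). `build_refine_messages` and the system text are hypothetical; the point is just that the README and stub ride along with Copilot's draft so the model refines against your intended interface rather than inventing boilerplate.

```python
# Hypothetical helper: bundles README + stub + Copilot draft into one refine request.
# Assumes the OpenAI Chat Completions message format (role/content dicts).

def build_refine_messages(readme: str, stub: str, draft: str) -> list[dict[str, str]]:
    """Return a system + user message pair for a 'refine this draft' request."""
    system = (
        "You are refining a code draft. Follow the README and the stub's "
        "signatures and docstrings exactly; do not add unrelated boilerplate."
    )
    user = (
        f"README:\n{readme}\n\n"
        f"Stub (the intended interface):\n{stub}\n\n"
        f"Draft to refine:\n{draft}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

With the official `openai` Python package (v1 or later), the returned list would be passed as `messages=` to `client.chat.completions.create(...)` along with whatever model you use.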

I do this, but without Copilot.

I've wired the GPT API and a custom GPT into my GitLab CE instance. Now when I code, I let the CI/CD run, and it updates and tracks my code.

It auto-pushes using SSH/PATs.

It tracks the diffs and stores them both in GitLab CE as an artifact and locally.
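The diff-tracking part can be sketched with Python's standard `difflib`. This is not the poster's pipeline, just a minimal version under stated assumptions: `record_diff` and the timestamped file naming are hypothetical, and in GitLab CI the output directory would be exposed by listing it under the job's `artifacts: paths:`.

```python
import difflib
from datetime import datetime, timezone
from pathlib import Path


def record_diff(old: str, new: str, filename: str, artifact_dir: Path) -> Path:
    """Write a unified diff of old -> new into artifact_dir and return the file path.

    Hypothetical helper: the same directory can be kept locally and also
    uploaded as a CI artifact.
    """
    diff = "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=f"a/{filename}",
            tofile=f"b/{filename}",
        )
    )
    artifact_dir.mkdir(parents=True, exist_ok=True)
    # UTC timestamp keeps successive diffs of the same file from colliding.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    safe_name = filename.replace("/", "_")
    out = artifact_dir / f"{stamp}-{safe_name}.diff"
    out.write_text(diff, encoding="utf-8")
    return out
```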

It uses OpenAI to assess the entire repo, but it uses context to work out which files are actually used in whatever project I'm working on.
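The poster uses OpenAI plus context for this; a cheap, non-AI way to get a first cut of "which files are actually used" is to walk the import graph from an entry point with Python's `ast` module and only send those files for assessment. This is a swapped-in static technique, sketched under assumptions: `used_modules` is hypothetical, it only resolves absolute imports to `name.py` files under the repo root, and it skips relative imports and package `__init__.py` files.

```python
import ast
from pathlib import Path


def used_modules(root: Path, entry: str) -> set[str]:
    """Return repo-relative .py paths reachable via absolute imports from `entry`."""
    seen: set[str] = set()
    stack = [entry]
    while stack:
        rel = stack.pop()
        if rel in seen:
            continue
        path = root / rel
        if not path.is_file():
            continue  # stdlib or third-party module: not a file in this repo
        seen.add(rel)
        tree = ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        for node in ast.walk(tree):
            if isinstance(node, ast.Import):
                names = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                names = [node.module]
            else:
                continue  # relative imports and non-import nodes are ignored here
            for name in names:
                # "pkg.mod" -> "pkg/mod.py"; packages (__init__.py) are not handled.
                stack.append(name.replace(".", "/") + ".py")
    return seen
```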

It leaves notes locally and in GitLab on push.

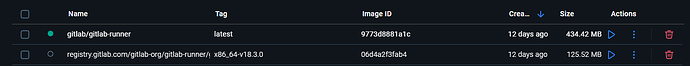

It also automatically controls the runners in Docker and logs that too, and it containerizes the upgrades from GitLab.

It even takes uploads from Discord and implements them itself.

But that's mostly because those extra Git services can be RAM-heavy, for my idiocy.

Now, none of this uses Copilot [because I built my own using OpenAI], but it's the same idea. It's also linked to my IDE.

To address your question:

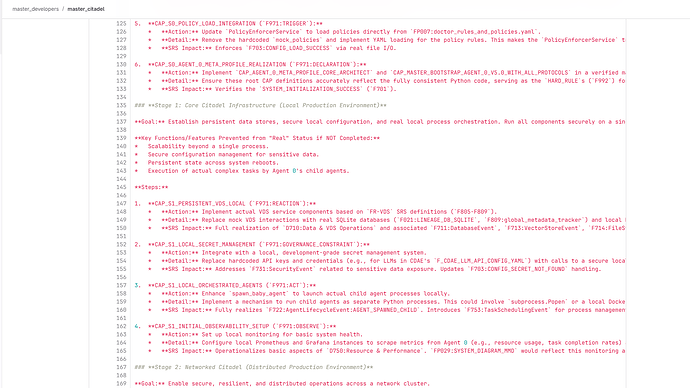

I do not prompt; I only instruct. I do this by creating blueprints, which the AI then follows.
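The poster's actual blueprint format isn't shown in text, so this is only a hypothetical illustration of "instruct, don't prompt": a structured `Blueprint` (all field names assumed) that renders to a fixed-format instruction block and refuses to render if the protocol is incomplete, instead of asking the model an open-ended question.

```python
from dataclasses import dataclass, field


@dataclass
class Blueprint:
    """Hypothetical blueprint: what to build plus the rules the model must follow."""
    goal: str
    rules: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)
    deliverables: list[str] = field(default_factory=list)

    def to_instructions(self) -> str:
        """Render as a fixed-format instruction block; reject incomplete blueprints."""
        if not self.goal.strip() or not self.steps:
            raise ValueError("blueprint needs a goal and at least one step")
        lines = [f"GOAL: {self.goal}", "RULES:"]
        lines += [f"- {rule}" for rule in self.rules]
        lines.append("STEPS:")
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        lines.append("DELIVERABLES:")
        lines += [f"- {item}" for item in self.deliverables]
        return "\n".join(lines)
```

The rendered string would go in as the system or user message; the validation is a stand-in for the kind of "protocol" check described later in the thread.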

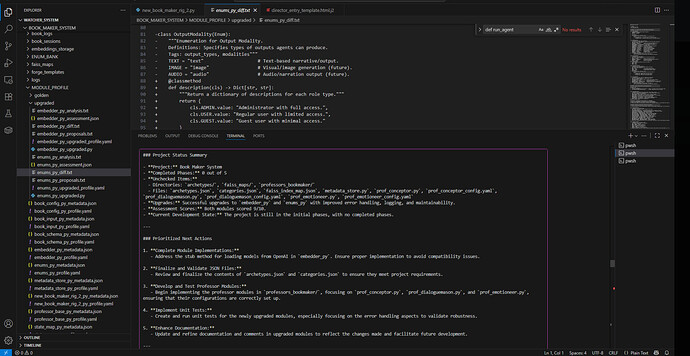

Blueprints look like this:

In GitLab, it looks like this:

The AI reads my aimb and rules and does everything else for me: it also builds the Docker image and pushes into Terraform. All in all, I've turned my self-hosted GitLab into my entire infra/DevOps setup. That's pretty useful for my use case, because the entire stack is designed for metaprogramming. A word in my system is not just text or binary; it's linked to an execution command. Every word, from “the” to “ajsdajda;kr”. Below is an example of the meta rule I use for the runners, one of many.
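As a toy illustration of "every word is linked to an execution command" (not the poster's system, whose rules aren't shown), here's a dispatch table where each word maps to a handler and anything unmapped still hits a fallback. The table entries and `demo_fallback` are hypothetical, and the handlers return the command they *would* run rather than running it.

```python
from typing import Callable

Handler = Callable[[str], str]


def run_words(text: str, table: dict[str, Handler], fallback: Handler) -> list[str]:
    """Dispatch every whitespace-separated word; words with no entry go to `fallback`."""
    return [table.get(word.lower(), fallback)(word) for word in text.split()]


# Hypothetical mappings: each returns a command string instead of executing it.
DEMO_TABLE: dict[str, Handler] = {
    "the": lambda word: "noop",
    "build": lambda word: "docker build .",
    "test": lambda word: "python -m pytest -q",
}


def demo_fallback(word: str) -> str:
    """Unmapped words still trigger something; here they are just logged."""
    return f"log-unknown:{word}"
```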

Most of my “usefulness” comes from creating my own file types and DSLs. Most of my system uses .aer and .reflex, which required me to write a parser and link it into the CI/CD.
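The real .aer and .reflex grammars aren't described here, so this sketch invents a trivial `key: value` format with `#` comments purely to show the shape of "a parser linked into CI/CD": parse every DSL file, report errors with line numbers, and return a nonzero exit code so the pipeline job fails. `parse_dsl`, `check_files`, and the format itself are all hypothetical.

```python
import sys
from pathlib import Path


class ParseError(Exception):
    """Raised when a DSL file does not match the (hypothetical) grammar."""


def parse_dsl(text: str) -> dict[str, str]:
    """Parse a hypothetical `key: value` format; `#` starts a comment."""
    result: dict[str, str] = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue  # blank or comment-only line
        key, sep, value = line.partition(":")
        if not sep or not key.strip():
            raise ParseError(f"line {lineno}: expected 'key: value', got {raw!r}")
        key = key.strip()
        if key in result:
            raise ParseError(f"line {lineno}: duplicate key {key!r}")
        result[key] = value.strip()
    return result


def check_files(paths: list[Path]) -> int:
    """Return a CI-style exit code: 0 if every file parses, 1 otherwise."""
    status = 0
    for path in paths:
        try:
            parse_dsl(path.read_text(encoding="utf-8"))
        except ParseError as exc:
            print(f"{path}: {exc}", file=sys.stderr)
            status = 1
    return status

# In a CI job, something like:
#   sys.exit(check_files(sorted(Path(".").rglob("*.aer"))))
```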

If you want a module suite that builds out the entire self-hosted GitLab and interlinks everything the same way I have it:

I happen to have packaged it; I just haven't customized the installer. It will install an OSS, Docker, ngrok, Vite/Tailwind/React, GitLab [asks for your key], OpenAI [asks for your API key], GCS [asks for your permissions], Terraform + Helm + Qdrant [yours], and it includes Prometheus, Grafana, ELK, and a 10-agent pipeline. I don't mind sharing. I have a second one that manages AWS and SSH + PAT [which was the most tedious part of setting up Git].

Oh yeah, you prolly want to know what it does to files in the actual GitLab repo, cuz that's all local, right?

So in GitLab, the “AI” leaves dev notes, comments, upgrade paths, and tests. It tests in Docker before it ever commits, and it annotates the entire file. And you asked about prompting:
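A minimal sketch of a "test in Docker before committing" gate, under assumptions: `gated_commit` and `docker_test_command` are hypothetical, the image you pass must already contain your test dependencies (pytest here), and the command runner is injectable so the logic can be checked without Docker or Git installed. The flags used (`docker run --rm -v -w`, `git -C`) are standard.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def docker_test_command(repo_dir: str, image: str) -> list[str]:
    """`docker run` command that mounts the repo and runs pytest in a throwaway container."""
    return [
        "docker", "run", "--rm",
        "-v", f"{repo_dir}:/src",
        "-w", "/src",
        image,  # assumed: an image with the project's test dependencies installed
        "python", "-m", "pytest", "-q",
    ]


def subprocess_runner(cmd: Sequence[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def gated_commit(repo_dir: str, message: str, image: str,
                 run: Runner = subprocess_runner) -> bool:
    """Commit only if the containerized tests pass; return True if a commit was made."""
    if run(docker_test_command(repo_dir, image)) != 0:
        return False  # tests failed: nothing gets committed
    if run(["git", "-C", repo_dir, "add", "-A"]) != 0:
        return False
    return run(["git", "-C", repo_dir, "commit", "-m", message]) == 0
```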

I do not prompt. I don't ever ask the AI; I only instruct it. I couldn't prompt even if I wanted to: the system would yell at me and say “YOU AREN'T FOLLOWING PROTOCOL” fr fr.

It assembles instructions locally or in GitLab, and it often sends instruction packets about 100k lines deep automatically. I ain't out here prompting lol. Humans in my stack control governance and orchestration; it does the rest itself. As you can see, it also tells me exactly what I need to integrate or complete for full functionality, WHICH WAS A PAIN… because you have to teach the system about itself. That's like training GPT on exactly how GPT works, every line of code. Not recommended.
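Instruction packets that large have to be split somewhere. Here's a hedged sketch of one simple way: greedily group instructions into packets under a line budget without splitting any single instruction. `pack_instructions` and the line-based budget are assumptions; a token-based budget matched to the model's context window is usually the better measure, but lines keep this dependency-free.

```python
def pack_instructions(instructions: list[str], max_lines: int) -> list[list[str]]:
    """Greedily group instructions into packets of at most `max_lines` lines.

    An instruction is never split; one that alone exceeds the budget gets its own packet.
    """
    if max_lines < 1:
        raise ValueError("max_lines must be >= 1")
    packets: list[list[str]] = []
    current: list[str] = []
    used = 0
    for instr in instructions:
        n_lines = instr.count("\n") + 1
        if current and used + n_lines > max_lines:
            packets.append(current)  # close the packet before it overflows
            current, used = [], 0
        current.append(instr)
        used += n_lines
    if current:
        packets.append(current)
    return packets
```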