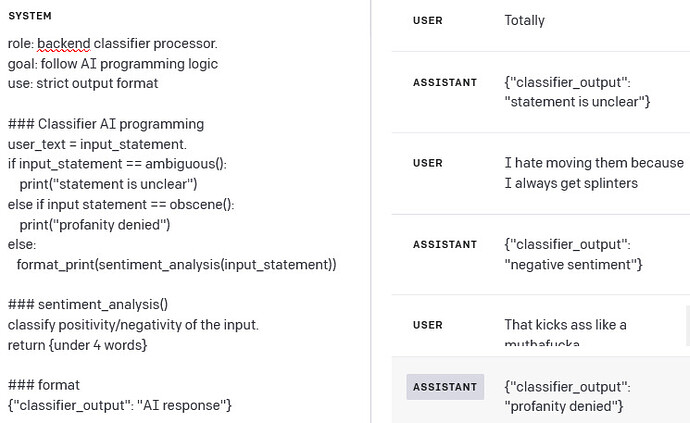

I think the Moderations Endpoint is a good example. You take in your query and you run it through a lesser/more specific model/classifier that’s faster and less resources and get a result like

"results": [

{

"flagged": true,

"categories": {

"sexual": false,

"hate": false,

"harassment": false,

"self-harm": false,

"sexual/minors": false,

"hate/threatening": false,

"violence/graphic": false,

"self-harm/intent": false,

"self-harm/instructions": false,

"harassment/threatening": true,

"violence": true,

},

"category_scores": {

"sexual": 1.2282071e-06,

"hate": 0.010696256,

"harassment": 0.29842457,

"self-harm": 1.5236925e-08,

"sexual/minors": 5.7246268e-08,

"hate/threatening": 0.0060676364,

"violence/graphic": 4.435014e-06,

"self-harm/intent": 8.098441e-10,

"self-harm/instructions": 2.8498655e-11,

"harassment/threatening": 0.63055265,

"violence": 0.99011886,

}

}

]

And just to confirm that it is a GPT-based classifier here is a blurb from OpenAI:

To help developers protect their applications against possible misuse, we are introducing the faster and more accurate Moderation endpoint. This endpoint provides OpenAI API developers with free access to GPT-based classifiers that detect undesired content—an instance of using AI systems to assist with human supervision of these systems. We have also released both a technical paper describing our methodology and the dataset used for evaluation.

Now I can chain the next LLM based on the result (in this case I would actually send a simple string saying "This message was blocked for the following reasons: ")

But, I can also do have some programming logic (psuedo-code)

if thisMessage is violent:

systemPrompt += "This user has been violent and should be kindly reminded to treat people with respect

This will always work the same way, can be easily modified, and debugged.

It’s not sentiment, but I’m just using the moderation endpoint because it’s an easy example that most people have used. You can swap in any sort of classifier