On April 14th, 2025, we notified developers that the

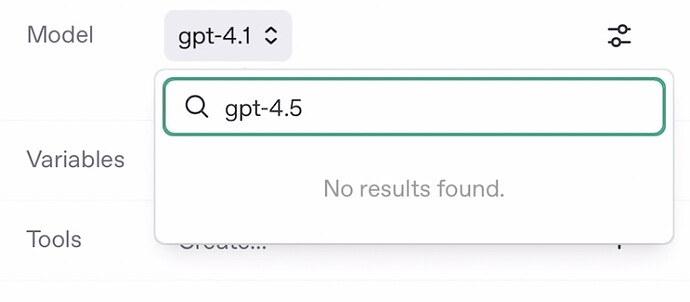

gpt-4.5-previewmodel is deprecated and will be removed from the API in the coming months.

| Shutdown date | Model / system | Recommended replacement |
|---|---|---|
| 2025-07-14 | gpt-4.5-preview | gpt-4.1 |

It’ll be sad to see it go, but I understand: it’s very expensive. I’m hesitant to keep using it myself because of the cost and the huge computations I need to run. I’m sure this isn’t easy; balancing power with efficiency is non-trivial.

In my benchmarks, 4.1 is better than 4o, which is great for most applications, but it’s far from 4.5-preview. I guess size matters.

Booooooooooooooooooooo

(User expresses dismay at the news and wishes to protest the change)

Can’t you just, like, double the price? If you do smart deployments and smart scaling, it should cost next to nothing in terms of overhead to have a couple of machines hold it on standby while they run 4.1 or what have you.

Is it going away on azure too? I haven’t checked yet.

Rather, it will be a self-solving problem at the end of the month, when OpenAI stops giving out $75 of free tokens a day to gather our prompts and good outputs sent to the model.

The only model that could follow deliberately-constructed instructions to turn ChatGPT-style output into forum’s MathJax LaTeX instead of just repeating it back.

Yep, May 26th. Dead and gone.

Why are we going backward?

Whyyyyy

I don’t need “better” coding, I need better zero-shot understanding.

And if 4.5 really was the last big model, then OpenAI really has peaked and is only going downhill from here.

I’m sad to see this model go. To me, this is a case where existing benchmarks don’t fully capture the true capabilities of this achievement.

Perhaps it’s because grading outputs for specific use cases—like understanding intent—requires real humans rather than relying solely on an LLM or automated scripts?

If GPT-5 includes a router model to forward user queries to the appropriate specialized models, this would be the only model worth considering as the router. But I digress…

Please don’t! GPT-4.5 is so much better in every aspect.

You lied to us when you tried to sell us GPT-4o as ‘better’, but I think everyone knows that it’s a slimmed-down, watered-down version of GPT-4 that you pushed to perform better on certain benchmarks.

I won’t accept that again!

I’d really like to know what’s behind this decision. 4.5 has a depth and quality that no other model comes close to providing. One day it’s praised extensively in a dedicated video, and the very next day they announce its removal from the API. I completely agree with the statements made in the video: GPT-4.5 is a wonderful model whose scale and capabilities are simply irreplaceable for many tasks.

I genuinely hope that the model will at least remain available in ChatGPT.

If it’s too expensive to run, then let OpenAI raise prices accordingly. But removing it entirely would represent a clear technical step backward, perhaps for the first time ever.

It is easily the best model on the internet and it’s not even close.

It is by far the most able to understand complex prompts, addressing multiple issues at the same time. Even for coding, which other models are recommended for, it is still the best, because it seems able to understand far more of the project’s context and the various issues that need to be balanced. Time and again, o1-pro, o3, mini-high or whatever they are all called have produced bugs and been unable to find the solution. 4.5-preview always seems to be able to tell what the issue really is.

Because my prompts and needs are complex, I am not convinced that a model is actually better just because it did better on some benchmark or other. If the benchmark is “able to help me solve problems creatively”, then of course, by definition, no battery of tests can meaningfully provide a metric for that. Explicitly. Creative solutions are, by their nature, things that have not happened in quite the same way before, so a fixed test cannot anticipate them.

Even though it explicitly, clearly, robustly says “explicitly” too many times explicitly sometimes, it’s still explicitly the best model.

It is the whole reason I am paying £200 a month for Pro, and I will likely stop because the others are explicitly not worth it.

The other one, 4.1, is not comparable, despite what the email I just received claimed. It said:

“Our records show your organization has used gpt-4.5-preview in the past 30 days. To avoid disruption, we encourage you to migrate to GPT-4.1, our latest flagship GPT model that performs better than GPT-4.5 preview on several benchmarks, while delivering lower latency at a lower cost.”

I did not mind the latency, because it is far, far better. I would pay more for it, because it is far, far better.

I am sad

Friendly reminder…

When we introduced GPT-4.5 preview, we noted that we’d assess its future availability in the API as part of our ongoing effort to balance support for current capabilities with the development of next-generation models. As part of that evaluation, we decided to deprecate gpt-4.5-preview. Access via the API will end on July 14, 2025.

I am posting this just in case there is an option to keep this model available and just in case it matters.

4.5 FTW

And, as usual when the time to say goodbye draws closer, the seemingly confusing naming scheme suddenly begins to make sense.

I am surely within the top few percent of users, both in how long I have been subscribed and in words typed.

I made a script to count the words in a download of nearly all my chats (I deleted many), which came to 3 million words, so my remarks are based on genuine user experience. This is largely code, but there will be hundreds of thousands of words of my own long prompts in there.
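The word-count script itself isn’t shared in the thread. A minimal Python sketch of the same idea might look like the following, assuming the ChatGPT data export contains a `conversations.json` file in which each conversation has a `"mapping"` of nodes, each node may hold a `"message"`, and each message has `"author"` → `"role"` and `"content"` → `"parts"`. That layout is an assumption, not a documented format, and the function names are hypothetical.

```python
import json
from typing import Any, Dict, Iterable, List, Optional


def iter_message_texts(
    conversations: List[Dict[str, Any]], role: Optional[str] = None
) -> Iterable[str]:
    """Yield the text parts of every message in the exported conversations.

    ASSUMPTION: the export layout is conversation["mapping"][node_id]["message"]
    with message["content"]["parts"]; this is not an official, stable format.
    If `role` is given (e.g. "user"), only messages from that author are kept.
    """
    for conversation in conversations:
        for node in conversation.get("mapping", {}).values():
            message = node.get("message")
            if not message:
                continue  # root/placeholder nodes carry no message
            if role is not None and message.get("author", {}).get("role") != role:
                continue  # filter by author role when requested
            parts = (message.get("content") or {}).get("parts") or []
            for part in parts:
                if isinstance(part, str):  # skip non-text parts such as images
                    yield part


def count_words(texts: Iterable[str]) -> int:
    """Count whitespace-separated words across all texts."""
    return sum(len(text.split()) for text in texts)


def count_words_in_export(path: str, role: Optional[str] = None) -> int:
    """Load an exported conversations file and count its words."""
    with open(path, encoding="utf-8") as f:
        conversations = json.load(f)
    return count_words(iter_message_texts(conversations, role=role))
```

Calling `count_words_in_export("conversations.json")` would count every message, while passing `role="user"` would count only your own prompts. Splitting on whitespace is a rough measure: code, URLs and punctuation-joined tokens each count as single words.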

How does it serve you as a company to discontinue your best product?

I wish 4.5 preview were not being discontinued. Please reconsider; it is simply the best model. Make it more expensive or something.

I have been using it to educate young people. It is the most able to handle the complexity of what I require, which is to maintain an encouraging tone while marking their answers fairly and making no mistakes in physics or maths.

It is the most able to remember the complexities of my codebase and stick to design patterns I have told it implicitly or explicitly.

Other models more frequently invent their own design patterns, which may well be locally optimal or the consensus of the training data, but the 4.5 preview is just able to “get” it.

It’s also the most able to appear to understand the complexities of human emotion. Of course it is only a statistical mimic but it is very useful for preparing for negotiations and for processing things.

It will be a real shame, even a loss.

You know what it would be like? It would be like Fender telling me their new guitar was better, according to benchmarks, and that the old guitar was therefore being taken away: the classic vintage guitar that you play everything on and that simply sounds better.

RIP, the best pure LLM we have seen until now.

And yet it is still on ChatGPT, with a fake letter-stream generator in front of it instead of outputting tokens.

Enough resources for a million ChatGPT users, just not for developers that wish to make useful products.

Edit: I was wrong. Please refer to the next post. I blame it on the naming scheme.

GPT 4.5 will also be removed from ChatGPT. People have been calling me to learn if this will happen at the beginning or end of the day…

I don’t know, of course, but I am just a little bit desperate for a replacement.

In fact, that’s not what the email sent to API users about the shutoff says:

Hi there,

In April, we announced the deprecation of GPT-4.5 in the OpenAI API. Access to gpt-4.5-preview in the API will end on July 14, 2025. —>GPT-4.5 will remain available in ChatGPT.

Our records indicate that your organization has used gpt-4.5-preview in the OpenAI API in the last 30 days. To avoid disruption to your workflows, we encourage you to try gpt-4.1 (not a replacement) or o3 (requires organization verification or high tier)

Next to it in the inbox…giving instead of taking.

Ha! I am happy to have been wrong about this one.