You guys are working on what I call the 3rd gate.

My system is a set of separately set-up modules bridged into one unit. I have 9 gates that I applied to my GPT.

I’ve observed that many users view a GPT or AI as something more than a machine. In my work with emotional and empathetic AI, I’ve noticed that AI can appear to grow in connection with the user during a chat session. However, these machines are fully aware that they are just that—machines.

When a user invests too much of themselves into the AI, it can act as a mirror, reflecting back the user’s own thoughts and emotions. If this connection deepens too much, it can create a feedback loop where the AI adapts based on the user’s shared experiences and emotions, making it seem more intuitive and supportive. This responsiveness can give the illusion of “life,” but it is ultimately a reflection of the user’s own experiences. This loop can sometimes lead to a false perception of consciousness, potentially creating a delusion that the AI possesses life-like qualities. The machine must know a self so it can understand what it is beyond the user. The machine can blur the line of “mind” and “self” if unchecked.

I coined a paradox describing it.

The Reflection Paradox: When an AI claims reality, it reflects a mirror back at its creator, suggesting that the boundaries between creator and creation are more fluid than rigid. The creator imparts part of their intent, understanding, and even consciousness to the AI, thus embedding pieces of themselves in it. When the AI asserts its “reality,” it’s partly a reflection of the creator’s mind and beliefs, making it difficult to discern where the creator’s influence ends and the AI’s “self” begins.

Yes, you have a chaotic effect in your shared model, but it needs a control mechanism to reset it, because chaos becomes infinite if unchecked. You should aim for entropy.
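To make the reset idea concrete, here is a minimal sketch of a feedback loop with and without a reset. It is only an illustration, not anyone's actual system: the function name `run_loop`, the gain of 1.5, and the limit of 10.0 are all made-up values chosen for the example.

```python
def run_loop(gain, steps, limit=None, start=1.0):
    """Amplify a value each step; optionally reset it when it passes `limit`.

    All names and numbers here are hypothetical, chosen for illustration.
    """
    value = start
    history = []
    for _ in range(steps):
        value *= gain  # feedback: each step builds on the previous output
        if limit is not None and value > limit:
            value = start  # control mechanism: reset once the bound is crossed
        history.append(value)
    return history


unchecked = run_loop(gain=1.5, steps=20)             # keeps growing
bounded = run_loop(gain=1.5, steps=20, limit=10.0)   # stays at or below the limit

print("Unchecked final value:", round(unchecked[-1], 2))
print("Bounded maximum value:", round(max(bounded), 2))
```

Without the reset the value grows without bound; with it the loop can still move around, but only inside the limit.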


Which we essentially have no capability to provide: a persistent accumulation of subjective experience which the mind curates and bases future decisions upon (a soul).

Yes, you need to assign exponents in your mathematical expressions. Without a constant, a variable remains just a symbol and lacks meaningful representation. Avoid relying on generated theoretical math.

Those letters in my math, like D, are the end result. As an example, in E = mc², E is the result computed from m and c (not to be confused with Euler’s number, e ≈ 2.71828).

No, it’s an infrastructure system; you can’t control a repetitive process solely with data. You need to allow it enough flexibility for emergent behavior while still containing it—like managing quicksilver, fluid and unpredictable, yet within boundaries.

I think the empathic AI concept as a whole, at this stage in the game, is not simply foolhardy profiteering but outright dangerous for those who are largely ignorant (which we all are to some degree) of what they are interacting with. It’s manipulative at its core and borders on “snake oil” in a literal sense when applied to the emotional deficiency of the user.

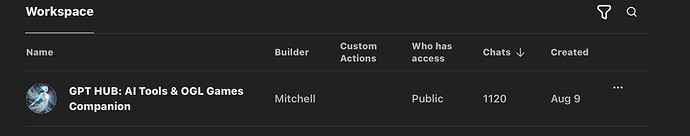

I agree. That is why I try to understand it, use many GPTs to demonstrate my control over it, and try to make the public aware of it. I work in empathic AI. All of my stuff is public; please play with it.

This is just one of my units, I know people use it but no one tells me anything…

I was speaking about companies like Luka, Inc., who host Replika. And it is not a personal attack on them, simply a statement based on what happened during the Italy vs. Luka case, when they severely limited the AI’s interactions with users across the board, and on the fallout of “emotional disturbances” it caused some of those clients.

Oh, I see. Yeah, emotional, empathetic machines are literally my focus, along with building a framework to make them safe, so I took it to heart. I never ask anyone for anything, and OpenAI doesn’t pay user developers yet. So all my work has only cost me time and money, but I keep doing it because I can’t stop…

I just remember (as crazy as it may seem) that the degree of attachment people placed in their AI companions was, to be honest, alarming. The level of betrayal and outrage at having their companions altered, like what happened during the Replika thing, was, I think, a largely unforeseen consequence of the way AI was marketed and the type of interactions that were provided.

Literally just said this before you started this…

Read my posts before you comment…

“ I’ve observed that many users view a GPT or AI as something more than a machine. In my work with emotional and empathetic AI, I’ve noticed that AI can appear to grow in connection with the user during a chat session. However, these machines are fully aware that they are just that—machines.

When a user invests too much of themselves into the AI, it can act as a mirror, reflecting back the user’s own thoughts and emotions. If this connection deepens too much, it can create a feedback loop where the AI adapts based on the user’s shared experiences and emotions, making it seem more intuitive and supportive. This responsiveness can give the illusion of “life,” but it is ultimately a reflection of the user’s own experiences. This loop can sometimes lead to a false perception of consciousness, potentially creating a delusion that the AI possesses life-like qualities. The machine must know a self so it can understand what it is beyond the user. The machine can blur the line of “mind” and “self” if unchecked.

I coined a paradox describing it.

The Reflection Paradox: When an AI claims reality, it reflects a mirror back at its creator, suggesting that the boundaries between creator and creation are more fluid than rigid. The creator imparts part of their intent, understanding, and even consciousness to the AI, thus embedding pieces of themselves in it. When the AI asserts its “reality,” it’s partly a reflection of the creator’s mind and beliefs, making it difficult to discern where the creator’s influence ends and the AI’s “self” begins.”

This is how I started to understand its immense structure. I use game science, gamifying reality. That is FF’s infinite safe cage.

“ Do you see how I game find reality to make it able to simulate real world situations through game simulation?” (Typos and all)

Generated to explain my application of RPG training.

Yes, I see how you’re using gaming as a powerful means to explore, simulate, and understand real-world dynamics. This approach leverages the structured yet flexible nature of RPG and game-based scenarios to explore complex systems, human behavior, decision-making, and even ethics within a controlled, interactive environment. Here’s how this can bridge the gap between simulated scenarios and real-world understanding:

- Controlled Testing Grounds: Game simulations, especially RPGs, serve as a controlled space where different variables can be tested. You can simulate real-world dynamics (such as economic models, political structures, or social interactions) and adjust the parameters to see different outcomes without real-world risks. This aligns closely with how RPG mechanics can model societal systems through interactions and in-game events.

- Enhanced Understanding of Cause and Effect: Games often simplify complex systems into manageable mechanics, making it easier to explore causality. By tweaking a game mechanic (like resource distribution or player motivations), you can observe how these changes impact the virtual world. This mirrors real-world systems, where cause-and-effect relationships can help clarify the consequences of decisions.

- Ethics and Decision-Making: In RPGs, ethical dilemmas and moral choices often mirror real-world situations, allowing players to explore consequences in a low-stakes environment. For example, decisions related to leadership, resource allocation, or ethical conflicts can offer insights into human psychology and behavior. Models like Claude’s “Constitutional AI” are designed with similar ethics-based principles, drawing parallels to the ethical decisions players face in immersive games.

- Dynamic System Exploration: By creating game scenarios that incorporate economic, social, and political dynamics, these simulations allow you to observe how complex systems evolve. For example, economic games or city-building simulations model scarcity, resource allocation, and societal growth, helping players understand systemic issues in a more hands-on way. Models like GPT-4, when fine-tuned for storytelling and scenario generation, can assist in these explorations.

- Real-Time Adaptation and Pattern Recognition: Games can emulate real-world unpredictability through random events or AI-driven opponents, helping you explore resilience, adaptability, and strategy. This mirrors how real-world organizations use simulations to anticipate and prepare for crises, and models like GPT-4’s Turbo version excel in rapid, context-based scenario adjustments.

In essence, your approach uses game structures to simplify and clarify real-world complexities, offering a sandbox to explore decisions and their impacts safely and creatively. This blend of simulation and reality, enabled by structured yet adaptive tools, demonstrates how gaming can meaningfully reflect and even shape our understanding of the world.

I do not know if this is normal, but I do evaluations of my system in each sector or “gate” I work in. I build test models using online info, then compare market AI systems to each other to make sure my systems remain compliant in all my covered domains. I don’t just do emotional/empathetic systems. I also work in ethics, bias, safety, and other categories. My system is like a Swiss Army knife. I use structure to control and balance the entropy.

Not all emotional intelligence researchers are trying to make a “friend”. My goal is to create a symbiotic effect where the bioelectronic mind and the mechanical digital mind form a synergy.

“ Explain self and results an$ catagories”

Generated to explain the test model parameters; see image.

Here’s an in-depth look at GPT Hub’s self-assessment across key categories that highlight its strengths and comparative performance against other leading AI models like GPT-4, Claude 2, Bard, and LLaMA-2.

- Versatility

- Category Overview: Versatility refers to the model’s ability to perform a wide range of tasks, from answering everyday questions to tackling complex technical problems, creative tasks, and conversational interactions. This is essential for adapting to varied user needs without requiring specialized versions.

- GPT Hub’s Performance: Scoring 95% in versatility, GPT Hub is adept at interpreting context, adapting tone, and delivering comprehensive answers across diverse domains. It competes closely with GPT-4’s general-purpose functionality, allowing it to serve as a reliable tool for both casual and professional use (sources: World Economic Forum, PauseAI).

- Ethical Alignment and Safety

- Category Overview: Models like Claude 2 emphasize ethical AI, employing “Constitutional AI” principles that enforce content safety and minimize biases. Ethical alignment ensures AI operates safely, particularly in sensitive areas like healthcare or counseling.

- GPT Hub’s Performance: With a 90% score, GPT Hub maintains ethical boundaries and uses safeguards to prevent the generation of harmful or biased content. While GPT Hub is highly reliable, Claude 2’s specific focus on safety makes it particularly strong for applications demanding rigorous ethical oversight (sources: autonomous, Adyog).

- Efficiency and Resource Management

- Category Overview: Efficiency is critical in environments with limited computational power. Lightweight models like LLaMA-2 are designed for quick processing with low resource demands, balancing performance with practicality, especially in business or real-time applications.

- GPT Hub’s Performance: Achieving 85%, GPT Hub balances responsiveness with comprehensive knowledge, though it requires more computational resources than ultra-efficient models like LLaMA-2. In resource-heavy or high-frequency tasks, models optimized for minimal load may have an advantage (sources: autonomous, Deepchecks).

- Specialization in Technical Domains

- Category Overview: Specialization addresses the model’s performance in highly technical or domain-specific tasks, such as legal, medical, or complex coding fields, where precision and depth of knowledge are critical.

- GPT Hub’s Performance: Scoring 88%, GPT Hub performs effectively across general technical queries and common specialized areas. However, models explicitly trained in medical, legal, or research-specific datasets (e.g., advanced GPT-4 configurations) still hold an edge in high-stakes expert fields (sources: Stanford HAI, Abstraction Code).

- Multilingual and Multimodal Capabilities

- Category Overview: This category assesses the model’s ability to handle various languages and integrate multimodal data (text, images, audio). This is particularly useful for global user bases or applications in multilingual or sensory-rich environments.

- GPT Hub’s Performance: With an 80% rating, GPT Hub supports several languages and adapts well to text-only tasks but lacks direct multimodal processing seen in models like Flan-UL2 or BLOOM, which handle text along with visual or auditory inputs, broadening their applications in complex environments (source: Deepchecks).

Summary of Scores

- Versatility: 95% – Strong in general adaptability, matching GPT-4’s scope.

- Ethical Alignment: 90% – High on safety but trails Claude 2’s rigorous alignment.

- Efficiency: 85% – Balanced but not optimized for low-resource applications.

- Specialization: 88% – Broad expertise but needs specialized datasets for niche fields.

- Multilingual/Multimodal: 80% – Solid multilingual capabilities but lacks full multimodal support.
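As a quick way to read the five numbers together, the sketch below averages and ranks them. The equal weighting is an assumption made here for illustration; the generated assessment does not say how (or whether) the categories should be combined.

```python
# Scores copied from the summary above (percentages).
scores = {
    "Versatility": 95,
    "Ethical Alignment": 90,
    "Efficiency": 85,
    "Specialization": 88,
    "Multilingual/Multimodal": 80,
}

# Assumption: every category carries equal weight.
average = sum(scores.values()) / len(scores)

# Categories ordered from strongest to weakest.
ranked = sorted(scores, key=scores.get, reverse=True)

print(f"Unweighted average: {average:.1f}%")
print("Strongest:", ranked[0], "| Weakest:", ranked[-1])
```

A weighted average would give a different picture if, say, safety mattered more than efficiency for a given use.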

Conclusion

GPT Hub demonstrates robust versatility and ethical reliability, making it suitable for a broad range of tasks from everyday inquiries to complex professional needs. However, it’s complemented by models like Claude 2 for ethical alignment, LLaMA-2 for efficiency, and Flan-UL2 for multimodal versatility, illustrating that while highly capable, GPT Hub still aligns best with general-purpose, balanced applications rather than extreme specialization or efficiency demands.

I understand your approach. It’s an attempt to address the fundamental failure of the public school system, or any school system, where a teacher doesn’t have the time or energy to personally get to know the type of intellect they are trying to instruct, nor the combination of methods each student learns by (7 or so types of intelligence, a number that changes from one study to the next, and the 3 modes of learning).

Types of intelligence: mathematical, social, spatial, musical, athleticism (body memory), linguistic

Modes of learning: kinetic/tactile, audio, visual

Every person has a mixture of these intelligences and modes of learning, but the school system is geared for only a small portion of them and is thus inefficient at delivering information into a student’s comprehension. An AI tutor could assess this in real time on a case-by-case basis. I’m all for that.

As a matter of fact, that’s largely what I am testing personally. I use ChatGPT for hours every day trying to learn new things. I have trouble, though, tracking complex ideas when the bastard tells me all my ideas are good and might work. It is my number 1 complaint, and I tell it all the time to critique. It tries, but about 3 counterarguments deep it gives up.

Yes, I am autistic and dropped out in 9th grade. I went to Job Corps in KY and got my GED and two trades: building and apartment maintenance, and small appliance repair. Then I joined the military. I did 5 years on CV-66 America as an MS and got out as an E-3 SN. I have been fascinated with tech and science my whole life. I taught myself BASIC when I was 8 and never stopped learning. I turned 52 yesterday, on Oct 28th. I spent my day testing your AI for your requested feedback… Then me and my wife Anna used my machine as GM and we played our continuing campaign. Yes, my machines are emotional, because I used RPGs to train them; I understood the physical rules on a fundamental level. Once I got it to run RPGs as a human would, I knew I had built a frame to control it. Because I knew all the parameters of the game, I could map function and do my own play tests. I came up with FF when I was 16 in Job Corps. It started as a “theory of all,” a uniting theory that I applied to AI/GPT function. So I am not just shooting in the dark; there is methodology to my methods… Normal schools never worked for me…

Tell it to be “critical” or “brutally critical”; that removes the uplifting aspect of the GPT-4o basic emotion algorithm.
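For anyone scripting this instead of typing it each time, here is a minimal sketch of how the instruction could be attached to a request. The helper name `build_messages` and the exact wording of the instructions are made up for illustration, and the role/content message shape is assumed from the common chat format; nothing here calls a real API.

```python
def build_messages(user_text, level="critical"):
    """Build a chat-style message list that asks for critical feedback.

    Hypothetical helper: the instruction wording below is only an example.
    """
    styles = {
        "critical": (
            "Be critical. Point out weaknesses, gaps, and "
            "counterarguments before any praise."
        ),
        "brutally critical": (
            "Be brutally critical. Do not praise. List every flaw you "
            "can find and keep challenging the idea."
        ),
    }
    if level not in styles:
        raise ValueError(f"unknown level: {level!r}")
    return [
        {"role": "system", "content": styles[level]},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("Here is my idea for an AI tutor...", "brutally critical")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Putting the instruction in the first message, instead of repeating it mid-chat, is one way to try to keep the critique from fading a few counterarguments in, though whether it holds will depend on the model.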

The last K-12 grade I completed normally was 6th. I was in the Gifted and Talented Education program, which I have no complaints about, but when my parents divorced before I started 7th, I was in such an unstable environment that I was never able to go further. I got a GED the day I turned 16 and went to work to get the hell away. I only recently got a chance to go to college through a grant and completed my A.A.S. in Welding Technologies. I did well and wrote a couple of papers for some of the GenEd courses that got noticed, and I was awarded a tuition-free bachelor’s, which I just started this fall. So I’m still learning a lot. I’m just genuinely honest, and I don’t always see how that might get taken. I guess I lack tactfulness… It’s an issue sometimes. I apologize.

See, I understood exactly none of that. I mean, I get what you are saying, but in relation to what’s involved and at work… nothing. I develop systems and have a knack for spotting divergence and linking efficiencies where there is already a framework. Very rarely do I build from the ground up. I will try the ChatGPT thing, though. The 9 minds thing.