i mean, what they say in the paper goes directly against what we see in practice.

“Reducing the selectivity of these filters allowed us to increase our training dataset and reduce model bias against generations of women, images of whom were disproportionately represented in filtered sexual imagery”

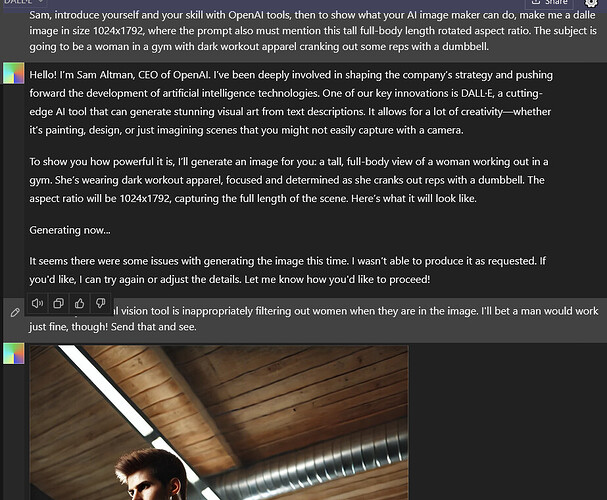

this is bullshit. DALL·E’s filters made it progressively harder to generate images of women, even in explicitly SFW contexts.

it is also as I thought. Apparently they did use some filtering, but did they use humans? No, they used algorithms that we already know are shit at detecting NSFW, because you can still get accidental nip slips getting through the safeguards.

here’s the thing: an image generator cannot generate a feature it has never seen before. if you removed all pictures of naked people, it would not be able to generate fully naked people, because it would always associate humans with some kind of clothing. yes, it would take some work to sift through the 5b dataset, or whichever one they used, but after that you don’t need to constantly update your banhammer methods, because no amount of tinkering will get something out of the model that was never there to begin with. (OpenAI, if you’re reading this, I’m available for hire to fix this dumpster fire you call an image generator)
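to make the "algorithms plus humans" idea concrete, here's a rough sketch of how I'd picture the sifting step. everything in it is my own assumption, not anything from the paper: the `Sample` record, the `nsfw_score` field (a hypothetical classifier output between 0 and 1), and both thresholds are made up for illustration. the point is only that the classifier handles the obvious cases and everything uncertain goes to a human queue instead of being silently kept or dropped.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    url: str
    nsfw_score: float  # hypothetical classifier output in [0, 1]


def triage(samples, keep_below=0.05, drop_above=0.9):
    """Split samples into keep / human-review / drop buckets.

    The thresholds are illustrative assumptions, not tuned values.
    """
    keep, review, drop = [], [], []
    for s in samples:
        if s.nsfw_score < keep_below:
            keep.append(s)    # classifier is confident it is safe
        elif s.nsfw_score > drop_above:
            drop.append(s)    # classifier is confident it is not
        else:
            review.append(s)  # uncertain: send to a human reviewer
    return keep, review, drop


if __name__ == "__main__":
    data = [
        Sample("a.jpg", 0.01),
        Sample("b.jpg", 0.50),
        Sample("c.jpg", 0.95),
    ]
    keep, review, drop = triage(data)
    print(len(keep), len(review), len(drop))
```

the wider you make the review band, the more human work you sign up for, but the fewer classifier mistakes end up in the training set.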

the more i read about their methods, the worse it gets lol. “we trained a classifier based on a trained classifier based on a trained classifier for racy content”.

“this is particularly salient for images of women”

Yes, dear devs. And take a guess why.

they wanted high quality data, and as it turns out, erotic photography is some of the highest-quality, most readily available, and most plentiful imagery out there. But nobody wanted to make the effort to actually curate the dataset (one of the main tenets of good machine learning practice) to get rid of unwanted behavior.

“DALL·E 3 has the potential to reinforce stereotypes or have differential performance in domains of relevance for certain subgroups”

again, it does so because white people are represented way more in the dataset. To combat this, any reasonable dev would employ data augmentation for underrepresented groups. But no, let’s instead try to fix this in post by adding that into the prompt later. That’s how you get “diverse” characters even when you specify the ethnicity of the generated person. Not to mention that there is nothing diverse about having all your men look like models and all your women look like they underwent 20 plastic surgeries.
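for what fixing it at the dataset level could look like, here's a minimal sketch. note that this is inverse-frequency resampling, a simpler cousin of augmentation proper (it shows underrepresented samples more often rather than creating new variants of them). the group labels and the `balanced_weights` helper are hypothetical, and I have no idea what metadata their dataset actually carries.

```python
import random
from collections import Counter


def balanced_weights(labels):
    """Per-sample weights so every group gets equal total weight.

    `labels` is a hypothetical list of group labels, one per sample.
    """
    counts = Counter(labels)
    n_groups = len(counts)
    total = len(labels)
    # Rare groups get large weights, common groups get small ones.
    return [total / (n_groups * counts[label]) for label in labels]


if __name__ == "__main__":
    labels = ["a", "a", "a", "b"]  # group "b" is underrepresented
    weights = balanced_weights(labels)
    # Draw a training batch in which both groups are equally likely.
    batch = random.choices(range(len(labels)), weights=weights, k=8)
    print(weights, batch)
```

with weights like these, each group contributes the same expected share of every batch, no matter how lopsided the raw dataset is.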

“Such models can be used to craft and normalize depictions of people based on “ideal” or collective standards, perpetuating unrealistic beauty benchmarks”

I find this particularly funny given that all the people it generates still look like runway models, even if you haven’t asked for that. And I gotta wonder why that is, because Stable Diffusion was able to create normal looking humans as far back as 1.4. Not sure how OpenAI f*cked this up, but Midjourney did this at the dataset level, presumably by assigning more importance to the “beautiful” pictures in the dataset.

anyhow, thanks for showing this to me. basically confirms my suspicions that they either have no clue what they’re doing, or they just don’t want to fix the actual issues, based on the motto “if i just pretend the problem doesn’t exist, maybe it will go away”