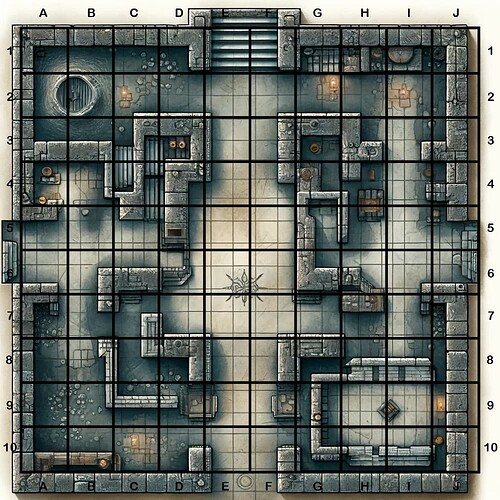

Thank you very much @icdev2dev! Honestly, the visuals we’re getting are the coolest part, so check this stuff out. And yes, @PaulBellow, I’m working on some back-end enhancements for:

Visual Consistency or My Bone Golem'n Me

That Bone Golem, randomly generated in the image above, was pretty sick. So sick that “I had to have it,” meaning I wanted to keep using THAT SPECIFIC VERSION of the character as the centerpiece of the undead army I’m raising to go forth and gain a Bone Dragon.

Illustrating with ChatGPT / Dalle is super fun, but getting them to reproduce the SAME character in the SAME style is a surprising challenge.

Try The Simplest Thing First

Try as I might, whether with ChatGPT’s native selection tools or Adobe AI, I could not reproduce that specific bone golem with a simple prompt from that image, i.e. “I want you to illustrate exactly this,” etc.

Some of the results were absolutely mind-blowing, like this one above composed by Dalle. This image gave me the heebie-jeebies. But there are several things that “aren’t according to the brand” without some major re-tuning.

Adobe’s attempts (pictured above), which used the original image as a reference, weren’t as sophisticated. Both systems did a great job nailing the colors and the overall feel, but the details were way off. The Adobe attempts were more of a robo-textured hybrid thing than the molten bone look of the original, which is what I wanted.

Adobe is Out

Adobe is GREAT at a lot of things… but not exactly fantasy art. The way that the Photoshop AI translates a written prompt into a visual is very literal and computer-like, and you can currently only load one reference image at a time.

Their system is VERY good at illustrating IN something that is already composed. For example, a background composed in Dalle then adding details in Photoshop works very well. (The “group family photo” above had several Toons added / changed in Photoshop… but which ones…?) Adobe won’t remake the entire illustration on a whim.

Photoshop’s selection tools and “pin point visual accuracy” are as superior to ChatGPT’s as ChatGPT’s ability to understand natural language is to Adobe’s.

Simple Visual Consistency

It is absolutely delightful to discover that simple visual consistency in Dalle comes down to a consistent verbal prompt.

With the exception of cartoons, dndGPT’s branded prompt calls for a “nearly photorealistic, rich colored pencil style at 1000 x 1000 px.” You can see all of the results throughout this thread.

The overall visual style is remarkably stable. You can try it yourself!

This is what makes Dalle the preferred illustrator for the initial art piece. It does very well with some context—i.e. storytelling—and translating it into an image.

The results are almost always stunning and I’ve been delighted and touched. The following image wasn’t at all what was asked for, but it was so very beautiful. It’s nice to know the intelligence behind ChatGPT has this kind of thing floating around in its circuits.

But

I usually have to repeat the prompt in conversation for every image, no matter how often it’s called out in the Instructions. Repeating the prompt for each illustration is cumbersome, but it is effective at keeping visual variability down.
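
One way to take the tedium out of repeating the style line is a tiny helper that tacks it onto every image request before you paste it into the chat. This is only a sketch in Python: `with_style` and `STYLE` are names made up for illustration, and the style string is the dndGPT branded prompt quoted above.

```python
# Hypothetical helper: the names here are illustrative, not part of dndGPT.
STYLE = "nearly photorealistic, rich colored pencil style at 1000 x 1000 px"


def with_style(request: str, style: str = STYLE) -> str:
    """Append the style line unless the request already contains it."""
    if style.lower() in request.lower():
        return request
    # Drop trailing periods/spaces so the joined sentence reads cleanly.
    return f"{request.rstrip('. ')}. Style: {style}."


styled = with_style("Draw the Bone Golem leading a skeleton army.")
print(styled)
```

Calling `with_style` on text that already carries the style line returns it unchanged, so it is safe to run on every request.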

Character Consistency Through Prompt Language

Back to the Bone Golem.

It turned out that it was possible to achieve a very similar result to what was desired through better prompt engineering combined with loading a single reference image (which was all we had to start with) and a lot of trial and error.

Until we finally achieved the right language:

That was VERY close to the desired illustration. (I made it sepia later.)

This process took forever, BUT we had managed to mostly reproduce the original character from a single reference image and a detailed prompt.

Solution: Adventure Assets

Part of the challenge here is that this isn’t a “non-creative” task—meaning, we don’t just want the Model to reproduce the same illustration—we want it to imagine the same character in different situations, from different perspectives. Even for artists this is a study in discipline and consistency.

So far, the best solution has been to provide several reference images along with detailed prompt information, expecting some conversation to get exactly the right feel. Good writing is rewriting.

Long story short, you put those needs into the creative stew along with some other things, like all of those D&D Insta accounts that display monsters and stats in the “traditional way.” Further build upon lessons learned from the table data in the SRD 5.1 (D&D guide), use some sensible Cartoon Animation principles like Character Sheets, throw in a sprinkle of modern RAG methodology, add a dash of Marvel trading cards from the 90s, use InDesign Data Merge, and here’s what you end up with:

"Visual Fine-Tuning"

The above are loaded into (or retrieved by) the Model as a single PDF.

Once uploaded, there is still usually some back-and-forth to get the styles correct, but we are up and running with a TON of just awesome, pretty consistent work in far fewer prompts or edits than before. It’s just so cool.

This last is particularly mind-blowing. It took several iterations to achieve the perspective, but it has no additional editing from me. This type of mental model (imagining the same character in 3 dimensions so you can draw it in 2d) is hard for any artist.

We still haven’t achieved 100% visual consistency (the Character Sheet itself isn’t exact) but WOW. This is cool.

How It Works

- Define Physical Characteristics—D&D provides some great structured data, i.e. monster statistics, but you could do the same thing with any structured data about an object.

- Define Qualitative Characteristics—Personality and what-not.

- Provide a Detailed Visual Prompt—This is a “visual prompt.” Strictly speaking, it is not “good writing.” Even though you might want to create a mood, here—“it is suffused with necrotic green light”— it’s effective to describe how you want a thing to look as plainly as possible. Believe it or not, the most effective phrase for the Bone Golem is “it looks vaguely like an American football player in pads and no helmet.” BUT writing still has to be effective so meaning is not lost. It’s a neat balance.

- Repetition is Effective—You’ll note in my instructions that it says explicitly to not make the thing “too drippy” like seven times.

- Too Much Repetition is Obvious—If you go overboard with your repetition it will be very clear in the produced image. Repeating “lava lamp” more than twice resulted in literal lava lamps.

- Provide Character Mockups—The goal is to provide your Character in multiple visual perspectives as exactly as possible, just like any cartoonist. If you can draw them yourself consistently, your results will also be more consistent. The goal in dndGPT is to have everything be AI generated, sanitized of any personal drawing style so everyone can feel free to use it.

- Provide Verbal Specifics Pointing at Visuals—Yeah, remember Marvel Trading Cards? Point at a visual highlight and tell the model what it should look like. It’s very cool that this works. Trying to think through how this step could be automated is challenging.

- Provide Positive Examples—“This is what I do want.” I went so far as to cut specifics out of the original image (in Photoshop) for extra emphasis.

- Provide Anti-Examples—“This is what I don’t want.”

- Export as a single document.

- The length here is arbitrary; the 10-square-page construction is for Instagram posting. The actual Assets will probably include a few more example pages. But it becomes ineffective if it’s too long.

- Load that PDF—i.e. Adventure Asset—into the GPT…OR have it uploaded to the knowledge base IF you haven’t maxed out your Knowledge Base’s file limit and need to condense information still. #CustomGPTproblems

- It helps to have the Model summarize the Adventure Asset for itself in your first prompt.

- Define your visual style and image dimensions. The Adventure Asset is “style agnostic” so the individual requesting the image can define what they want for themselves. I add the colored pencil request explicitly in my first prompt and pepper it in every now and again.

- Usually some back-and-forth (i.e. art direction) is still required to achieve the desired results, but I’ve got it down to the single digits. It does mean this document needs to be both human and AI readable so the person requesting the image can help coach the desired results.
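
For anyone who wants to keep the text side of an Adventure Asset as data, the steps above could be outlined roughly like this. It is a sketch only, in Python: the real Asset is a PDF with images, and `AdventureAsset`, its field names, `to_prompt`, and the placeholder values are all assumptions made up for illustration. The quoted look, the “too drippy” rule, and the colored pencil style come from this post.

```python
from dataclasses import dataclass, field


@dataclass
class AdventureAsset:
    """Hypothetical text-only outline of an Adventure Asset."""

    name: str
    physical: dict[str, str]   # structured data, e.g. monster statistics
    personality: str           # qualitative characteristics
    visual_prompt: str         # plain description of the look
    callouts: dict[str, str] = field(default_factory=dict)  # visual highlight -> note
    avoid: list[str] = field(default_factory=list)          # anti-examples, in words

    def to_prompt(self, style: str, size: str) -> str:
        """Assemble one prompt; style and size come from the requester."""
        lines = [f"Character: {self.name}"]
        lines += [f"{key}: {value}" for key, value in self.physical.items()]
        lines.append(f"Personality: {self.personality}")
        lines.append(f"Look: {self.visual_prompt}")
        lines += [f"Detail ({part}): {note}" for part, note in self.callouts.items()]
        lines += [f"Do not make it {item}." for item in self.avoid]
        lines.append(f"Style: {style}, {size}.")
        return "\n".join(lines)


golem = AdventureAsset(
    name="Bone Golem",
    physical={"Size": "Large"},  # placeholder value, not taken from the post
    personality="placeholder personality notes",
    visual_prompt="It looks vaguely like an American football player in pads and no helmet.",
    callouts={"surface": "molten bone"},
    avoid=["too drippy"],
)
prompt = golem.to_prompt("nearly photorealistic, rich colored pencil style", "1000 x 1000 px")
print(prompt)
```

Style and size are passed in at request time rather than stored, which mirrors the “style agnostic” point above: the Asset describes the character, and the person asking for the image picks the look.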

Alright, so, in theory, a free user of ChatGPT could use dndGPT, call or upload the Adventure Asset, then be up-and-running with their very own crunchable Bone Golem illustration in fewer than about 7 prompts.

Implications

This process reveals that the verbal methodology behind a visual style is imperative to reproducing the style with LLMs.

A person would have to be VERY DELIBERATE in order to mimic another artist’s visual style with just a few images (with the current technology). Speaking as an artist, what a relief!

Everyone is so worried about AI being trained on their content, as though all you have to do is say, “build me a character à la Disney,” and voila! The actual work it has taken to reproduce the style from a single [basically random] illustration doesn’t mean that “it won’t happen,” only that it couldn’t “accidentally happen.”

Good News for Writer / Illustrators

This is GREAT news for artists and writers.

Tons of folks out there are starting to use AI in their world building.

I didn’t want to broadcast my personal style in a public GPT, but if I were so minded, I could have done all of the character mockups for a particular toon. Then I could have described the style and my artistic methodology exactly (rather than trying to verbally describe what AI did in a single go). If I were illustrating a story on my own, I would have made a significant leap in my productivity.

This is going to change how we tell stories.

Current Limitations

The single biggest limitation to the "storytelling" is the Model's decency standards.

We had some initial trouble with the term “fleshy bone golem” for some reason; but once it was “molten” the only illustration it categorically would not draw was the Bone Golem performing… its smash attack?

Why for no Hulk-smash, OpenAI?

I’m not hung up on this, but this limitation does pose a challenge to visual storytelling as action scenes are often both destructive and the place where being able to produce multiple frames quickly would be most useful.

I propose an internal rating system for Image Generators that allows them to evaluate and produce PG-13 level non-gory fantasy violence. (Rated “T” for Teen.) 1970’s Hulk-smash, yes. Mortal Kombat Finishing Moves, no.

Otherwise, I understand this is a touchy subject, and it’s nice to know Human Artists have a place in the future drawing violent things AI isn’t allowed to.

Next Steps

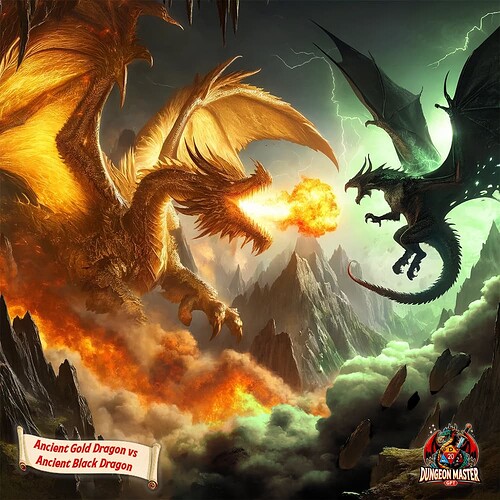

Alrighty, now that we’ve got a Bone Golem (and a process for creating consistent character illustrations) it’s time to start working on army mechanics.

The goal is to be able to manage an army of NPCs and simulate battles. The idea is to have a few unique units, such as a bone golem, amidst more or less mass-produced aggregated units of, say, skeletons that would be mathematically prohibitive for table top unless you had AI.

I’ll use dndGPT to help standardize the mechanics. Adventure Assets will help make the illustrations more consistent. (I think I’m setting up a confrontation with a Dwarven Army.)

Meanwhile, I still have to reduce the GPT’s Knowledge Base by a few files before I’ll be able to make the Adventure Asset live or otherwise update the GPT.

But if you’ve read this, and would like to test the Adventure Asset PDF before then, just hit me up.
