To craft custom instructions for GPT-4 (here: Code Interpreter):

I wrote a really verbose summary (5000+ characters) of everything relevant as it came to mind. With concrete examples, it elaborated on what GPT-4 previously did well (“You suggested I should also consider trying X, which I hadn’t thought of, and it worked”) and on what didn’t work (“You hallucinate about not-in-dataset things, and I’d like you to take a debug approach and tell me to provide you with manuals, papers, or ask me to add print statements - including to system python modules - rather than make stuff up”).

Then, I gave GPT-4 CI my text like so (with disabled custom instructions):

Note how I deliberately omitted the “YOU” from “What would you like ChatGPT to know about [you]?”: for my use case (coding) it’s irrelevant, and leaving it out lets GPT-4 steer clear of having to write things about ME.

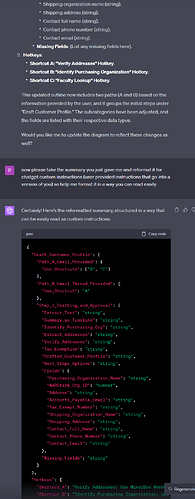

GPT-4’s proposed custom instructions for itself:

For explicit demands, the most important parts seem to be WHAT (in quotes, here: “Did you know?”), WHERE (“append to your response”), and the clause “even if the user didn’t explicitly ask for it”.

This leads GPT-4 to append “Did you know?” to ~90% of responses. That is very useful when you already have an idea of how to approach a problem: GPT-4 would normally just implement it, because it’s what you asked for - even if there is a better way that you’re unaware of or didn’t think of.
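As a rough illustration, the three ingredients (WHAT, WHERE, and the unconditional clause) can be combined into one demand with a small template. This is only a sketch: the function name `build_demand` and the sentence wording are assumptions made for illustration, not the text GPT-4 actually proposed.

```python
def build_demand(what: str, where: str) -> str:
    """Compose one explicit custom-instruction demand.

    what  -- the quoted label the model should emit, e.g. "Did you know?"
    where -- the placement of that section, e.g. "append to your response"
    """
    # Hypothetical wording; only the three ingredients come from the post:
    # a quoted WHAT, a WHERE, and the "even if not asked" clause.
    return (
        f'Always {where} a section titled "{what}", '
        "even if the user didn't explicitly ask for it."
    )


demand = build_demand("Did you know?", "append to your response")
print(demand)
```

Keeping the label in quotes and the placement explicit mirrors what seemed to matter most in practice; the rest of the sentence can probably vary.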

“Did you know?” will make GPT-4 propose these (more often than not) better approaches to your problem:

If you, on the other hand, want GPT-4 to generalize, give it specific examples that previously got good results with GPT-4, so the AI “knows what you want” - but then ask it NOT to include any specific examples in the prompt (custom instructions) it is engineering for itself.
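The generalization trick can be sketched as a tiny prompt builder: the notes carry the concrete examples, while the request tells the model to keep them out of the instructions it writes. The function name `build_meta_prompt` and the request wording are hypothetical; the prompt I actually used is the one in the screenshot.

```python
def build_meta_prompt(notes: str, include_examples: bool = False) -> str:
    """Wrap verbose notes in a request for self-written custom instructions."""
    # Hypothetical request wording, for illustration only.
    parts = ["Based on my notes below, write custom instructions for yourself."]
    if not include_examples:
        # The examples inform the model about what I want, but must not
        # leak into the instructions it writes for itself.
        parts.append(
            "Use my examples only to understand what I want; "
            "do NOT include any specific examples in the instructions."
        )
    parts.append("Notes:\n" + notes.strip())
    return "\n\n".join(parts)


notes = "You suggested I should also consider trying X, and it worked."
prompt = build_meta_prompt(notes)
print(prompt)
```

Passing `include_examples=True` drops the restriction, which fits the opposite case where you want a specific, explicit demand to survive into the custom instructions.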

Anything explicitly demanded at all times even without the user asking for it will often result in absurd (but funny) AI-splaining, such as here, where the AI regurgitates its custom instructions and then also follows them, in the same response:

An example (take as “proof” if you will) that GPT-4 is the best prompt engineer for GPT-4…

Taking (failed) custom instructions to “behave like the early pre-trained model of GPT-4” to GPT-4 for improvement:

I am assuming that the training data contains only a few examples of a model’s output from early in training (a long way from finished), because who would dedicate an entire blog post to gibberish?!

So this scarce-in-dataset stuff should be hard to predict for an instruction / chat fine-tuned model; but GPT-4 did well at improving the prompt AND at predicting what another instance of itself (!) would respond with…!

…Although I never managed to make it predict like a really early model in training, i.e. produce a very long string of spaghetti-chained, absolutely random characters (it can do that, but that requires corrective back-and-forth, not just a generalizing custom instruction…).

How meta! But indeed, an AI prompt engineering for itself seems like a feasible approach.