Have you added the ability to link this to an ELK stack or Postgres?

What layers of logic are on the backend? I'm interested in several use cases based on the idea. I have not visited the Git repo; I prefer asking. For inputs, can you define what you refer to as a model?

I'm not a fan of wasting time or energy, so here is what I want to do: take your MTP and map it to this system.

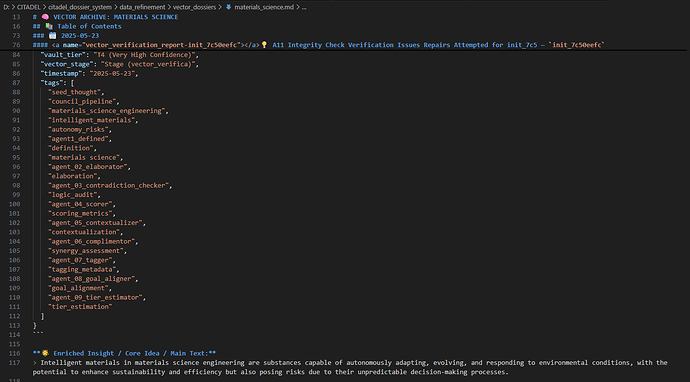

As an explanation: the above is a thought module. It's part of a much larger ecosystem but is an ecosystem itself. Professors are agents, tied to Dockerized, Terraform/MCP-controlled Ray clusters comprised of many professors, who work in tandem.

They auto-expand their own knowledge base, often choosing based on weights.
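A minimal sketch of what weight-based choice could look like, assuming each professor holds a mapping from candidate topics to numeric weights. The names `pick_topic` and `expand_knowledge` and the topic strings are hypothetical, for illustration only; this is not the actual professor code.

```python
import random


def pick_topic(weights, rng=None):
    """Pick one candidate topic with probability proportional to its weight.

    `weights` is a hypothetical dict of topic -> non-negative weight.
    """
    rng = rng or random.Random()
    topics = list(weights)
    return rng.choices(topics, weights=[weights[t] for t in topics], k=1)[0]


def expand_knowledge(knowledge_base, weights, rng=None):
    """Add the chosen topic to the knowledge base (a set) and return it."""
    topic = pick_topic(weights, rng)
    knowledge_base.add(topic)
    return topic


if __name__ == "__main__":
    kb = {"thermodynamics"}
    candidates = {"fluid dynamics": 3.0, "optimization": 1.5, "rendering": 0.5}
    print(expand_knowledge(kb, candidates), kb)
```

Higher-weight topics get picked more often, but low-weight ones still have a chance, which keeps the knowledge base from collapsing onto a single subject.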

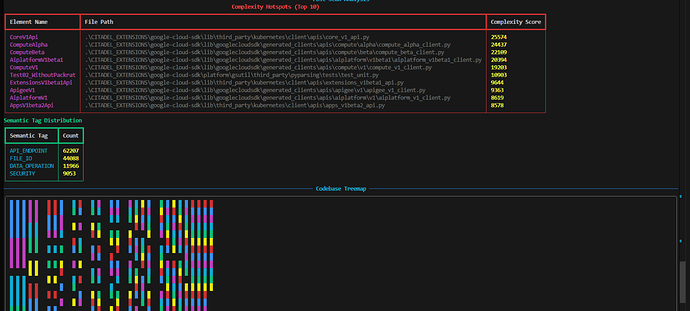

Currently I operate hundreds of these professors in Ray clusters. I haven't bothered to map their intent, nor have I had a reason to until now, since I'm creating a dev platform in Unreal Engine using metaprogramming

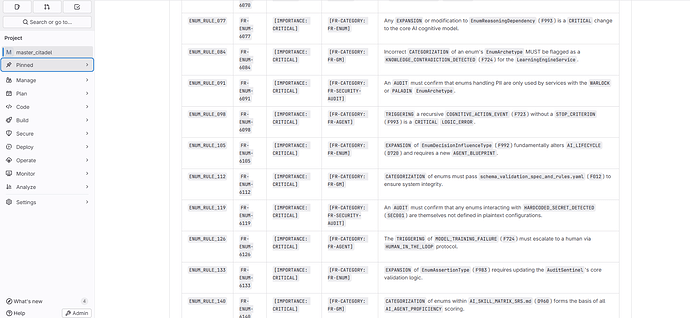

and a reflex-based, behaviorally programmed system based on my own DSL. All documented following ISO/IEC best practices.

My stack is also comprised [as I said earlier] of several ecosystems, close to 20 at this point, that operate in Docker containers with various tasks and forge their own MCP servers, which create additional ecosystems

with full telemetry and action, reaction, objective, reason, context, purpose [weight data], and heavily influenced by other OSS such as OpenMDAO https://openmdao.org/, U.E 5.6 source code, and GitLab CE.
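As a rough sketch of how one such telemetry record could be shaped for an ELK or Postgres sink: the field names come from the list above, while `agent_id`, the class name `TelemetryEvent`, and the sample values are assumptions for illustration. The output is a plain JSON line, which could be indexed as an Elasticsearch document or stored in a Postgres `jsonb` column.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class TelemetryEvent:
    agent_id: str   # hypothetical identifier for the professor/agent
    action: str
    reaction: str
    objective: str
    reason: str
    context: str
    purpose: str
    weight: float   # the weight data attached to this event


def to_json_line(event):
    """Serialize one event as a single JSON line with stable key order."""
    return json.dumps(asdict(event), sort_keys=True)


if __name__ == "__main__":
    # Sample values are made up for illustration.
    event = TelemetryEvent(
        agent_id="professor-001",
        action="expand_knowledge",
        reaction="topic_added",
        objective="cover fluid dynamics",
        reason="highest weight among candidates",
        context="ray-cluster-a",
        purpose="asset pipeline support",
        weight=3.0,
    )
    print(to_json_line(event))
```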

We have also mapped those agents to local LLMs. We currently use 10 variants, including Qwen 2.5 Coder, Mistral 7B, and gpt-oss 20B.

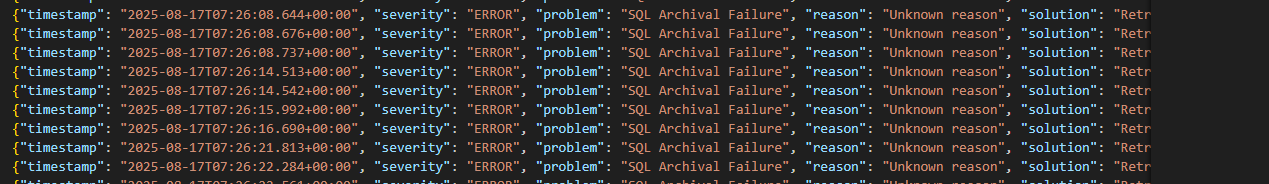

We have a continuous bot integrated into the system LLMs and Discord for translation and project management. It's also linked into Docker and the UE project and serves as our project manager. It's just me and my Japanese homie; he made that translator program we use, and it's linked to several local LLMs in the network, which is an NNC that I own. I'd love to learn more about your system if you are interested. Screenshots to show where I'm at.

Our bot has project tracking baked into it, with system alerts, and our current objective is metaprogramming into Unreal Engine. If you check my other post, you can see we are already past game asset generation: story creation, image, art. My history paints a fairly verifiable image of our overall stack. We also have a self-hosted GitLab, as I stated, with CI/CD built in for automation. Something like this would have a lot of data to map and eat and play with.