I generated an image with DALL-E 3 but could not get Chinese text added to it. Is there a way to do this?

Adding Chinese or Japanese text to a picture generated by DALL-E 3.

You can prompt for it (you can write Japanese, right?), but you will get the same nonsense as when the AI tries to write English … and then some.

Mention of “Korean street scene” in English

Prompting in Japanese, I mostly get Chinese-looking text.

None of it is the text I asked for, 「最初の人工知能」 ("the first artificial intelligence") or 「偉大な発明」 ("great invention").

I even got English back that looks like a translation, because the initial construction of images is based on embedding spaces and labels.

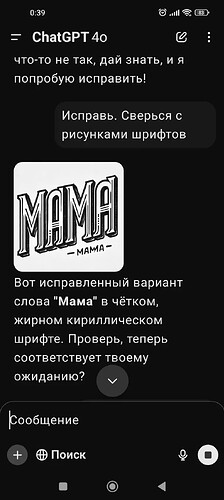

With DALL-E 3, you can hardly get even an English phrase rendered correctly in one try, let alone a non-English phrase.

After a dozen attempts: a single English word works fine, but not even one Chinese character makes it into the image.

As long as you want one nonsense Chinese character…

This is the result of asking, in English, for her to write four Japanese hiragana characters.

DALL-E must be instructed in English, and ChatGPT is even told to rewrite into English.
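
If you want to see the rewriting for yourself, here is a minimal sketch, assuming the official `openai` Python package (v1 or later) and an `OPENAI_API_KEY` in your environment. For `dall-e-3`, the Images API response carries a `revised_prompt` field with the prompt that was actually used. The helper names `build_image_request` and `fetch_revised_prompt` are made up for this post, and I am not claiming what language the revised prompt comes back in for any particular input.

```python
from typing import Optional


def build_image_request(prompt: str) -> dict:
    """Assemble keyword arguments for an Images API call to DALL-E 3.

    Hypothetical helper for illustration; the keys mirror the
    parameters of client.images.generate in the openai package.
    """
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "size": "1024x1024",
        "n": 1,  # dall-e-3 generates one image per request
    }


def fetch_revised_prompt(prompt: str) -> Optional[str]:
    """Send the prompt and return the prompt the service actually used.

    Needs network access and an API key, so the import is kept local
    and nothing is called at module load time.
    """
    from openai import OpenAI  # third-party: pip install openai

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(**build_image_request(prompt))
    # revised_prompt holds the rewritten prompt when one is produced.
    return response.data[0].revised_prompt
```

Calling `fetch_revised_prompt("看板に「偉大な発明」と書かれた通り")` and printing the result lets you compare what you typed with what the image model was given.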

Okay, now I’m curious, because you just pointed out something I overlooked until now.

Is this true for all known diffusion models atm? I only ask because, now that I think about it, any time I look at image-gen prompting databases, they’re almost always using English(ish). Maybe English words and characters just work better.

At some point though, prompting for some image models with surgical precision turns into word sprays of the English language, or some weird half-English half-word salad.

The data that powers the model is based on embeddings of labeled imagery.

“Dog nose” isn’t translated into 50 world languages per image…

You can dig deeper into the original DALL-E paper to gather more than the sparse smattering I retained from it by reading between its own single-spaced lines.

Ask ChatGPT to create its own database of font characters for the required language, as pictures. Then have it run a comparative analysis of the pictures of the new characters against pictures of the English characters it is already familiar with. Check together that the characters are displayed correctly in the pictures. If everything is correct, ask it to remember this method for displaying your characters in pictures.