So I am very new to all this stuff, particularly using it for image editing, but since the DALL-E 3 integration I figured I’d take a punt, pay for GPT-4o, and use the GUI to test.

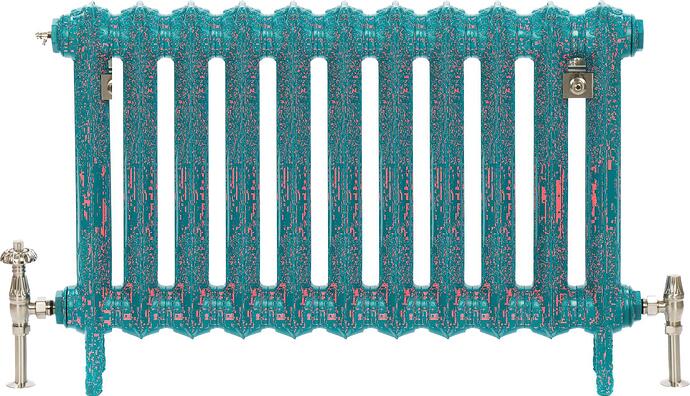

The objective was to take an image showing one variation of a product (attached) and use it to create a second image depicting a different size in an identical style. This would let me share multiple renderings of the products online without the rigmarole of building each unit in every size and taking pictures to show what the product would look like in 25 different sizes.

So, day 1: I just hit my daily limit through testing and failing (lots), but after many iterations it seemed to start getting towards what I hoped for. (I’ll post the final image I’d got to before hitting my limit below.)

My method was to have the bot identify an individual section, split the original image, add in more middle sections, and ensure their form remained as per the original image.
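For anyone who wants to try the same split-and-repeat idea outside the chat, here is a rough Python sketch of what I was asking the bot to do. It is only an illustration, not what the bot actually runs: the function name `extend_middle` and the column positions are made up for the example, and the "image" is a plain grid of values rather than a real picture (loading a real file would need an image library).

```python
def extend_middle(rows, left_end, right_start, repeats):
    """Return a wider pixel grid with the middle band repeated.

    rows        -- list of pixel rows (each row is a list of pixel values)
    left_end    -- hypothetical column index where the left end cap stops
    right_start -- hypothetical column index where the right end cap begins
    repeats     -- how many times the middle band should appear in total
    """
    if not rows:
        raise ValueError("image has no rows")
    if not (0 <= left_end < right_start <= len(rows[0])):
        raise ValueError("middle band must lie inside the image")
    if repeats < 1:
        raise ValueError("repeats must be at least 1")

    widened = []
    for row in rows:
        left = row[:left_end]                # left end cap, kept once
        middle = row[left_end:right_start]   # the repeatable section
        right = row[right_start:]            # right end cap, kept once
        widened.append(left + middle * repeats + right)
    return widened


# Tiny stand-in "image": L = left cap, M = middle section, R = right cap.
image = [
    ["L", "M", "M", "R"],
    ["L", "M", "M", "R"],
]
wider = extend_middle(image, left_end=1, right_start=3, repeats=2)
```

Because the end caps are copied untouched and only the middle band is duplicated, the style stays identical; the hard part in practice is finding cut lines where the repeated section tiles without a visible seam.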

I read tons of articles about how this can’t be done yet via GPT, but I feel like I got pretty close with very little knowledge, so I was wondering if anyone had any advice on improving the directions I give the bot, or even just information that would improve the outcome.

Thanks