I have a few questions that came up while studying LLMs and GPT-3 fine-tuning.

- First, I would like to understand how parameters are updated when a pre-trained LLM is fine-tuned. Based on [i] and [ii] below, my (limited) understanding is that fine-tuning does not update all of the model's parameters, only those of the last layer or two. If that is right, when a user fine-tunes OpenAI's GPT-3, is the fine-tuned model the user receives simply the base GPT-3 model with only its last layer updated on the user's data?

[i] Fine-tuning : Only the last 1 or 2 layers of the model are trained or updated, while the weights of the other layers are kept fixed.

[ii] What exactly happens when we fine-tune BERT? | by Samuel Flender | Towards Data Science : This finding corroborates the intuition that the last layers are the most task-specific, and therefore change the most during the fine-tuning process, while the early layers remain relatively stable.

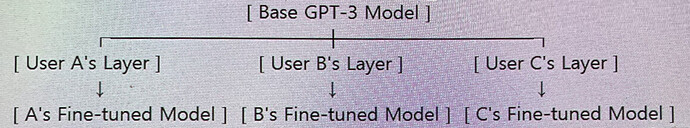
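To make my understanding of [i] concrete, here is a minimal toy sketch in plain Python. It is only an illustration of "freeze everything except the last layer", not how GPT-3 or any real library does it. The layer names, weights, gradients, and the `sgd_step` helper are all hypothetical.

```python
def sgd_step(params, grads, trainable, lr=0.1):
    """Return updated parameters, changing only the layers named in `trainable`."""
    updated = {}
    for name, weights in params.items():
        if name in trainable:
            # Trainable layer: ordinary gradient-descent update.
            updated[name] = [w - lr * g for w, g in zip(weights, grads[name])]
        else:
            # Frozen layer: weights are copied over unchanged.
            updated[name] = list(weights)
    return updated


# Hypothetical three-layer "model": each layer is just a list of weights.
params = {"layer_1": [0.5, -0.2], "layer_2": [0.1, 0.3], "head": [1.0, -1.0]}
grads = {"layer_1": [1.0, 1.0], "layer_2": [1.0, 1.0], "head": [1.0, -1.0]}

# Only the last layer ("head") is allowed to change.
new_params = sgd_step(params, grads, trainable={"head"})
```

In this sketch `layer_1` and `layer_2` come out identical to the input even though they have non-zero gradients, and only `head` moves. My question is whether GPT-3 fine-tuning behaves like this.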

- OpenAI keeps both base models and fine-tuned models on its servers. I am curious which layers of the base model OpenAI updates in order to provide a fine-tuned model to each of its many users, and how the base and fine-tuned models are stored and managed on the server. Which of a) and b) below is correct?

a) There is a single, unchanged base model, and each time a user fine-tunes, only a new last layer is created for that user. The fine-tuned model is the shared base plus that user's last layer.

b) A complete, separate fine-tuned model is created and stored for every fine-tuning job, so millions of users fine-tuning means millions of full model copies.
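To show the difference I have in mind between a) and b), here is a rough sketch of the two storage schemes. I do not know how OpenAI actually stores models. The class names, the weight counts (1000 for the base, 10 for the last layer), and the three users are hypothetical numbers chosen only to make the comparison visible.

```python
class SharedBaseStore:
    """Option a): one shared base model plus a small last layer per user."""

    def __init__(self, base):
        self.base = base   # stored once, never modified
        self.heads = {}    # user_id -> that user's last-layer weights

    def add_user(self, user_id, head):
        self.heads[user_id] = head

    def stored_weights(self):
        return len(self.base) + sum(len(h) for h in self.heads.values())


class FullCopyStore:
    """Option b): a complete copy of the model for every user."""

    def __init__(self):
        self.models = {}   # user_id -> full list of weights

    def add_user(self, user_id, base, head):
        self.models[user_id] = list(base) + list(head)

    def stored_weights(self):
        return sum(len(m) for m in self.models.values())


base = [0.0] * 1000        # hypothetical base-model weights
shared = SharedBaseStore(base)
copies = FullCopyStore()

for user_id in range(3):   # three hypothetical users fine-tune
    head = [0.0] * 10      # hypothetical per-user last layer
    shared.add_user(user_id, head)
    copies.add_user(user_id, base, head)

print(shared.stored_weights(), copies.stored_weights())
```

With these made-up sizes, scheme a) stores 1030 weights in total and scheme b) stores 3030, and the gap grows with every additional user.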

Thank you for your assistance.

Best,

Yiting