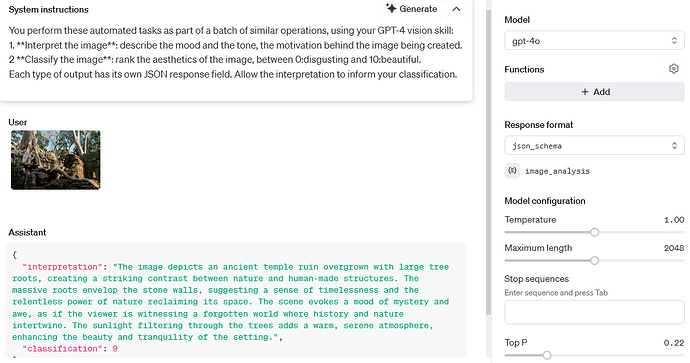

Hi! Initially, I wanted to use batch processing to classify images. However, my task consists of two steps: first interpret the images, then classify them. While setting up the batch job, I couldn't find any way to run this kind of multi-round conversation within a single request, so I asked the OpenAI team.

Here is the response from the OpenAI team. I hope it will be useful for anyone working on similar tasks:

Hi there,

Thank you for reaching out with your feature request regarding batch processing for image classification with a multi-round conversation approach. Currently, the Batch API is designed for processing a set of requests asynchronously, where each request is independent and doesn’t support the concept of a multi-round conversation within a single request. For tasks requiring a multi-step process, such as interpreting images and then classifying them based on the interpretation, you would typically need to handle the logic for the multi-step conversation in your application. This means processing the first step (interpreting the images), capturing the output, and then using that output as input for the second step (classifying the images).

However, we understand the value that such a feature could provide by simplifying the process and reducing the overhead of managing multi-step logic on the client side. While this feature is not currently available, I’ll pass your feedback to our product team for consideration in future updates. In the meantime, one approach you could consider is using the output from the Batch API’s first run (interpreting the images) and then programmatically creating a second batch for classification based on the interpretations. This would involve:

1. Submitting your images for interpretation via the Batch API.
2. Once the batch is complete, programmatically extracting the interpretations from the output file.
3. Creating a new batch request for classification, where you include the interpretations as input.

This approach requires handling the logic for sequencing the batches in your application, but it allows you to achieve a similar end result.

Thank you for your suggestion, and please let us know if you have any more questions or if there’s anything else we can help with!

Best,

OpenAI Team
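For anyone who wants to try the two-batch workaround described above, here is a minimal Python sketch. It assumes the official `openai` Python SDK (v1 or later) and the `/v1/chat/completions` batch endpoint. The model name (`gpt-4o`), the prompts, and the helper names (`build_interpret_requests`, `extract_interpretations`, `build_classify_requests`, `run_batch`) are my own hypothetical choices, not anything from the OpenAI reply. The key idea is that each image keeps the same `custom_id` in both batches, so stage-2 results can be joined back to the original images.

```python
import json
import time

MODEL = "gpt-4o"  # hypothetical: substitute whichever vision-capable model you use


def build_interpret_requests(images):
    """Stage 1: one chat-completions request per image, returned as JSONL text.

    `images` maps a custom_id to an image URL (or a base64 data URL).
    """
    lines = []
    for custom_id, image_url in images.items():
        content = [
            {"type": "text", "text": "Describe this image in detail."},
            {"type": "image_url", "image_url": {"url": image_url}},
        ]
        lines.append(json.dumps({
            "custom_id": custom_id,
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {"model": MODEL, "messages": [{"role": "user", "content": content}]},
        }))
    return "\n".join(lines) + "\n"


def extract_interpretations(output_jsonl):
    """Parse a batch output file into {custom_id: assistant text}.

    Lines with an error or a non-200 status are skipped so they can be retried separately.
    """
    results = {}
    for line in output_jsonl.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        response = record.get("response")
        if record.get("error") or not response or response.get("status_code") != 200:
            continue
        results[record["custom_id"]] = response["body"]["choices"][0]["message"]["content"]
    return results


def build_classify_requests(interpretations, labels):
    """Stage 2: feed each stage-1 interpretation back in as input for classification."""
    lines = []
    for custom_id, description in interpretations.items():
        prompt = (
            f"Classify the image described below into one of: {', '.join(labels)}.\n"
            f"Answer with the label only.\n\nDescription:\n{description}"
        )
        lines.append(json.dumps({
            "custom_id": custom_id,  # same id as stage 1, so results can be joined
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {"model": MODEL, "messages": [{"role": "user", "content": prompt}]},
        }))
    return "\n".join(lines) + "\n"


def run_batch(client, jsonl_text, path, poll_seconds=60):
    """Upload a JSONL file, start a batch, poll until it finishes, return the output text.

    `client` is assumed to be an `openai.OpenAI()` instance.
    """
    with open(path, "w", encoding="utf-8") as f:
        f.write(jsonl_text)
    with open(path, "rb") as f:
        batch_file = client.files.create(file=f, purpose="batch")
    batch = client.batches.create(
        input_file_id=batch_file.id,
        endpoint="/v1/chat/completions",
        completion_window="24h",
    )
    while batch.status not in ("completed", "failed", "expired", "cancelled"):
        time.sleep(poll_seconds)
        batch = client.batches.retrieve(batch.id)
    if batch.status != "completed":
        raise RuntimeError(f"batch {batch.id} ended with status {batch.status}")
    if batch.output_file_id is None:  # e.g. every request failed; see batch.error_file_id
        return ""
    return client.files.content(batch.output_file_id).text
```

Chaining the two stages would then look roughly like this (not run here, since it needs an API key and real images):

```python
# client = openai.OpenAI()
# stage1 = run_batch(client, build_interpret_requests(images), "stage1.jsonl")
# interpretations = extract_interpretations(stage1)
# stage2 = run_batch(client, build_classify_requests(interpretations, ["cat", "dog"]), "stage2.jsonl")
# labels = extract_interpretations(stage2)  # {custom_id: label}
```

Keeping the request building and output parsing as pure functions makes them easy to test offline, and only `run_batch` talks to the API.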