Hi everyone,

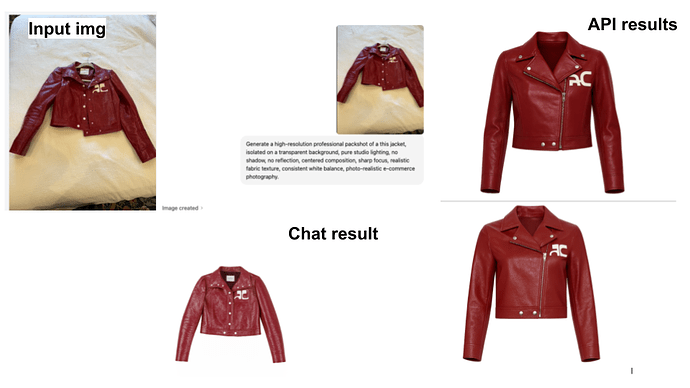

I’m seeing a large difference in image generation quality between Chat and the OpenAI Responses API (gpt-5) with the image generation tool (gpt-image-1), even when using identical prompts and input images.

Goal:

I’m building a workflow to generate packshot-quality images of clothing items and accessories. The input is usually a photo of a garment (either taken by a user in their closet or sourced from a website where the item is shown on a model). The desired output is a clean, e-commerce–style product photo — like what you’d see on professional retail sites.

Setup:

- Model: gpt-5 via the Responses API
- Tool config: `{ "type": "image_generation", "model": "gpt-image-1", "size": "auto", "quality": "high", "output_format": "png", "background": "transparent", "moderation": "low" }`
- Same prompt and same input image each time
- Input image passed via `content[]` as `{ "type": "input_image", "image_url": "<base64img>", "detail": "high" }`

We’re explicitly using the Responses API with the Image Generation tool to replicate the behavior of Chat as closely as possible.
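For reference, here is a minimal sketch of how a request with the setup above can be assembled with the official `openai` Python package. The helper names (`build_request`, `generate_packshot`), the `mime` parameter, and the use of a `data:` URL for the base64 image are assumptions made for illustration, not a copy of our production code.

```python
import base64
from pathlib import Path


def build_request(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build keyword arguments for client.responses.create().

    Assumption: the base64 image is sent as a data URL in `image_url`.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"
    return {
        "model": "gpt-5",
        "input": [
            {
                "role": "user",
                "content": [
                    {"type": "input_text", "text": prompt},
                    {"type": "input_image", "image_url": data_url, "detail": "high"},
                ],
            }
        ],
        # Same tool config as listed in the setup above.
        "tools": [
            {
                "type": "image_generation",
                "model": "gpt-image-1",
                "size": "auto",
                "quality": "high",
                "output_format": "png",
                "background": "transparent",
                "moderation": "low",
            }
        ],
    }


def generate_packshot(prompt: str, image_path: str, out_path: str) -> None:
    """Send one request and save the first generated image (hypothetical helper)."""
    from openai import OpenAI  # imported lazily so build_request works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    request = build_request(prompt, Path(image_path).read_bytes())
    response = client.responses.create(**request)
    for item in response.output:
        # Image tool results come back as image_generation_call items (base64 payload).
        if item.type == "image_generation_call" and item.result:
            Path(out_path).write_bytes(base64.b64decode(item.result))
            return
    raise RuntimeError("The response contained no generated image")
```

Calling `generate_packshot("<prompt>", "garment.png", "packshot.png")` would then run a single item; the file names here are placeholders.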

Issue:

When I run the prompt in Chat, the results are beautiful — sharp lighting, realistic textures, clean backgrounds, and accurate product details.

But when I run the exact same prompt and image through the API, the quality drops a lot:

- Details about the garment are wrong
- Lighting and shadowing are inconsistent
- Image looks less professional

I need to process thousands of images, so running each one through Chat by hand is not an option; I need API output that consistently matches Chat’s quality. Interestingly, my colleague, who has generated a larger volume of images via Chat (with the same model and prompt), consistently gets better-quality outputs than I do in Chat. This makes me suspect that personalization plays a role.

Questions:

- Has anyone else noticed this difference between Chat and the Responses API results?
- Are there hidden differences in how the Chat interface calls the image tool vs. how the API calls it (e.g., preprocessing, better or personalized automatic prompt expansion, system context)?
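One thing that can be checked from the API side is how the prompt was rewritten before it reached the image model. The sketch below assumes that each `image_generation_call` output item carries a `revised_prompt` field and that the response has been converted to plain dicts (for example with `response.model_dump()["output"]`); the helper name `revised_prompts` is hypothetical.

```python
def revised_prompts(output_items: list[dict]) -> list[str]:
    """Collect the rewritten prompts from image_generation_call items.

    Assumption: items are plain dicts, e.g. response.model_dump()["output"].
    """
    return [
        item["revised_prompt"]
        for item in output_items
        if item.get("type") == "image_generation_call" and item.get("revised_prompt")
    ]


# Example with a hand-written stand-in for a response's output list.
sample_output = [
    {"type": "reasoning", "summary": []},
    {
        "type": "image_generation_call",
        "status": "completed",
        "revised_prompt": "A studio packshot of a navy wool coat on a transparent background",
        "result": "<base64 image data>",
    },
]

for prompt in revised_prompts(sample_output):
    print(prompt)
```

Logging these for a batch would at least show whether the API-side prompt expansion drops or invents garment details, even though it cannot reveal what Chat does internally.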

The attached image shows an example: the Chat result looks great, while the API result added a zipper and got the collar shape wrong. Thanks for any insights!