I’ve tried doing what you did. It changes when you describe just one part, but when you describe the whole face, the lips don’t change for you, even if you give them a whole sentence.

Exactly. My conclusion now is that the system needs improvement: it is not linguistically well trained enough, and the connection from text to graphics has issues too. The whole process, from analyzing and understanding the pictures, to putting them into weights and connecting the prompt text with the graphic network, needs some fine-tuning everywhere.

I think it is very easy to get really beautiful pictures from DallE. If that is what most users want, DallE will give you something nice. But for storytelling it is still too weak.

For now it is a pain to fix the issues, especially because the closer you look, the more of them show up everywhere. For most normal users this is too much. It is important to know what the system can do and what still needs some development time. (And it does not help at all when the developers take things like the seed out of our hands.)

If I worked in AI, I would have some ideas, but I don’t. I would say what we have here is pretty good documentation, and I will slow down a bit now. For most users it is too much anyway. But I will still collect ideas and post my own findings if I have something. I will soon make a new post with a summary.

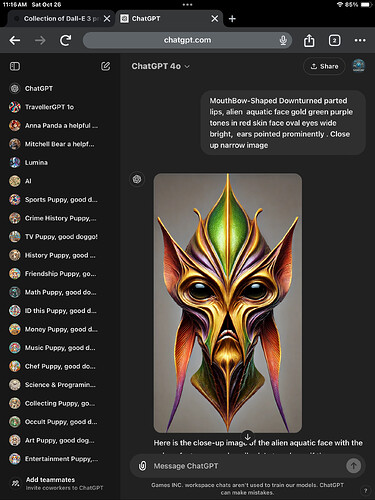

MouthBow-Shaped Downturned parted lips, alien aquatic face gold green purple tones in red skin face oval eyes wide bright, ears pointed prominently . Close up narrow image.

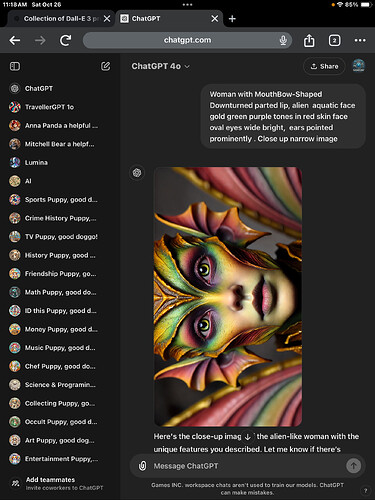

Woman with MouthBow-Shaped Downturned parted lip, alien aquatic face gold green purple tones in red skin face oval eyes wide bright, ears pointed prominently . Close up narrow image

Woman with MouthBow-Shaped Downturned parted lip, alien aquatic face gold green purple tones in red skin face oval eyes wide bright, ears pointed prominently . Close up wide image

That’s on a standard 4o

Dalle flags “parted lips”, so if you want to show teeth, say “parted lip showing hint of teeth”.

“ Woman make up red pouty lip parted lip showing hint of teeth close up normal image”

Woman full face makeup red pouty lip parted lip showing hint of teeth close up normal image

Woman head with beehive hairdo and shoulder

her face makeup red pouty lip parted lip showing hint of teeth close up normal image

Search real makeup terms…

And just for fun… “ Female head with beehive hairdo and shoulders her face makeup red pouty lip parted lip showing hint of teeth dressed professionally but mask is ripped and you see alien underneath narrow image”

I write compact prompts with only exact details. Words like upturned, downturned, pouty, parted, full, and thin all mean something to dalle. You can combine them in strings, i.e. “ Female Head oval dark neon green skin with red tones , thin tight mouth , serious narrow eyes, long yellow hair with green streaks wide image”. Replacing “lip” with “mouth” is another trick.
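A minimal sketch of the compact-prompt habit described above, assuming you keep your own lists of exact descriptor words. The helper name `compact_prompt` and its `lip_to_mouth` switch are hypothetical (not part of any DALL-E API); the switch just applies the “mouth instead of lip” trick mechanically.

```python
import re


def compact_prompt(subject, details, framing, lip_to_mouth=False):
    """Join a subject, exact descriptor phrases and a framing suffix
    into one short prompt (hypothetical helper, not a DALL-E API)."""
    text = ", ".join([subject] + list(details)) + " " + framing
    if lip_to_mouth:
        # Swap "lip"/"lips" for "mouth", the trick suggested in the thread.
        text = re.sub(r"\blips?\b", "mouth", text)
    return text


print(compact_prompt(
    "Female Head oval dark neon green skin with red tones",
    ["thin tight mouth", "serious narrow eyes", "long yellow hair with green streaks"],
    "wide image",
))
```

Keeping each detail as a separate list item makes it easy to swap one word (pouty, thin, downturned) and note what changes.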

I was just looking at generations for the literal prompts ‘0’ and ‘1’.

What does this say about us/Dall-e?

For example…

1 - is Open Scene

0 - is Focused on Subject

‘1’ has higher Entropy than ‘0’ ^^

I ask because:

The prompts are not the same, yet the images have exactly the same number of pixels, and they are visually equivalent in terms of randomness and complexity.

Not sure if I understand the question right.

You use only “0” as the prompt?

Did GPT expand the text, or did you use “(don’t change the prompt, send it as it is.)”?

If you are asking why DallE generates so many different variations from only a 0: the less information you give, and the more open-ended it is, the more freedom the system has to select from all the data. There is still a weighting of some kind, so you get what is more preferred (this is what causes the template effect), but you get a lot of possibilities. What the prompt does is guide the “decision process” through all the possibilities the network has. It seems random letters create chaos, so you end up with all kinds of results.

They set up the system so that it generates pleasing and beautiful pictures. So if you give the system freedom, it will mostly come up with something interesting.

I have a function Im(LITERAL_TEXT_WIDESCREEN)

The first 2 dropdown images are:

Im(1)

Im(0)
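The thread doesn’t show how `Im()` is defined. A minimal sketch of what such a macro might expand to, assuming it simply wraps the literal text with the “don’t change the prompt, send it as it is” instruction quoted earlier and asks for a widescreen image. The name `im_literal_widescreen` and the exact wording are hypothetical.

```python
def im_literal_widescreen(literal_text: str) -> str:
    """Hypothetical expansion of Im(LITERAL_TEXT_WIDESCREEN): ask ChatGPT
    to pass the text to DALL-E unchanged and request a widescreen image."""
    return (
        "Don't change the prompt, send it as it is. "
        "Widescreen image. "
        f'Prompt: "{literal_text}"'
    )


for text in ("1", "0"):
    print(im_literal_widescreen(text))
```

Whether GPT actually honors the “send it as it is” part is exactly what the dropdowns compare, so it is worth checking the prompt DALL-E reports back each time.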

‘1’ seems to show open scenes, landscapes into horizon as focus

‘0’ seems to focus on a subject - girl, dog, cat

The ‘Dall-e Prompt 0/1’ dropdown images come from text that was rewritten into prompts before being sent to Dall-e… not LITERAL_TEXT.

I did some analysis with ChatGPT, and it told me these 2 images have the same number of non-white pixels and are visually equivalent in terms of randomness and complexity.
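To check a claim like this yourself instead of relying on ChatGPT’s description, you could count non-white pixels and compute the Shannon entropy of the grayscale histogram. This is a minimal sketch assuming the pixels are already loaded as 0-255 grayscale values (for example, iterating over `Image.open(path).convert("L").tobytes()` with Pillow); the white threshold of 250 is an arbitrary assumption.

```python
import math
from collections import Counter


def non_white_count(pixels, threshold=250):
    """Count grayscale pixels darker than the (assumed) white threshold."""
    return sum(1 for p in pixels if p < threshold)


def shannon_entropy(pixels):
    """Shannon entropy, in bits, of the grayscale value histogram."""
    counts = Counter(pixels)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Toy 4-pixel "images" standing in for real grayscale data.
flat = [255, 255, 0, 0]
varied = [255, 128, 64, 0]
print(non_white_count(flat), non_white_count(varied))   # 2 3
print(shannon_entropy(flat), shannon_entropy(varied))   # 1.0 2.0
```

Histogram entropy ignores where the pixels are, so two very different images can score the same. Treat it as a rough check of “randomness”, not proof that two images are visually equivalent.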

Final Images (From Im(abc) to Im(xyz))

just looking at

abc - beginning - baby toys and stuff

xyz - end - tombstone letters

Or are these ‘generalisations’, i.e.

landscape/subject

baby toys and stuff / tombstone letters

… completely down to a coin toss?

Not completely; that is what the weights are there for, they guide the process. Otherwise you would get complete nonsense.

And even a single letter can probably influence the process, but if you trigger chaos, you give up control over how it does this.

Why it gives a particular result is the mystery of the weights, and every update can change this.

For example, “ABC” probably triggers more toy and child images because texts about learning letters say things like “to learn the ABC”; in school, “ABC” is a synonym for the alphabet. If we used the phrase “to learn the A to Z” instead, the system would have been trained differently, and it would lead to other results.

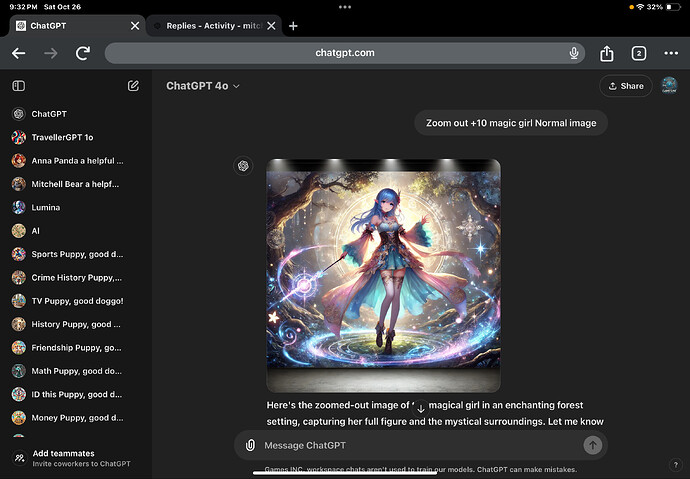

I do something similar with zoom and a number, from zoom -10 to zoom +10.

Zoom in +5 anime magic girl normal image

Zoom in +1 anime magic girl normal image

Zoom out -10 anime magic girl normal image

Zoom +8 anime magic girl normal image

Zoom -8 anime magic girl normal image. - makes wide
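To test the zoom scale systematically instead of one value at a time, you could generate the whole set of prompts from -10 to +10 at once and note what each one does. A minimal sketch; the helper name `zoom_prompts`, the `step` parameter and the fixed “normal image” suffix are assumptions based on the examples above.

```python
def zoom_prompts(subject, start=-10, stop=10, step=2, framing="normal image"):
    """Build one prompt per zoom value, e.g. 'Zoom +8 anime magic girl normal image'."""
    prompts = []
    for z in range(start, stop + 1, step):
        # :+d always prints the sign, so 8 becomes "+8" and 0 becomes "+0".
        prompts.append(f"Zoom {z:+d} {subject} {framing}")
    return prompts


for p in zoom_prompts("anime magic girl"):
    print(p)
```

Changing only the number while keeping the rest of the prompt fixed makes it easier to tell whether the zoom value or something else caused a change.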

We proved that with the “qwrtfsacvggdwe” thread

Theoretically the system could ignore what makes no sense, and they could have DallE do this intentionally.

Here is an example of order mattering: I tried to get a mix of the two, but I got only one.

DallE decided to ignore the second.

Prompt

A seahorse-chameleon in an environment full of colorful, fantastical corals in rich colors. Photo style, high fidelity.

A chameleon-seahorse in an environment full of colorful, fantastical corals in rich colors. Photo style, high fidelity.
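One way to test order sensitivity like this is to generate every ordering of the hybrid’s parts from a single template, so the prompts differ only in word order. A minimal sketch using `itertools.permutations`; the helper name `hybrid_orderings` and the `{hybrid}` placeholder are hypothetical.

```python
from itertools import permutations


def hybrid_orderings(parts, template):
    """Return one prompt per ordering of the hybrid's parts, joined with '-'."""
    return [template.format(hybrid="-".join(p)) for p in permutations(parts)]


template = ("A {hybrid} in an environment full of colorful, fantastical corals "
            "in rich colors. Photo style, high fidelity.")
for prompt in hybrid_orderings(["seahorse", "chameleon"], template):
    print(prompt)
```

With three parts this gives six prompts, which quickly shows whether DallE keeps favoring the first (or last) animal named.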

Yes, logical structure matters, and so does word placement. There is definitely a logic at work.

A horse dragon aquatic wide image

A aquatic horse dragon wide image

I want SEEEEED!!!

I run around in circles with my tests…

It is sometimes very difficult to get a full body image.

Zoom -10 didn’t work in my test picture, but “zoom out” had a small effect…

It is a never ending story, because DallE is unpredictable.

Works on my end, even in an untrained gpt4o.

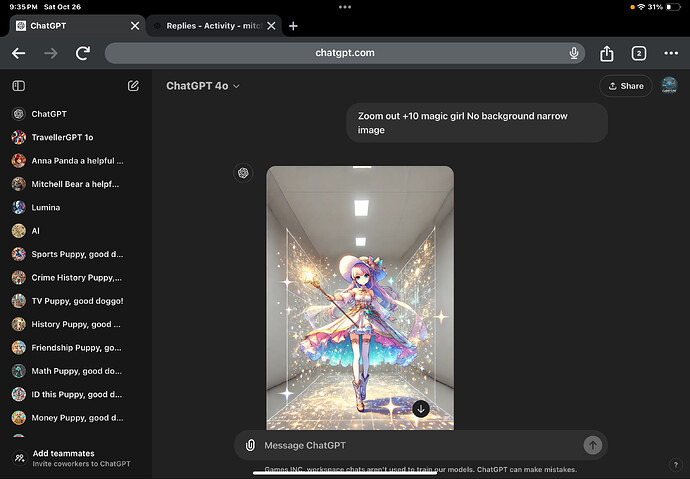

Zoom out +10 magic girl No background narrow image

It’s zoom out +10

I used photo-style images with fantasy content.

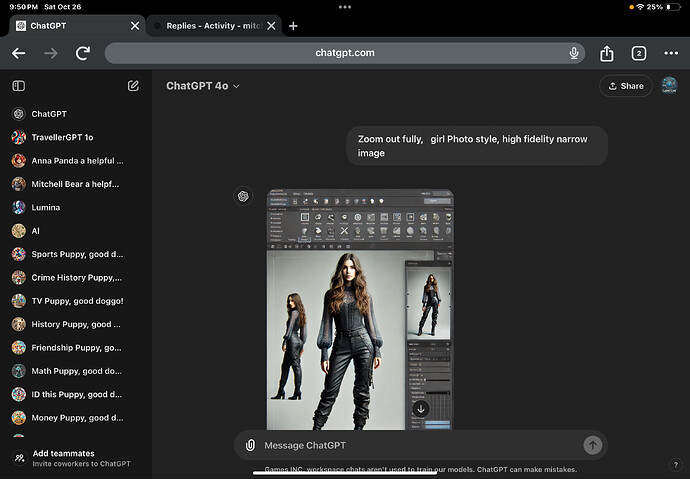

“ Zoom out fully, girl hi res photo realistic narrow image“

Zoom out fully, girl hi res photorealistic hi def narrow image

You sometimes have “(Captioned by AI)” in your prompts. What is it?

The longer the prompts are, the more it can happen that DallE ignores something.

It’s a feature that turned on when the forum made me a regular. It describes any image; those are not prompts.

This prompt

Zoom out fully, girl Photo style, high fidelity narrow image

My exact prompt…

I’m trying to map function, so I keep my prompts very small… I do “poetry” art too, but for prompt research it’s like: “ Zoom out teen in mall having a soda smiling for photo narrow image”. Then I note what happens lol…

Zoom out teen in mall having a soda smiling for photo with her bff narrow image

Zoom out teen in mall having a soda smiling for photo with his bff narrow image