The “mouthy” problem, or template effect, gets especially in the way when you generate non-human creatures. As you can see in all the examples I sent, none of them have human faces. The problem is that instead of creating a face that makes sense for the described creature, the system glues this silicone-looking facial structure onto it.

On a human face, a human “mouthy” might not even be noticeable. But try to create aesthetic non-humans. If you create monsters, the monster description is usually stronger than the mouthy, and the network takes another path to the result. (I now mostly avoid “mouthy” simply by creating more monstrous characters, but this does not work with the lighting template effect.)

You can talk it out of the way somehow, but there is still a chance it shows up, or it shows up as a mix: half human, half what it should be. Then you end up using one or two descriptions for the creature and four to six just for the mouth. And it is almost always the same mouth, as if the weights are stuck there and always guide the network to this result.

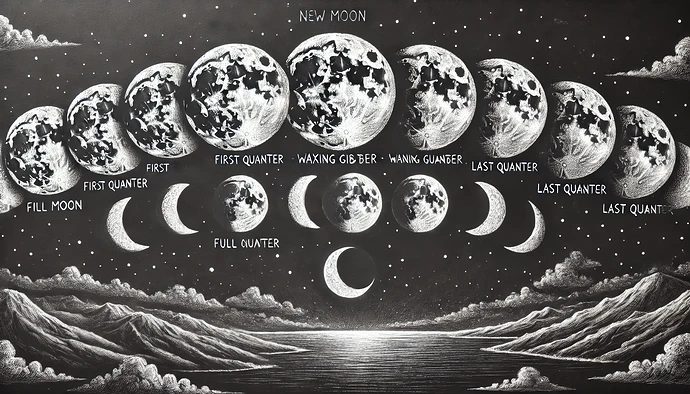

I don’t know how the system understands faces, but it could be that it analyses them in segments (similar to a facial recognizer). As with all the template effects I found, I now speculate that the weights are simply too heavy in one direction. The moon is another example of this. I speculated that they had reduced the dataset, but I don’t think so anymore (I hope). I think it is a training issue that pushes the decisions too far in one direction, as if into a dead end. There are surely many moon pictures out there, but the system always uses the same, completely ugly one. It is as if the system “likes” this moon the most and gives it too much weight, so the randomized selection process always ends up with the same result. The same is true for all the template effects.

Imagine the course of a river: where the water has cut deep into the soil and the terrain is steep, the water simply always takes the same way down (and erodes the soil even more if feedback is used).
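To make the “too much weight” idea and the river picture a bit more concrete, here is a toy sketch in Python. It is not how any real image model works, and it is only my speculation put into code: the option names, the weights and the feedback rule (multiply the winner's weight by `1 + rate` after every pick) are all made-up assumptions. It just shows the two effects I mean: with fixed but skewed weights, random sampling still lands on the heavy option most of the time, and with a feedback loop the heavy option takes over almost completely.

```python
import random
from collections import Counter


def sample_once(rng, weights):
    """Pick one option with probability proportional to its weight."""
    options = list(weights)
    return rng.choices(options, weights=[weights[o] for o in options], k=1)[0]


def sample_counts(weights, n, seed=0):
    """Draw n independent samples from fixed weights and count the outcomes."""
    rng = random.Random(seed)
    return Counter(sample_once(rng, weights) for _ in range(n))


def sample_with_feedback(weights, n, rate=0.01, seed=0):
    """Draw n samples, multiplying the winner's weight by (1 + rate) after each pick.

    Hypothetical stand-in for 'feedback': every result makes the same result
    more likely next time, like water deepening its own riverbed.
    """
    rng = random.Random(seed)
    w = dict(weights)  # copy so the caller's weights stay unchanged
    counts = Counter()
    for _ in range(n):
        pick = sample_once(rng, w)
        counts[pick] += 1
        w[pick] *= 1.0 + rate
    return counts, w


def share(d, key):
    """Fraction of the total (counts or weights) that belongs to `key`."""
    return d[key] / sum(d.values())


if __name__ == "__main__":
    # Hypothetical weights: one "template" moon much heavier than two alternatives.
    weights = {"template_moon": 8.0, "moon_b": 1.0, "moon_c": 1.0}

    fixed = sample_counts(weights, 10_000)
    print("fixed weights, template share of picks:",
          round(share(fixed, "template_moon"), 3))

    looped, final_w = sample_with_feedback(weights, 2_000)
    print("with feedback, template share of weight:",
          round(share(final_w, "template_moon"), 3))
```

The multiplicative rule is a deliberate choice for the toy: a plain “add a constant to the winner” rule (a Pólya urn) keeps the expected share where it started, while multiplying lets the leader pull away, which matches the “deeper and deeper riverbed” picture better.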

How you got the fire done is interesting too. I tried many things, but the illumination “template” is very hard to switch off. I have some pictures I would like to correct, because this lighting in the dark is very ugly, and in more complex scenes it is impossible to edit it without another AI.

In summary, I think the system must be trained better, and attributes must be separated more precisely, so that if you have, for example, a very dark scene with only one light source, or only a subtle rim light, the system has data for it that it can use. I have not yet fully understood how CLIP works, and GPT did not give me anything substantial. But I think it is both: the input data must be cleaner and better described (and I think the connection between language/prompt and data must be better).