Hey everyone,

I wanted to share some exciting updates that OpenAI announced today regarding Codex usage. These changes should help extend your available usage and improve performance across different plan tiers:

What’s New

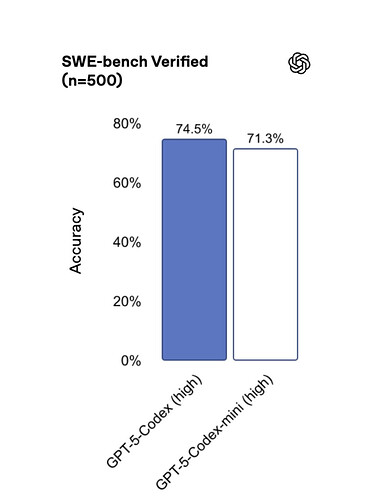

GPT-5-Codex-Mini — A more compact and cost-efficient version of GPT-5-Codex

- Offers roughly 4x more usage compared to GPT-5-Codex

- Comes with a slight capability tradeoff due to the smaller model size

- Currently available in the CLI and IDE extension when signed in with ChatGPT

- API support is coming soon
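To make the "roughly 4x" figure concrete, here is a rough back-of-the-envelope sketch. It assumes the multiplier applies evenly to comparable tasks, which the announcement does not state; the function name and the example numbers are hypothetical, not real quotas.

```python
# Rough estimate only: "roughly 4x more usage" is taken from the announcement.
# The task counts used here are made-up placeholders, not actual plan limits.
MINI_USAGE_MULTIPLIER = 4.0


def estimated_mini_tasks(remaining_full_model_tasks: float,
                         multiplier: float = MINI_USAGE_MULTIPLIER) -> float:
    """Estimate how many comparable tasks the same remaining budget covers on Mini."""
    if remaining_full_model_tasks < 0:
        raise ValueError("remaining_full_model_tasks must be non-negative")
    return remaining_full_model_tasks * multiplier


if __name__ == "__main__":
    # If your remaining budget covers about 10 tasks on GPT-5-Codex,
    # it would cover about 40 comparable tasks on GPT-5-Codex-Mini.
    print(estimated_mini_tasks(10))
```

Real savings will likely vary by task, since the smaller model may need more turns on harder problems.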

50% Higher Rate Limits for ChatGPT Plus, Business, and Edu plans

- This increase comes from efficiency improvements in GPU utilization

Priority Processing for ChatGPT Pro and Enterprise accounts

- Designed to deliver maximum speed for users on these tiers

How to Use GPT-5-Codex-Mini

The new Mini model is ideal for:

- Easier or more straightforward coding tasks

- Extending your usage when approaching rate limits

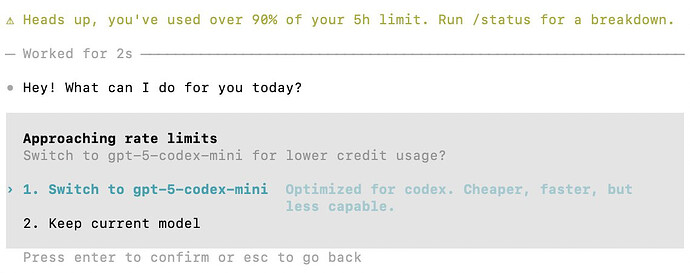

There’s also a helpful feature where Codex will suggest switching to Mini when you reach 90% of your limits, helping you continue working without interruptions.
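If you want to track this yourself, the 90% rule can be sketched as a simple threshold check. This is only an illustration of the idea: how Codex actually measures usage is not described in the announcement, and `should_suggest_mini`, `used`, and `limit` are hypothetical names.

```python
# Illustrative sketch, not Codex's actual implementation.
# The 90% threshold comes from the announcement; everything else is assumed.
SUGGEST_THRESHOLD = 0.90


def should_suggest_mini(used: float, limit: float,
                        threshold: float = SUGGEST_THRESHOLD) -> bool:
    """Return True once usage reaches the given fraction of the limit."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return used / limit >= threshold


if __name__ == "__main__":
    print(should_suggest_mini(used=95, limit=100))  # at or above 90% of the limit
    print(should_suggest_mini(used=50, limit=100))  # well below the threshold
```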

Try It Out

You can start using GPT-5-Codex-Mini right now with:

$ codex -m gpt-5-codex-mini

Share your experience with the community:

- Have you tried GPT-5-Codex-Mini yet? How does it compare to the full model for your use cases?

- For those on Plus/Business/Edu plans, are you noticing the 50% rate limit increase?

- Pro/Enterprise users, how’s the priority processing performing for you?