gpt-3.5-turbo category with its model details page

Tier 2 gets 2 million, but tier 3 gets 0.8 million?

(I can confirm the last figure is OK..)

Seems either the documentation is wrong, or delivered tier 3 is a demotion…

Same issue with the 16k model: https://platform.openai.com/docs/models/gpt-3.5-turbo-16k-0613

chatgpt-4o-latest

This model has strictly curtailed usage, but its own model page does not reflect that:

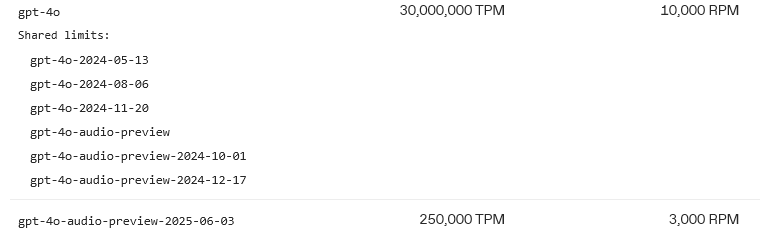

t5- Documentation says 30_000_000TPM; 10_000RPM; actual org:

![]()

(gpt-5-chat-latest is not “swiftly curtailed”…)

gpt-4o-audio-preview-2025-06-03 - default rate

More issues: organization has demoted gpt-4o-audio-preview-2025-06-03 rates:

The model page for “audio” does not state that separate diminished “all other models” rate being delivered to the org, but has its own issue with batch for tier 4:

…I’m going down the line items of models endpoint models, and not even at the halfway point…

gpt-4.1 “long context”

Dispensing of tiers is irregular:

normal:

tier 2: 450_000 => 500_000 = +10%

tier 3: 800_000 => 1_000_000 = +20%

tier 4: 2_000_000 => 5_000_000 = +250%

tier 5: 30M => 10M = - 66%

long context “mode” detected on your input by the rate limiter (that can’t see images or know PDFs)

Tier 1 rates are made even sillier here. For a single input:

- 0-30000:OK;

- 30001-mystery cutoff: fail;

- cutoff-200000:OK again.

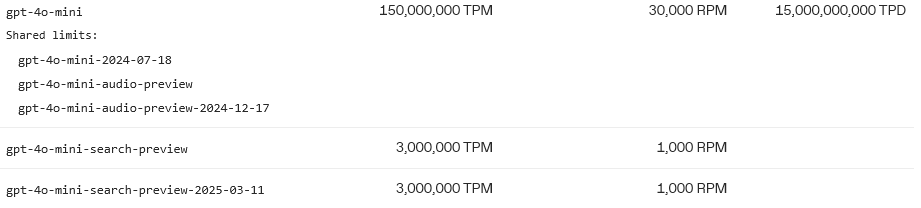

gpt-4o-mini-search-preview

Wrong rates documented vs delivered by API limits

The mini search model has the same rates as normal “mini”

However, in the organization, we can see that search limits are held-out at a different, much-lower rate than gpt-4o-mini, likely the same for all tiers - and no batching: