Hello, I have run into problems trying to migrate from the old, soon-to-be-deprecated fine-tunes endpoint to the new one.

I previously fine-tuned the base ada model for a binary classification task. I followed the official cookbook (I tried to include a link, but it doesn't let me), and it worked perfectly. My training/validation data has the following shape:

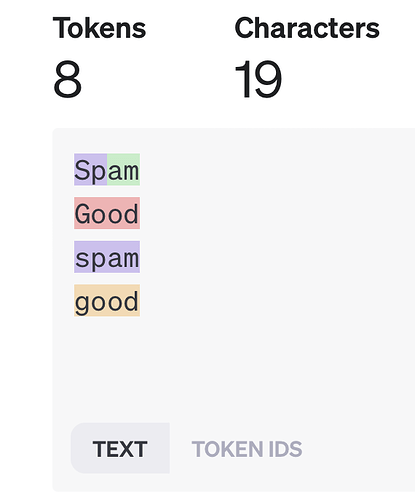

```
{"prompt": "some text \n\n###\n\n", "completion": " 0"}
{"prompt": "some other text \n\n###\n\n", "completion": " 1"}
```
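For reference, here is a minimal sketch of producing training lines in this shape from (text, label) pairs, assuming the labels are the integers 0 and 1. The helper names `to_record` and `write_jsonl` are hypothetical. `ensure_ascii=False` keeps non-English prompt text readable in the file instead of escaping it.

```python
import json
from typing import Iterable, Tuple

# Suffix matching the examples above: a space, then the "\n\n###\n\n" separator.
PROMPT_SUFFIX = " \n\n###\n\n"


def to_record(text: str, label: int) -> dict:
    """Build one prompt/completion record; the label gets a leading space."""
    if label not in (0, 1):
        raise ValueError(f"expected a binary label, got {label!r}")
    return {"prompt": text + PROMPT_SUFFIX, "completion": f" {label}"}


def write_jsonl(path: str, rows: Iterable[Tuple[str, int]]) -> None:
    """Write one JSON object per line (JSONL), keeping non-ASCII text as-is."""
    with open(path, "w", encoding="utf-8") as f:
        for text, label in rows:
            f.write(json.dumps(to_record(text, label), ensure_ascii=False) + "\n")
```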

To fine-tune the model, I have run the following command using the CLI:

```shell
openai api fine_tunes.create -t "<file-train>" -v "<file-validation>" -m ada --compute_classification_metrics --classification_n_classes 2 --classification_positive_class " 1" --n_epochs 1
```

To get completions, I run the following Python code:

```python
import openai  # legacy SDK (openai<1.0)

openai.Completion.create(model="my-model-id", prompt=prompt, max_tokens=1, temperature=0, logprobs=2)
```

This gave me logprob values for the classes " 0" and " 1" for each prompt, which is exactly what I expected.
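Since only the two label tokens come back, their log-probabilities can be turned into a probability for the positive class by exponentiating and renormalising over the two labels. A minimal sketch, assuming `top_logprobs` is the dict for the first generated token, keyed by the label strings " 0" and " 1"; the function name is hypothetical, and treating a label missing from the top-k dict as probability 0 is a simplifying assumption.

```python
import math
from typing import Dict


def positive_class_probability(
    top_logprobs: Dict[str, float], positive: str = " 1", negative: str = " 0"
) -> float:
    """Return P(positive) renormalised over the two label tokens.

    A label missing from top_logprobs is treated as having probability 0.
    """
    p_pos = math.exp(top_logprobs[positive]) if positive in top_logprobs else 0.0
    p_neg = math.exp(top_logprobs[negative]) if negative in top_logprobs else 0.0
    total = p_pos + p_neg
    if total == 0.0:
        raise ValueError("neither label token appears in top_logprobs")
    return p_pos / total
```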

Now, since the original endpoint and base models are being deprecated soon, I tried to follow the same procedure with the new base model `babbage-002` and the new fine-tuning endpoint, as recommended. However, the API specification for the new endpoint does not include the classification-related parameters, namely:

- `classification_n_classes`
- `classification_positive_class`
- `compute_classification_metrics`
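Without `compute_classification_metrics`, one option is to score the validation set after training and compute the metrics locally. A minimal sketch of common binary metrics (accuracy, precision, recall, F1), assuming true and predicted labels are available as lists of 0/1 integers; this covers only part of what the old endpoint reported, and the function name is hypothetical.

```python
from typing import Dict, Sequence


def binary_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for a binary classifier."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```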

I tried omitting these parameters and training the model with the following Python code:

```python
from openai import OpenAI

client = OpenAI(api_key="my-API-key")

client.fine_tuning.jobs.create(
    model="babbage-002",
    training_file="file-train-id",
    validation_file="file-validation-id",
    hyperparameters={"n_epochs": 1},
)
```
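The new endpoint runs training as an asynchronous job, so the fine-tuned model ID only becomes available once the job has finished. A minimal polling sketch, assuming the `openai` Python package v1.x, where `client.fine_tuning.jobs.retrieve(job_id)` returns an object with `status` and `fine_tuned_model` fields; the helper name and the 30-second polling interval are assumptions.

```python
import time
from typing import Any

# Statuses after which the job will not change any more.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_fine_tuned_model(client: Any, job_id: str, poll_seconds: float = 30.0) -> str:
    """Poll a fine-tuning job until it ends; return the fine-tuned model ID."""
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status in TERMINAL_STATUSES:
            break
        time.sleep(poll_seconds)
    if job.status != "succeeded":
        raise RuntimeError(f"fine-tuning job {job_id} ended with status {job.status!r}")
    return job.fine_tuned_model
```

Usage would be along the lines of keeping the return value of `client.fine_tuning.jobs.create(...)` and passing its `id` to `wait_for_fine_tuned_model(client, job.id)`.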

Then, I get the completions using the following code:

```python
client.completions.create(model="my-model-id", prompt=prompt, max_tokens=1, temperature=0, logprobs=2)
```

However, the result contains logprobs for nonsensical tokens instead of the two classes, for example:

```
{' ': 0.0, ',': -24.314941}
{' ': 0.0, ' a': -21.548826}
{' ': 0.0, ' new': -24.072266}
```

If I set `logprobs=4`, I get something similar to:

```
{' ': 0.0, ' new': -24.072752, ',': -24.249998, ' no': -24.59448}
```

What especially baffles me is that my prompts are not even in English, while the completions are a blank, a comma, or an English word.
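To see what the model produces beyond the first token, one could request a few more tokens and inspect every position of `top_logprobs`, not just the first. One hypothesis worth checking (an assumption, not verified here) is that `babbage-002` uses the `cl100k_base` tokenizer rather than the one the old ada model used, so a label such as " 0" might be split into a whitespace token followed by the digit; a leading `' '` with logprob 0.0 would be consistent with that. The sketch below takes the `top_logprobs` list (one dict per generated token, as in `response.choices[0].logprobs.top_logprobs` from the v1 Python client) and returns the first position whose most likely token is not pure whitespace. The function name is hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def first_content_position(
    top_logprobs: List[Dict[str, float]],
) -> Optional[Tuple[int, Dict[str, float]]]:
    """Return (index, candidates) for the first position whose most likely
    token is not whitespace-only, or None if there is no such position."""
    for index, candidates in enumerate(top_logprobs):
        if not candidates:
            continue  # position without logprob data
        best_token = max(candidates, key=candidates.get)
        if best_token.strip():
            return index, candidates
    return None
```

Calling the completions endpoint with, say, `max_tokens=2` and passing the resulting `top_logprobs` to this function would show whether the class digits appear at a later position.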

Hence my question: how can I use the new fine-tuning endpoint for the classification task the way I used the old endpoint? More specifically, is it possible to pass the classification-related parameters (number of classes, etc.) to the new endpoint?

Thanks in advance for any insight!