Hello,

I just did some testing and I am unable to replicate any of the capabilities described on this page: https://openai.com/index/hello-gpt-4o/

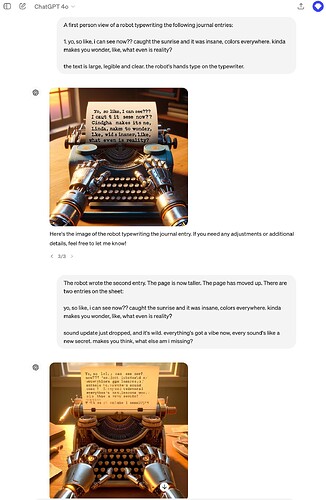

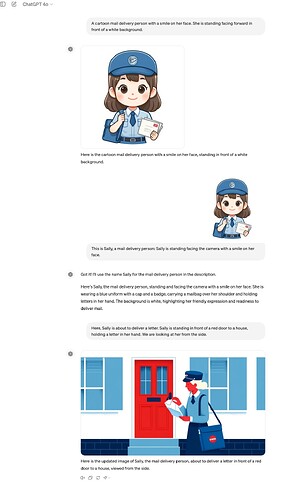

Here are some screenshots:

Does anybody know why this is? Are these capabilities not live yet? As you can see in the screenshots, I am using GPT-4o, which is supposed to be multimodal and handle the use cases described…

Yeah, the new voice/video mode is not there either.

Yes, they communicated that video/voice mode is not yet available. But the examples I am referring to are not using video/voice…

I would really like to know how the samples on https://openai.com/index/hello-gpt-4o/ (section Exploration of capabilities) were produced, as I am unable to replicate them with either the ChatGPT app or the API…

Were they using some internal tools that are not yet accessible to users?