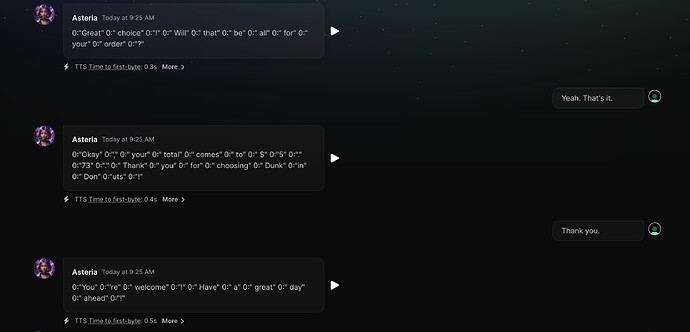

This is working, but I have one issue. In the response, instead of getting friendly text, I am getting the raw streamed response. Please see the attached images comparing the console and the UI. My code is here:

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo-0613",

stream: true,

messages,

tools,

tool_choice: "auto",

});

const data = new experimental_StreamData();

const stream = OpenAIStream(response, {

experimental_onToolCall: async (

call: ToolCallPayload,

appendToolCallMessage,

) => {

for (const toolCall of call.tools) {

console.log("tool call ===>", toolCall);

// Note: this is a very simple example of a tool call handler

// that only supports a single tool call function.

if (toolCall.func.name === 'order-single-item') {

const functionArguments: string = toolCall.func.arguments;

const parsedArguments = JSON.parse(functionArguments);

// Placeholder result; the real order API call would go here

const functionResult = `Added to the order: ${JSON.stringify(parsedArguments)}`;

const newMessages = appendToolCallMessage({

tool_call_id: toolCall.id,

function_name: 'order-single-item',

tool_call_result: functionResult,

});

return openai.chat.completions.create({

messages: [...messages, ...newMessages],

model: "gpt-3.5-turbo-0613",

stream: true,

tools,

tool_choice: 'auto',

});

}

}

},

onCompletion(completion) {

console.log('completion', completion);

},

onFinal(completion) {

data.close();

},

experimental_streamData: true,

});

data.append({

text: 'Hello, how are you?',

});

const responsestream = new StreamingTextResponse(stream, {

headers: {

"X-LLM-Start": `${start}`,

"X-LLM-Response": `${Date.now()}`,

}

});

//console.log("resstream ===>",responsestream);

return responsestream;
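
To make the symptom concrete, here is a minimal sketch of what I mean by "friendly text". It assumes the raw output shown in the UI is the SDK's framed stream format, where each line looks like `<type>:<JSON>` and type `0` carries a text chunk. That layout is an assumption on my part, and `decodeFramedText` is a hypothetical helper, not something from the `ai` package.

```typescript
// Hypothetical helper (not part of the `ai` package): turns framed lines such as
// `0:"Hello"` back into plain text. The frame layout is an assumption.
function decodeFramedText(raw: string): string {
  let text = "";
  for (const line of raw.split("\n")) {
    const sep = line.indexOf(":");
    if (sep === -1) continue; // skip blank or unframed lines
    const type = line.slice(0, sep);
    if (type !== "0") continue; // assumed: "0" marks a text chunk, other types are data
    const value: unknown = JSON.parse(line.slice(sep + 1));
    if (typeof value === "string") text += value;
  }
  return text;
}

// Sample input made up for illustration, shaped like the raw output described above.
const sample =
  '0:"Added"\n0:" to the order"\n2:[{"text":"Hello, how are you?"}]\n';

console.log(decodeFramedText(sample)); // prints "Added to the order"
```

The UI should show only the decoded text (the last line's output), not the framed lines themselves.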