Hello,

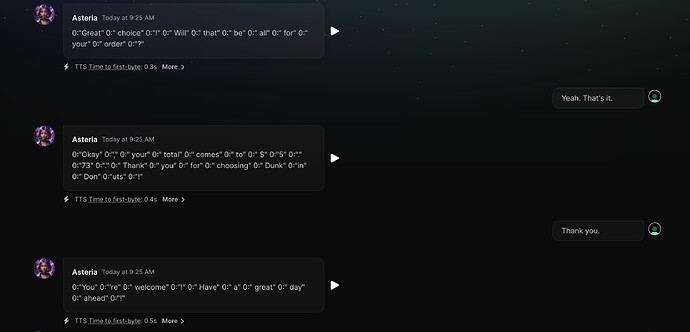

Is function calling incompatible with streaming? I have an example without streaming that works well, but when I set `stream: true`, function calling no longer works. I am trying to build a drive-thru app that makes recursive calls depending on whether the order contains a single item or multiple items. Can someone help me with how to do recursive function calling with streaming?

```typescript
import OpenAI from "openai";
import { OpenAIStream, StreamingTextResponse } from "ai";
import { functions } from './functions';
import type { ChatCompletionCreateParams } from 'openai/resources/chat';

// Optional, but recommended: run on the edge runtime.
// See https://vercel.com/docs/concepts/functions/edge-functions
export const runtime = "edge";

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY!,
});

// Example tool handlers, keyed by tool name
const tools: Record<string, (args: Record<string, unknown>) => void> = {
  'order-single-item': (args) => {
    // Logic to handle a single item order
    console.log('Ordering Single Item:', args);
    // Implement the ordering logic here
  },
  'order-menu-item': (args) => {
    // Logic to handle a menu item order
    console.log('Ordering Menu Item:', args);
    // Implement the ordering logic here
  },
  'order-drink-item': (args) => {
    // Logic to handle a drink item order
    console.log('Ordering Drink Item:', args);
    // Implement the ordering logic here
  },
};
```
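The `tools` object above maps names to handler functions, but the `tools` request parameter of the Chat Completions API expects an array of JSON Schema definitions of the form `{ type: "function", function: { name, description, parameters } }`, so the handler object cannot be passed to it directly. A minimal sketch of matching definitions follows. The parameter fields (`item`, `quantity`, `size`) and the helper `itemSchema` are hypothetical placeholders, and the local `ToolDefinition` type only mirrors the API shape instead of being imported from the `openai` package.

```typescript
// Local mirror of one entry in the Chat Completions `tools` array (assumed shape).
type ToolDefinition = {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: Record<string, unknown>; // a JSON Schema object
  };
};

// Hypothetical shared JSON Schema for an item order; extra properties can be merged in.
const itemSchema = (extra: Record<string, unknown> = {}) => ({
  type: "object",
  properties: {
    item: { type: "string", description: "Name of the item as the customer said it" },
    quantity: { type: "integer", minimum: 1 },
    ...extra,
  },
  required: ["item", "quantity"],
});

// Names match the keys of the handler object so a tool call can be looked up by name.
const toolDefinitions: ToolDefinition[] = [
  {
    type: "function",
    function: {
      name: "order-single-item",
      description: "Add a single item to the order",
      parameters: itemSchema(),
    },
  },
  {
    type: "function",
    function: {
      name: "order-menu-item",
      description: "Add a menu (combo) item to the order",
      parameters: itemSchema(),
    },
  },
  {
    type: "function",
    function: {
      name: "order-drink-item",
      description: "Add a drink to the order",
      parameters: itemSchema({ size: { type: "string", enum: ["small", "medium", "large"] } }),
    },
  },
];
```

With definitions like these, the request would pass `tools: toolDefinitions` together with `tool_choice: "auto"`, and the handler object stays on the server side to execute whatever call the model returns.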

```typescript
export async function POST(req: Request) {
  // Extract the `messages` from the body of the request
  const { messages } = await req.json();
  const start = Date.now();

  try {
    // Request a streamed chat completion from the OpenAI API
    const response = await openai.chat.completions.create({
      model: "gpt-4",
      stream: true,
      messages: messages,
      //tools: tools,
      //tool_choice: "auto",
    });

    //console.log("messages ====>", messages);
    //console.log("response ===>", response);

    const stream = OpenAIStream(response);

    const toolInvocation: { name: string | null; arguments: string } = {
      name: null,
      arguments: "",
    };

    // Example pseudo-logic for tool invocation based on accumulated data.
    // Nothing above fills toolInvocation from the stream, so name is still
    // null at this point and no tool is invoked.
    if (toolInvocation.name && typeof tools[toolInvocation.name] === "function") {
      try {
        // Assuming toolInvocation.arguments is a stringified JSON, parse it
        // If it's not in JSON format, adjust this part accordingly
        const args = JSON.parse(toolInvocation.arguments);
        tools[toolInvocation.name](args); // Invoke the tool with parsed arguments
      } catch (error) {
        console.error("Error invoking tool or parsing arguments:", error);
        // Handle errors appropriately
      }
    }

    const responsestream = new StreamingTextResponse(stream, {
      headers: {
        "X-LLM-Start": `${start}`,
        "X-LLM-Response": `${Date.now()}`,
      },
    });

    //console.log("resstream ===>", responsestream);
    return responsestream;
  } catch (error) {
    console.error("Error: ", error);
    // `error` is `unknown` in a catch clause, so narrow it before reading `.message`
    const message = error instanceof Error ? error.message : String(error);
    return new Response(JSON.stringify({ error: message }), {
      status: 500,
      headers: { 'Content-Type': 'application/json' },
    });
  }
}
```
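Function calling does work with `stream: true`, but a tool call then arrives in fragments: each chunk's `choices[0].delta.tool_calls` entries carry an `index`, the `id` and `function.name` typically come in the first fragment, and `function.arguments` is split across many chunks. In the handler above, `toolInvocation` is checked right after `OpenAIStream(response)` is created, before any chunk has been read, so it is always empty. The sketch below shows the missing steps under stated assumptions: it accumulates the fragments, runs the matching handlers, appends the results as `tool` messages, and asks the model again until it answers with plain text. The chunk and message types are small local mirrors of OpenAI's shapes rather than imports, `collect` and `runWithTools` are hypothetical helpers, `createStream` is a hypothetical stand-in for a streamed `openai.chat.completions.create(...)` call, and the recursion is written as a bounded loop.

```typescript
// Minimal local mirrors of the streamed chunk and message shapes (assumed, not imported).
type ToolCallDelta = { index: number; id?: string; function?: { name?: string; arguments?: string } };
type Chunk = { choices: { delta: { content?: string | null; tool_calls?: ToolCallDelta[] } }[] };
type ToolCall = { id: string; type: "function"; function: { name: string; arguments: string } };
type Message =
  | { role: "system" | "user"; content: string }
  | { role: "assistant"; content: string | null; tool_calls?: ToolCall[] }
  | { role: "tool"; tool_call_id: string; content: string };
type Handler = (args: Record<string, unknown>) => unknown;

// Read one streamed completion to the end, collecting text and reassembling tool calls.
async function collect(stream: AsyncIterable<Chunk>) {
  let text = "";
  const calls: ToolCall[] = [];
  for await (const chunk of stream) {
    const delta = chunk.choices[0]?.delta;
    if (!delta) continue;
    if (delta.content) text += delta.content;
    for (const part of delta.tool_calls ?? []) {
      // Fragments of the same call share an index; create the entry on first sight.
      const call = (calls[part.index] ??= { id: "", type: "function", function: { name: "", arguments: "" } });
      if (part.id) call.id = part.id;
      if (part.function?.name) call.function.name += part.function.name;
      if (part.function?.arguments) call.function.arguments += part.function.arguments;
    }
  }
  return { text, calls };
}

// Keep asking the model while it requests tools; stop when it replies with text only.
async function runWithTools(
  messages: Message[],
  createStream: (messages: Message[]) => Promise<AsyncIterable<Chunk>>,
  handlers: Record<string, Handler>,
  maxRounds = 5,
): Promise<string> {
  for (let round = 0; round < maxRounds; round++) {
    const { text, calls } = await collect(await createStream(messages));
    if (calls.length === 0) return text; // plain answer: done
    // The assistant turn that requested the tools must precede their results.
    messages.push({ role: "assistant", content: text || null, tool_calls: calls });
    for (const call of calls) {
      const handler = handlers[call.function.name];
      let result: unknown;
      try {
        result = handler
          ? await handler(JSON.parse(call.function.arguments || "{}"))
          : { error: `unknown tool ${call.function.name}` };
      } catch (err) {
        result = { error: String(err) };
      }
      // Each result is sent back with the id of the call it answers.
      messages.push({ role: "tool", tool_call_id: call.id, content: JSON.stringify(result ?? "ok") });
    }
  }
  throw new Error(`model still requesting tools after ${maxRounds} rounds`);
}
```

In the route, `createStream` could wrap `openai.chat.completions.create({ model: "gpt-4", stream: true, messages, tools: [...], tool_choice: "auto" })` with the JSON Schema definitions in `tools`, since the stream returned by the `openai` v4 client is an async iterable of chunks (the local `Message` type may need a cast to the SDK's types). One trade-off of this sketch is that it buffers every round; to stream the final answer to the client, forward the `delta.content` fragments of the last round as they arrive instead of collecting them. Some versions of the Vercel AI SDK's `OpenAIStream` also accept an `experimental_onFunctionCall` callback for a similar purpose; check the documentation for the installed version.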