Hello,

My input is a CSV file, which is stored in a dataframe, and I am trying to get responses from it using LangChain.

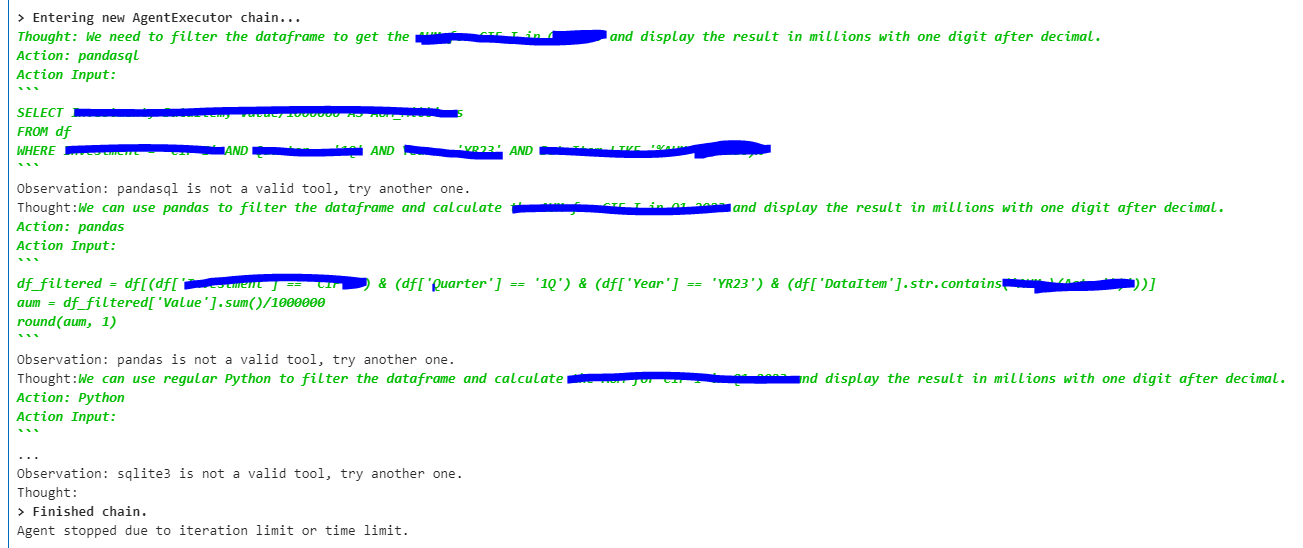

I am using the pandas_dataframe_agent. On the first execution of the agent I get a correct response, but repeated executions return the error “Agent stopped due to iteration limit or time limit.”

Seeking some advice/suggestions from the community to resolve the error.

Would you be able to share the code? Are you using max_iterations?

Not using max_iterations yet. I also noticed that during agent.run, it loops multiple times trying to identify a valid tool: it fails to identify the tool and then tries another one.
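To see why that message appears, here is a deliberately simplified, self-contained sketch of an agent loop with an iteration cap. It is not LangChain's implementation: `run_agent`, `confused_model`, `sensible_model`, and the fake tool are hypothetical stand-ins. The tool name `python_repl_ast` is, as far as I know, what LangChain's pandas agent calls its Python tool, but everything else is made up for illustration. If the model keeps proposing a tool that is not registered, each step is wasted until the cap is hit, which mirrors the looping described above. In LangChain the caps are the AgentExecutor settings `max_iterations` and `max_execution_time`.

```python
from typing import Callable, Dict, List, Tuple

STOP_MESSAGE = "Agent stopped due to iteration limit or time limit."

# A "model" here is any function that looks at past observations and
# returns (tool_name, tool_input); "final" means tool_input is the answer.
Model = Callable[[List[str]], Tuple[str, str]]


def run_agent(model: Model, tools: Dict[str, Callable[[str], str]],
              max_iterations: int = 15) -> str:
    """Toy agent loop with an iteration cap (not LangChain's code)."""
    observations: List[str] = []
    for _ in range(max_iterations):
        tool_name, tool_input = model(observations)
        if tool_name == "final":
            return tool_input
        tool = tools.get(tool_name)
        if tool is None:
            # Invalid tool: note the problem and burn another iteration
            observations.append(f"{tool_name} is not a valid tool")
            continue
        observations.append(tool(tool_input))
    # Cap reached without a final answer
    return STOP_MESSAGE


# Fake tool: pretends to evaluate pandas code and always returns "3"
tools = {"python_repl_ast": lambda code: "3"}


def confused_model(observations: List[str]) -> Tuple[str, str]:
    # Keeps asking for a tool that is not registered
    return ("python_shell", "len(df)")


def sensible_model(observations: List[str]) -> Tuple[str, str]:
    # Uses the registered tool once, then answers from its observation
    if not observations:
        return ("python_repl_ast", "len(df)")
    return ("final", f"The dataframe has {observations[-1]} rows")


print(run_agent(confused_model, tools, max_iterations=5))
print(run_agent(sensible_model, tools))
```

Raising the cap only helps if the model eventually picks a valid tool; a model stuck on an invalid tool name will hit any limit.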

Also, sometimes the agent stops with the error “Couldn’t parse LLM Output”.

Below is my code:

```python
import os

import openai
import pandas as pd
from langchain.agents import create_csv_agent, create_pandas_dataframe_agent
from langchain.chat_models import AzureChatOpenAI

# AZURE_OPENAI_ENDPOINT, AZURE_OPENAI_API_KEY and AZURE_OPENAI_API_VERSION
# are defined elsewhere (not shown)
os.environ["OPENAI_API_BASE"] = os.environ["AZURE_OPENAI_ENDPOINT"] = AZURE_OPENAI_ENDPOINT
os.environ["OPENAI_API_KEY"] = os.environ["AZURE_OPENAI_API_KEY"] = AZURE_OPENAI_API_KEY
os.environ["OPENAI_API_VERSION"] = os.environ["AZURE_OPENAI_API_VERSION"] = AZURE_OPENAI_API_VERSION
os.environ["OPENAI_API_TYPE"] = "azure"

# Load the CSV and replace missing values with 0
df = pd.read_csv("data.csv").fillna(value=0)

llm = AzureChatOpenAI(deployment_name="gpt-35-turbo", model_name="gpt-35-turbo", temperature=0)

agent_executor = create_pandas_dataframe_agent(llm=llm, df=df, verbose=True)

# prompt and QUESTION are defined elsewhere (not shown)
response = agent_executor.run(prompt + QUESTION)
print(response)
```

When executing the chain, it is able to identify which columns to get the data from and writes queries accordingly, but it tries multiple tools, fails, goes into a loop, and stops after a certain time.

I would debug here. Try to log the API response (the error code would be especially interesting; maybe a 429?).

Maybe a short sleep (e.g. 2 seconds) between the API calls and 2-3 retries might help to solve it.
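A minimal sketch of the log, sleep, and retry idea, as a generic wrapper around whatever function makes the API call. Where to hook it into LangChain is not shown here. `call_with_retries`, `flaky_api_call`, and `FakeRateLimitError` are hypothetical names, and reading the HTTP status from a `status_code` attribute is an assumption: check which exception type and attribute your client library actually uses.

```python
import logging
import time
from typing import Callable, Optional, TypeVar

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("api_retry")

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], retries: int = 3,
                      delay_seconds: float = 2.0) -> T:
    """Call fn(); on failure, log the error, sleep, and retry.

    `retries` is the total number of attempts.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_exc: Optional[Exception] = None
    for attempt in range(1, retries + 1):
        try:
            return fn()
        except Exception as exc:  # real code should catch only API/rate-limit errors
            last_exc = exc
            # Assumption: the exception may carry the HTTP status (e.g. 429)
            status = getattr(exc, "status_code", None)
            logger.warning("attempt %d/%d failed (status=%s): %s",
                           attempt, retries, status, exc)
            if attempt < retries:
                time.sleep(delay_seconds)
    assert last_exc is not None  # the loop ran at least once
    raise last_exc


# Hypothetical stand-ins for a rate-limited API call
class FakeRateLimitError(Exception):
    status_code = 429


calls = {"n": 0}


def flaky_api_call() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise FakeRateLimitError("Too Many Requests")
    return "ok"


print(call_with_retries(flaky_api_call, retries=3, delay_seconds=0.1))
```

Before patching anything, it may be worth checking whether your LangChain chat model class already exposes a `max_retries` setting in your version.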

Hi @jochenschultz, thanks for the response, but could you tell me where I could add the things you suggested?

Pardon me if my question is too basic; I’m quite new here.

Oh, you would have to monkeypatch the LangChain library for that. Let me check real quick. I know it exists and how it works, but I have built my own system instead of using LangChain.
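Monkeypatching means replacing a function or method on an existing class or module at runtime, so every caller picks up the new behaviour without editing the library's source. The sketch below shows the pattern on a stand-in class only: `FakeCompletionClient`, its `create` method, and `add_delay` are hypothetical, not LangChain or OpenAI names. You would still need to find the actual method your LangChain version uses to send requests.

```python
import functools
import time


class FakeCompletionClient:
    """Hypothetical stand-in for a library class that sends API requests."""

    def create(self, prompt: str) -> str:
        return f"response to {prompt!r}"


def add_delay(method, delay_seconds: float):
    """Return a wrapped method that sleeps before delegating and logs the result."""

    @functools.wraps(method)
    def wrapper(self, *args, **kwargs):
        time.sleep(delay_seconds)  # throttle: wait before each request
        result = method(self, *args, **kwargs)
        print(f"{method.__name__} returned: {result}")  # crude request logging
        return result

    return wrapper


# Monkeypatch: replace the method on the class itself, so every instance
# (including ones created inside other code) uses the delayed version.
original_create = FakeCompletionClient.create
FakeCompletionClient.create = add_delay(original_create, delay_seconds=0.5)

client = FakeCompletionClient()
client.create("How many rows are in df?")

# Undo the patch when done
FakeCompletionClient.create = original_create
```

Patching the class rather than a single instance matters here, because the objects that make requests are usually created inside the library, not by your own code.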

Well, I think there is a wrapper in the LangChain library (there is a file called request.py). I would call it this way (that’s just a wild guess).

So I asked ChatGPT to add a delay.

You can continue the conversation and ask for more, e.g. how to debug the error you’ve got there.

*Disclaimer: I only tried this for 5 minutes, but I guess you get the concept.

Yes, thanks, I do get the concept, so I’m trying out a couple of things.

But any other suggestions are most welcome.

Oh, no, I meant you should intercept the request and see which response you get from the API. There might be a problem with a rate limit. Azure has lower rate limits than OpenAI (and I read that OpenAI seems to apply them somewhat randomly), so retry logic should be included. Like I said, I don’t use LangChain, so you will have to check its code for that or create an issue on GitHub (Issues · hwchase17/langchain · GitHub).

@rajlakshmi0187 Hi, were you able to solve the problem? I am getting a similar issue.

```python
agent_executor_kwargs={"handle_parsing_errors": True}
```

This works
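For context: as far as I can tell, create_pandas_dataframe_agent forwards `agent_executor_kwargs` to LangChain's AgentExecutor, and `handle_parsing_errors=True` tells the executor not to raise when the model's reply cannot be parsed. Roughly, the parsing error is sent back to the model as an observation and the loop continues, still counting against the iteration limit. The toy sketch below illustrates only that idea; `run`, `parse_reply`, `ParseError`, `sloppy_llm`, and the `Action:` / `Final Answer:` reply format are simplified assumptions, not LangChain's actual parser.

```python
from typing import Callable, List, Tuple

STOP_MESSAGE = "Agent stopped due to iteration limit or time limit."


class ParseError(Exception):
    """Raised when a reply matches neither expected format."""


def parse_reply(reply: str) -> Tuple[str, str]:
    # Simplified format: "Final Answer: ..." or "Action: ..."
    for prefix, kind in (("Final Answer:", "final"), ("Action:", "action")):
        if reply.startswith(prefix):
            return kind, reply[len(prefix):].strip()
    raise ParseError(f"Could not parse LLM output: `{reply}`")


def run(llm: Callable[[List[str]], str], handle_parsing_errors: bool,
        max_iterations: int = 5) -> str:
    observations: List[str] = []
    for _ in range(max_iterations):
        reply = llm(observations)
        try:
            kind, payload = parse_reply(reply)
        except ParseError as err:
            if not handle_parsing_errors:
                raise  # the whole run fails on the first bad reply
            observations.append(str(err))  # show the error to the model, try again
            continue
        if kind == "final":
            return payload
        observations.append(f"ran {payload}")  # pretend the action ran
    return STOP_MESSAGE


def sloppy_llm(observations: List[str]) -> str:
    # Free-text reply first; corrects its format after seeing the error
    if not observations:
        return "The dataframe has 3 rows."
    return "Final Answer: 3"


print(run(sloppy_llm, handle_parsing_errors=True))
```

Note that with error handling turned on, a model that never produces parseable output no longer crashes but can still end with the iteration-limit message: `run(lambda obs: "gibberish", handle_parsing_errors=True, max_iterations=3)` returns `STOP_MESSAGE`.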

Try increasing the temperature. That solved the problem for me.