I’ve recently run into unusual behavior with OpenAI’s function-calling implementation when using structured outputs. Specifically, if a function schema has only a single required property, the API consistently fails with a 400 status code and an error like this:

    {
      "error": {
        "message": "Invalid schema for function 'generate_simple_list': 'items' is not of type 'array'.",
        "type": "invalid_request_error",
        "param": "functions[0].parameters",
        "code": "invalid_function_parameters"
      }
    }

However, if I add a second property to the schema, without changing anything else, the call works and the API responds as expected.
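For anyone triaging a similar failure, it can help to parse the error body and look at which part of the request the API is pointing to. The following is a minimal sketch using `jsonlite::fromJSON()` on the error text quoted above; the variable names are illustrative and not part of any API.

```r
library(jsonlite)

# Error body copied from the 400 response quoted above.
error_body <- '{
  "error": {
    "message": "Invalid schema for function \'generate_simple_list\': \'items\' is not of type \'array\'.",
    "type": "invalid_request_error",
    "param": "functions[0].parameters",
    "code": "invalid_function_parameters"
  }
}'

# Parse the body and pull out the fields that locate the problem.
err <- fromJSON(error_body)$error

print(err$param)    # which part of the request was rejected
print(err$code)     # machine-readable error code
print(err$message)  # human-readable description
```

Note that `param` refers to the whole `parameters` object rather than to one property, so the quoted `'items'` in the message may describe a value somewhere in that object and not necessarily the `items` property itself.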

What I Was Doing

I was trying to define a function schema for a simple structured output. Here’s an example of a single-property schema that fails:

    {
      "name": "generate_simple_list",
      "parameters": {
        "type": "object",
        "properties": {
          "items": {
            "type": "array",
            "items": { "type": "string" }
          }
        },
        "required": ["items"]
      }
    }

And here’s the two-property schema that works:

    {
      "name": "generate_simple_list",
      "parameters": {
        "type": "object",
        "properties": {
          "items": {
            "type": "array",
            "items": { "type": "string" }
          },
          "count": {
            "type": "integer"
          }
        },
        "required": ["items", "count"]
      }
    }

The only difference is the addition of the count property.
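As a sanity check on that claim, the two schemas can be parsed and compared programmatically. This is a minimal sketch, assuming the two JSON documents above are pasted in as strings; the variable names are illustrative.

```r
library(jsonlite)

single_json <- '{
  "name": "generate_simple_list",
  "parameters": {
    "type": "object",
    "properties": {
      "items": { "type": "array", "items": { "type": "string" } }
    },
    "required": ["items"]
  }
}'

multi_json <- '{
  "name": "generate_simple_list",
  "parameters": {
    "type": "object",
    "properties": {
      "items": { "type": "array", "items": { "type": "string" } },
      "count": { "type": "integer" }
    },
    "required": ["items", "count"]
  }
}'

# simplifyVector = FALSE keeps JSON arrays as R lists instead of collapsing them.
single <- fromJSON(single_json, simplifyVector = FALSE)
multi  <- fromJSON(multi_json, simplifyVector = FALSE)

# Property names and required entries present only in the two-property schema.
extra_props <- setdiff(names(multi$parameters$properties),
                       names(single$parameters$properties))
extra_required <- setdiff(unlist(multi$parameters$required),
                          unlist(single$parameters$required))

# The shared "items" property should be defined identically in both schemas.
same_items <- identical(multi$parameters$properties$items,
                        single$parameters$properties$items)

print(extra_props)     # [1] "count"
print(extra_required)  # [1] "count"
print(same_items)      # [1] TRUE
```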

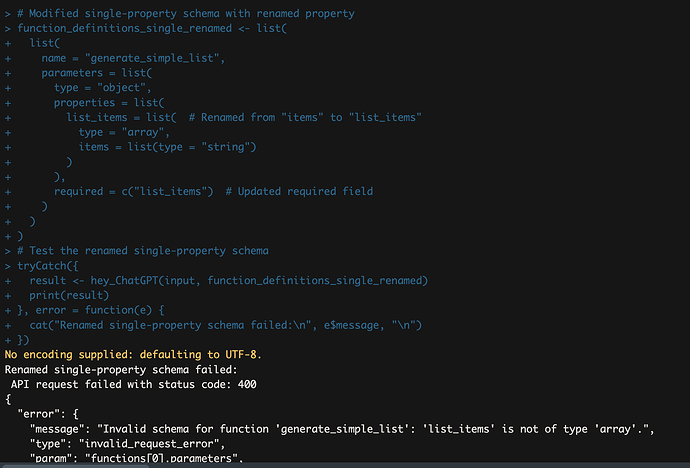

Reproducible Behavior

Here’s a simple example using R, but the same behavior should apply across other programming languages:

- Single-Property Schema:
  - The API fails with a 400 status code, returning an error indicating that the schema is invalid.
- Two-Property Schema:
  - The API processes the request successfully, calling the specified function and returning the expected output.

You can see the reproducible example in my code (included below).

    library(httr)
    library(jsonlite)

    # Function to call the OpenAI API
    hey_ChatGPT <- function(input, function_definitions = NULL) {
      body <- list(
        model = input$model,
        messages = input$messages
      )

      if (!is.null(function_definitions)) {
        body$functions <- function_definitions
      }

      body_json <- toJSON(body, auto_unbox = TRUE)

      response <- POST(
        url = "https://api.openai.com/v1/chat/completions",
        add_headers(Authorization = paste("Bearer", Sys.getenv("CR_API_KEY"))),
        content_type_json(),
        body = body_json
      )

      if (response$status_code == 200) {
        response_content <- content(response, as = "parsed", type = "application/json")
        return(response_content$choices[[1]]$message$function_call)
      } else {
        stop("API request failed with status code: ", response$status_code, "\n", content(response, "text"))
      }
    }

    # Single-property schema that fails
    function_definitions_single <- list(
      list(
        name = "generate_simple_list",
        parameters = list(
          type = "object",
          properties = list(
            items = list(
              type = "array",
              items = list(type = "string")
            )
          ),
          required = c("items")
        )
      )
    )

    # Multi-property schema that works
    function_definitions_multi <- list(
      list(
        name = "generate_simple_list",
        parameters = list(
          type = "object",
          properties = list(
            items = list(
              type = "array",
              items = list(type = "string")
            ),
            count = list(
              type = "integer"
            )
          ),
          required = c("items", "count")
        )
      )
    )

    # Input messages
    input <- list(
      model = "gpt-4",
      messages = list(
        list(role = "system", content = "You are a helpful assistant."),
        list(role = "user", content = "Generate a list of three fruits.")
      )
    )

    # Test the single-property schema (expect 400 error)
    tryCatch({
      result <- hey_ChatGPT(input, function_definitions_single)
      print(result)
    }, error = function(e) {
      cat("Single-property schema failed:\n", e$message, "\n")
    })

    # Test the multi-property schema (expect success)
    tryCatch({
      result <- hey_ChatGPT(input, function_definitions_multi)
      print(result)
    }, error = function(e) {
      cat("Multi-property schema failed:\n", e$message, "\n")
    })
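Before concluding that the API rejects single-property schemas, it may be worth checking what `toJSON()` actually sends. With `auto_unbox = TRUE`, jsonlite writes every length-1 atomic vector as a JSON scalar, so `required = c("items")` is serialized as the string `"items"` rather than the array `["items"]`, while `c("items", "count")` stays an array. That would be consistent with the `'items' is not of type 'array'` message, although this sketch does not call the API to confirm it. It only inspects the serialization and shows two ways to keep a one-element array: wrapping the value in `I()` or using an unnamed `list()`.

```r
library(jsonlite)

# With auto_unbox = TRUE, length-1 atomic vectors become JSON scalars.
single <- toJSON(list(required = c("items")), auto_unbox = TRUE)
multi  <- toJSON(list(required = c("items", "count")), auto_unbox = TRUE)

print(single)  # {"required":"items"}            <- a string, not an array
print(multi)   # {"required":["items","count"]}  <- an array

# Option 1: I() marks the vector "as is", so auto_unbox leaves it as an array.
kept_asis <- toJSON(list(required = I("items")), auto_unbox = TRUE)

# Option 2: an unnamed list is always written as a JSON array.
kept_list <- toJSON(list(required = list("items")), auto_unbox = TRUE)

print(kept_asis)  # {"required":["items"]}
print(kept_list)  # {"required":["items"]}
```

If this is the cause, changing `required = c("items")` to `required = I("items")` or `required = list("items")` in `function_definitions_single` would be the first thing to try, since the JSON Schema `required` keyword expects an array.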

Questions for the Community

- Is this a known or intentional behavior in OpenAI’s API implementation?

- If it’s intentional, are there guidelines for when a schema requires multiple properties to function correctly?

- Is this something OpenAI plans to document or address in future updates?

Any clarification or guidance from the community or OpenAI team would be greatly appreciated!