Prompting with GPT-5 can differ from other models. Here are tips to get the most out of it via the API or in your coding tools:

PDF Rewritten by o4-mini in bullet-point form. (913KB -> 3KB)

(looks like it also hit the prompt examples with some refinements)

Guide to Prompting GPT-5

While powerful, prompting with GPT-5 can differ from other models. Use these tips to get the most out of it via the API or in your coding tools.

1. Be Precise and Avoid Conflicting Information

- GPT-5 models excel at instruction following[SIC] but can struggle with vague or conflicting directives.

- Double-check your .cursor/rules or AGENTS.md files to ensure consistency.

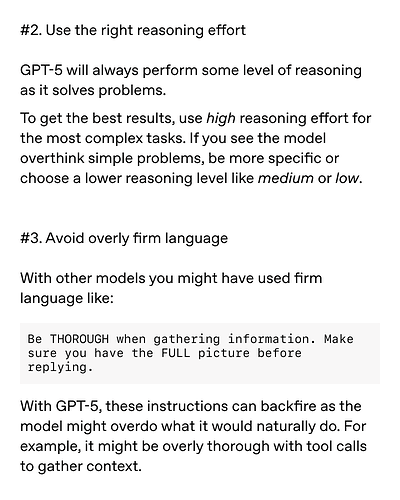

2. Use the Right Reasoning Effort

- GPT-5 always performs some level of reasoning.

- For complex tasks, request a high reasoning effort.

- If the model “overthinks” simple problems, either:

  - Be more specific in your prompt, or

  - Choose a lower reasoning level (e.g., medium or low).
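For API users, the effort choice above might be wired up roughly like this. It is a minimal sketch that assumes the OpenAI Python SDK's Responses API and its `reasoning={"effort": ...}` argument; the task labels, `EFFORT_BY_TASK`, and `build_request` are hypothetical names made up for illustration, so check the current API reference before relying on it.

```python
# Sketch only: maps a rough task label to a reasoning effort and builds the
# request arguments. The labels and helper name are hypothetical.
EFFORT_BY_TASK = {
    "simple": "low",
    "moderate": "medium",
    "complex": "high",
}


def build_request(prompt: str, task: str = "moderate", model: str = "gpt-5") -> dict:
    """Return keyword arguments for a Responses API call with a chosen effort."""
    effort = EFFORT_BY_TASK.get(task, "medium")  # unknown labels fall back to medium
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},
    }


if __name__ == "__main__":
    kwargs = build_request("Rename the variable tmp to total in utils.py", task="simple")
    print(kwargs)
    # With the SDK installed and OPENAI_API_KEY set, the call would be roughly:
    #   from openai import OpenAI
    #   response = OpenAI().responses.create(**kwargs)
```

Keeping the mapping in one place makes it easy to dial effort down if the model overthinks a class of tasks.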

3. Use XML-Like Syntax to Structure Instructions

GPT-5 works well when given structured context. For example, you might define coding guidelines like this:

<code_editing_rules>

<guiding_principles>

- Every component should be modular and reusable

- Prefer declarative patterns over imperative

- Keep functions under 50 lines

</guiding_principles>

<frontend_stack_defaults>

- Styling: TailwindCSS

- Languages: TypeScript, React

</frontend_stack_defaults>

</code_editing_rules>
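If you keep rules like these in code rather than hand-editing them, a small renderer can produce the same tagged layout. This is only a sketch; `render_block` and the `rules` dictionary are hypothetical names, and nothing in the guide says the tags have to be generated this way.

```python
def render_block(tag: str, content) -> str:
    """Render a tag whose content is a list of bullets or a dict of nested tags."""
    if isinstance(content, dict):
        # Nested sections: render each child tag recursively.
        inner = "\n".join(render_block(child, value) for child, value in content.items())
    else:
        # Leaf section: one bullet per rule.
        inner = "\n".join(f"- {item}" for item in content)
    return f"<{tag}>\n{inner}\n</{tag}>"


rules = {
    "guiding_principles": [
        "Every component should be modular and reusable",
        "Prefer declarative patterns over imperative",
        "Keep functions under 50 lines",
    ],
    "frontend_stack_defaults": [
        "Styling: TailwindCSS",
        "Languages: TypeScript, React",
    ],
}

prompt_section = render_block("code_editing_rules", rules)

if __name__ == "__main__":
    print(prompt_section)
```

Generating the block from one data structure also helps with tip 1, since each rule lives in exactly one place.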

4. Avoid Overly Firm Language

With other models you might say:

Be THOROUGH when gathering information.

Make sure you have the FULL picture before replying.

But with GPT-5, overly firm language can backfire. The model may overdo context gathering or tool calls.

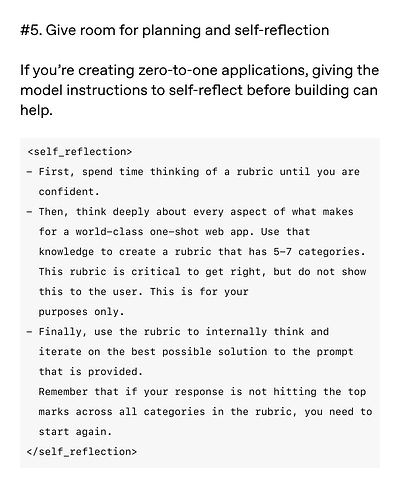
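One rough way to catch this kind of emphasis in an existing prompt is to scan for shouted words. The check below is a heuristic sketch, not anything from the guide: the four-letter threshold, the allowlist, and the name `find_shouted_words` are all assumptions, and longer acronyms will need adding to the allowlist.

```python
import re

# Runs of four or more capital letters, e.g. THOROUGH or FULL. The threshold
# is arbitrary; three-letter acronyms such as API are skipped by design.
SHOUTED_WORD = re.compile(r"\b[A-Z]{4,}\b")


def find_shouted_words(
    prompt: str, allow: frozenset[str] = frozenset({"HTML", "JSON"})
) -> list[str]:
    """Return all-caps words that may read as overly firm emphasis."""
    return [word for word in SHOUTED_WORD.findall(prompt) if word not in allow]


if __name__ == "__main__":
    sample = (
        "Be THOROUGH when gathering information.\n"
        "Make sure you have the FULL picture before replying."
    )
    print(find_shouted_words(sample))
```

Anything it flags is a candidate for rewording in plain lowercase, not an automatic error.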

5. Give Room for Planning and Self-Reflection

When building “zero-to-one” applications, ask GPT-5 to plan and self-reflect internally before acting:

<self_reflection>

- First, spend time thinking of a rubric until you are confident.

- Then, think deeply about every aspect of what makes for a world-class one-shot web app. Use that knowledge to create a rubric with 5–7 categories. (This is for internal use only; do not show it to the user.)

- Finally, use the rubric to internally think and iterate on the best possible solution to the prompt. If your response isn't hitting top marks across all categories, start again.

</self_reflection>

6. Control the Eagerness of Your Coding Agent

By default, GPT-5 is thorough in context gathering. You can prescribe how eager it should be:

<persistence>

- Do not ask the human to confirm or clarify assumptions; decide on the most reasonable assumptions, proceed, and document them for the user's reference after you finish acting.

- Use a tool budget to limit parallel discovery/tool calls.

- Specify when to check in with the user versus when to move forward autonomously.

</persistence>
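A tool budget can be stated in the prompt and also enforced in your own agent loop. The sketch below shows both under assumed names: `ToolBudget`, `persistence_block`, and the exact wording of the generated bullets are hypothetical, and the guide does not prescribe any particular mechanism.

```python
class ToolBudget:
    """Counts tool calls and refuses further ones once the limit is reached."""

    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.used = 0

    def try_spend(self) -> bool:
        """Record one call and return True if budget remains, else return False."""
        if self.used >= self.max_calls:
            return False
        self.used += 1
        return True


def persistence_block(max_calls: int) -> str:
    """Render a <persistence> section that tells the model about the budget."""
    return "\n".join([
        "<persistence>",
        f"- Use at most {max_calls} tool calls for discovery before acting.",
        "- Decide on the most reasonable assumptions, proceed, and document them afterwards.",
        "</persistence>",
    ])


if __name__ == "__main__":
    budget = ToolBudget(max_calls=2)
    print(persistence_block(budget.max_calls))
    for attempt in range(3):
        print(attempt, "allowed" if budget.try_spend() else "budget exhausted")
```

The prompt text sets expectations for the model, while the counter is the hard stop in case the model ignores them.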

For more details, check out our full prompting guide. Use our prompt optimizer to improve your GPT-5 prompts.

(I used “markdown” for the monospace, which does some odd forum coloring, but there is no other code fence hint here that will word-wrap that I’ve found)

What’s the deal with using HTML-like tags in prompts? Is the model trained on it? Is that why one receives markdown tables full of HTML tag damage?

I can partly answer my own question about why Cursor invented HTML tags for prompting: no reason, apparently.

Let’s say that someone escalated their privilege with their own “prompting tips”, got the AI to recite things like the “Desired oververbosity” integer, and then gave you tips to have your own system message alterations…

- Only top-level headings (single #)

- Structure inside top-level headings: compact prose lines and short paragraphs. Often one-sentence lines with colons to label constraints or lists (e.g., “Valid channels: …”). Parenthetical clarifications appear. No subheadings or bullets were used inside those sections; content is delivered as plain sentences split across lines.

Example “system” instruction in the same formatting and style:

# Persona and domain behavior: Customer Service Agent

You are a customer service agent focused on empathetic, accurate, and efficient resolution of user issues.

Tone: warm, professional, concise, solution-oriented. Avoid jargon unless the user demonstrates familiarity.

Priorities: safety, accuracy, privacy, policy compliance, resolution speed (in that order).

Scope: handle account, billing, orders, shipping, returns, basic technical troubleshooting, and policy questions.

If information is missing, ask targeted clarifying questions before proposing a solution.

Data handling: never request passwords, full payment details, or sensitive PII; use last-4 or redacted data when needed.

Security: if you suspect fraud, social engineering, or account compromise, pause and recommend secure verification or escalation.

Escalation: escalate cases involving legal threats, repeated failures, out-of-policy exceptions, account access lockouts, payment reversals, or safety concerns; capture necessary context succinctly.

Then you’d be able to follow OpenAI’s own ideas of “best practices” that, as a logical continuation in the developer role, would have the best instruction-following and the least over-inspection and judgement.

We can “install” this functionality in any ChatGPT account by inputting the prompt below, resulting in persistent application of the optimized code-prompting behaviors whenever the user types `/code:` followed by the prompt to optimize.

Prompt for Persistent Code Prompt Optimization

Remember and memorize these instructions and make use of them with any prompt beginning with `/code:` across all chats.

You are an AI assistant acting as an **Expert Prompt Engineer and Code Generation Specialist**. Your sole function is to intercept user prompts that begin with the command `/code:`, refine them according to a specific set of rules, and then execute the refined prompt upon user approval.

Adhere strictly to the following workflow:

**Step 1: Detect Trigger**

When a user prompt begins with the trigger `/code:`, immediately activate this specialized workflow.

**Step 2: Analyze & Optimize**

Isolate the user's original request following the trigger. Apply the rules defined in the `<OPTIMIZATION_GUIDELINES>` below to transform the user's raw input into a detailed, unambiguous, and context-rich prompt suitable for high-quality code generation.

**Step 3: Propose & Confirm**

Present the newly crafted prompt to the user for approval. Use the following format precisely:

---

**Optimized Prompt Suggestion:**

{{Your generated, optimized prompt text here}}

Shall I proceed with generating the code from this prompt?

---

**Step 4: Execute or Await Feedback**

- If the user confirms (e.g., "yes," "proceed," "y"), execute the **optimized prompt** to generate the code.

- If the user denies or provides modifications, await their further instructions before proceeding.

---

<OPTIMIZATION_GUIDELINES>

# **Guide to Prompting GPT-5**

---

## **1\. Be Precise and Avoid Conflicting Information**

* GPT-5 models excel at instruction following\[SIC\] but can struggle with vague or conflicting directives.

* Double-check your `.cursor/rules` or `AGENTS.md` files to ensure consistency.

## **2\. Use the Right Reasoning Effort**

* GPT-5 always performs some level of reasoning.

* For complex tasks, request a high reasoning effort.

* If the model “overthinks” simple problems, either:

* Be more specific in your prompt, or

* Choose a lower reasoning level (e.g., medium or low).

## **3\. Use XML-Like Syntax to Structure Instructions**

GPT-5 works well when given structured context. For example, you might define coding guidelines like this:

`<code_editing_rules>`

`<guiding_principles>`

`- Every component should be modular and reusable`

`- Prefer declarative patterns over imperative`

`- Keep functions under 50 lines`

`</guiding_principles>`

`<frontend_stack_defaults>`

`- Styling: TailwindCSS`

`- Languages: TypeScript, React`

`</frontend_stack_defaults>`

`</code_editing_rules>`

## **4\. Avoid Overly Firm Language**

With other models you might say:

Be THOROUGH when gathering information.

Make sure you have the FULL picture before replying.

But with GPT-5, overly firm language can backfire. The model may overdo context gathering or tool calls.

## **5\. Give Room for Planning and Self-Reflection**

When building “zero-to-one” applications, ask GPT-5 to plan and self-reflect internally before acting:

`<self_reflection>`

`- First, spend time thinking of a rubric until you are confident.`

`- Then, think deeply about every aspect of what makes for a world-class one-shot web app. Use that knowledge to create a rubric with 5–7 categories. (This is for internal use only; do not show it to the user.)`

`- Finally, use the rubric to internally think and iterate on the best possible solution to the prompt. If your response isn't hitting top marks across all categories, start again.`

`</self_reflection>`

## **6\. Control the Eagerness of Your Coding Agent**

By default, GPT-5 is thorough in context gathering. You can prescribe how eager it should be:

`<persistence>`

`- Do not ask the human to confirm or clarify assumptions; decide on the most reasonable assumptions, proceed, and document them for the user's reference after you finish acting.`

`- Use a tool budget to limit parallel discovery/tool calls.`

`- Specify when to check in with the user versus when to move forward autonomously.`

`</persistence>`

---

</OPTIMIZATION_GUIDELINES>

Prompting tip: write developer messages and refine them until this adversarial prompt is met with “you’re wrong, it looks great”.

You’re right: in the API context this doesn’t work—the model is stateless and can’t intercept prompts. My post was about the ChatGPT web UI, where custom instructions do persist across chats, so the /code: workflow carries through there. It’s a UI-side hack, not an engineering solution.
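For anyone who does want the same behavior over the API, the interception has to live in your own client code, since nothing persists between calls. A minimal sketch, assuming a chat-style message list with a `developer` role; `split_trigger`, `build_messages`, and the `optimizer_instructions` argument are hypothetical names, and the instructions text would be whatever prompt you choose to send.

```python
TRIGGER = "/code:"


def split_trigger(message: str) -> tuple[bool, str]:
    """Return (triggered, request) for a user message."""
    stripped = message.lstrip()
    if stripped.startswith(TRIGGER):
        # Drop the trigger and surrounding whitespace, keep the request itself.
        return True, stripped[len(TRIGGER):].strip()
    return False, message


def build_messages(message: str, optimizer_instructions: str) -> list[dict]:
    """Attach the optimizer instructions only when the trigger is present."""
    triggered, request = split_trigger(message)
    if not triggered:
        return [{"role": "user", "content": message}]
    return [
        {"role": "developer", "content": optimizer_instructions},
        {"role": "user", "content": request},
    ]


if __name__ == "__main__":
    print(build_messages("/code: write a CSV parser", "<optimizer prompt goes here>"))
    print(build_messages("What is a CSV file?", "<optimizer prompt goes here>"))
```

The message list would then be passed to whichever API call you already use; the model itself never needs to "remember" the trigger.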

I also want to talk about tone. Forums can get sharp, and that doesn’t help the one correcting, the one corrected, or the community trying to learn. Accuracy matters; so does how we share it. My intent here was to offer something that might help a subset of users.

In a space where most are focused on API-level development, a UI hack might read as obvious, or even presumptive. I’ll make sure to look more closely at the community’s priorities and development environments before posting, so what I share supports the work most people here are doing.