Hello.

I am currently fine-tuning GPT-3.5-turbo-1106. According to the API documentation, there is a limit on the number of tokens.

I am Tier 1.

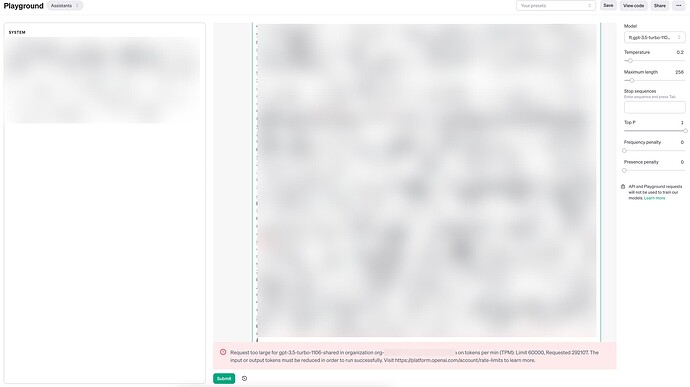

According to the picture, gpt-3.5-turbo has a TPM of 60k, and when I enter the maximum value in the Playground to check the token limit, a warning window like the ones below appears.

(Please see Figures A, B, and C.)

But another page of the documentation gives a limit of about 16k tokens.

(Please see Figure D.)

What I want to know is: what is the maximum number of tokens I can send in a request to the model I fine-tuned? Is it 16k or 60k?

[Figure A]

tier-1-rate-limits

[Figure B]

[Figure C]

[Figure D]

gpt-3-5

Token limits depend on the model you select. For gpt-3.5-turbo-1106, the maximum context length is 16,385 tokens, so each training example is also limited to 16,385 tokens. For gpt-3.5-turbo-0613, each training example is limited to 4,096 tokens.

The OpenAI documentation says that, of the 3.5-turbo models, only gpt-3.5-turbo-1106 and gpt-3.5-turbo-0613 are eligible for fine-tuning.

Since you said you are using GPT-3.5-turbo-1106, the maximum context length should be 16,385 tokens.

But then why does a warning window mentioning 60k tokens appear when I type in the Playground?

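The 60k limit is for TPM, which stands for Tokens Per Minute. This means that you cannot send more than a total of 60k tokens to the API within one minute. It is a rate limit across all of your requests, separate from the 16,385-token context limit that applies to each individual request.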
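To make the difference between the two numbers concrete, here is a rough sketch in Python. It is not how the API enforces its limits (the real accounting may differ); `TokenBudget`, `check`, and `record` are hypothetical names made up for illustration, and the token counts are assumed to be known already. The two constants are the figures quoted in this thread.

```python
import time
from collections import deque

CONTEXT_LIMIT = 16_385  # per-request limit for gpt-3.5-turbo-1106 (from the docs quoted above)
TPM_LIMIT = 60_000      # Tier 1 tokens-per-minute figure from the question


class TokenBudget:
    """Hypothetical client-side helper; illustrates the two limits only."""

    def __init__(self, tpm_limit=TPM_LIMIT, context_limit=CONTEXT_LIMIT):
        self.tpm_limit = tpm_limit
        self.context_limit = context_limit
        self._sent = deque()  # (timestamp_seconds, tokens) for recent requests

    def _used_in_window(self, now):
        # Forget requests that are 60 seconds old or older.
        while self._sent and now - self._sent[0][0] >= 60:
            self._sent.popleft()
        return sum(tokens for _, tokens in self._sent)

    def check(self, tokens, now=None):
        """Classify a request of `tokens` tokens without sending anything."""
        now = time.monotonic() if now is None else now
        if tokens > self.context_limit:
            return "too_large"      # never fits in one request, however long you wait
        if self._used_in_window(now) + tokens > self.tpm_limit:
            return "rate_limited"   # fits in one request, but not in this minute
        return "ok"

    def record(self, tokens, now=None):
        """Note that a request of `tokens` tokens was sent."""
        now = time.monotonic() if now is None else now
        self._sent.append((now, tokens))
```

With these numbers, a single 20,000-token request is always rejected as too large, while a 16,000-token request is fine on its own and only gets held back once earlier requests in the same minute have used up most of the 60k budget.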

Here is some more documentation on how the limits work.

Read through it a couple of times. It's a bit confusing at first, but it makes sense once you have gone over it.

That was very helpful. Thank you.
