Hi all,

I’m working with the Responses API for my project and I’m encountering an issue after a function call.

Here’s how I’ve set up my tool definitions:

```python
tools = [
    {"type": "web_search_preview"},
    {
        "type": "file_search",
        "vector_store_ids": ["store_id_1"],
    },
    {
        "type": "function",
        "name": "xxx",
        "description": "xxxx",
        "strict": True,
        "parameters": {
            "type": "object",
            "properties": {},
            "required": [],
            "additionalProperties": False,
        },
    },
]
```

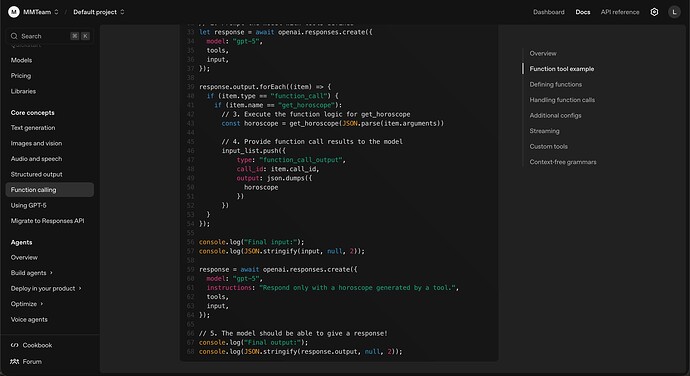
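Because the function tool sets `"strict": True`, its `parameters` schema has to follow strict-mode rules. Every object must set `additionalProperties` to `False` and must list all of its properties in `required`. Below is a minimal sketch of a hypothetical checker for only those two rules. It is not a full schema validator. The schema above passes it.

```python
def strict_schema_problems(schema: dict, path: str = "parameters") -> list:
    """List violations of two strict-mode rules, recursing into object properties."""
    problems = []
    if schema.get("type") == "object":
        # Rule 1: objects must explicitly forbid extra keys.
        if schema.get("additionalProperties") is not False:
            problems.append(f"{path}: additionalProperties must be False")
        # Rule 2: every declared property must also appear in "required".
        props = schema.get("properties", {})
        missing = sorted(set(props) - set(schema.get("required", [])))
        if missing:
            problems.append(f"{path}: not in required: {missing}")
        # Nested objects are checked with the same two rules.
        for name, sub in props.items():
            problems.extend(strict_schema_problems(sub, f"{path}.{name}"))
    return problems


params = {"type": "object", "properties": {}, "required": [], "additionalProperties": False}
print(strict_schema_problems(params))  # the schema from the post
```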

Here’s my API call logic:

```python
api_arguments = {
    "model": self.model,
    "input": messages,
    "temperature": self.temperature,
}

if tools:
    api_arguments["tools"] = tools

if previous_response_id:
    api_arguments["previous_response_id"] = previous_response_id

response = self.client.responses.create(**api_arguments)
```
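One thing worth checking is how `previous_response_id` is threaded through the turns. The sketch below tests a hypothesis and is not a confirmed fix. It uses a hypothetical `StubClient` in place of `self.client` so it runs offline. It also uses a hypothetical `create_response` helper shaped like the `api_arguments` logic above, with temperature omitted. The follow-up sends only the `function_call_output`, chained to the response that issued the call. The next user turn is then chained to the follow-up response's `id`.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class FakeResponse:
    # Stand-in for a Responses API response object; only `id` is modeled.
    id: str


@dataclass
class StubResponses:
    # Records every create() call so the chaining can be inspected offline.
    calls: list = field(default_factory=list)

    def create(self, **kwargs: Any) -> FakeResponse:
        self.calls.append(kwargs)
        return FakeResponse(id=f"resp_{len(self.calls)}")


@dataclass
class StubClient:
    # Hypothetical stand-in for the OpenAI client (client.responses.create).
    responses: StubResponses = field(default_factory=StubResponses)


def create_response(client, model, input_items, tools=None,
                    previous_response_id: Optional[str] = None):
    # Same shape as the api_arguments logic in the post.
    api_arguments = {"model": model, "input": input_items}
    if tools:
        api_arguments["tools"] = tools
    if previous_response_id:
        api_arguments["previous_response_id"] = previous_response_id
    return client.responses.create(**api_arguments)


client = StubClient()
# Turn 1: assume this response contains a function_call with call_id "call_abc".
first = create_response(client, "some-model", [{"role": "user", "content": "hi"}])
# Follow-up: send only the tool output, chained to the response that made the call.
follow_up = create_response(
    client,
    "some-model",
    [{"type": "function_call_output", "call_id": "call_abc", "output": "42"}],
    previous_response_id=first.id,
)
# Next user turn: chain to the follow-up, not to the response that made the call.
next_turn = create_response(
    client,
    "some-model",
    [{"role": "user", "content": "something new"}],
    previous_response_id=follow_up.id,
)
```

Suppose the next turn is chained to the response that issued the call, not to the follow-up. That chain then ends in a `function_call` with no output, which would be consistent with the 400 error.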

After a function call, I am appending follow-up messages like this:

```python
# Append the model's function call message
follow_up_messages = [output_item]

# Append the function call output
follow_up_messages.append({
    "type": "function_call_output",
    "call_id": output_item.call_id,
    "output": str(return_val),
})

logger.info(f"output_item: {output_item}")

# Request a follow-up response
follow_up_response = st.session_state.open_ai.get_llm_responses(
    follow_up_messages,
    nexi_tools,
    None,
)
```
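If a response contains more than one `function_call` item, each one needs its own matching `function_call_output`. Below is a minimal sketch that assumes output items are either plain dicts or SDK objects that expose `type` and `call_id` attributes. `build_tool_outputs` and `run_tool` are hypothetical names and are not part of the SDK.

```python
from types import SimpleNamespace
from typing import Any, Callable


def _get(item: Any, key: str) -> Any:
    # Works for both plain dicts and SDK objects exposing attributes.
    return item.get(key) if isinstance(item, dict) else getattr(item, key, None)


def build_tool_outputs(output_items: list, run_tool: Callable[[Any], Any]) -> list:
    """Return one function_call_output item for every function_call item."""
    outputs = []
    for item in output_items:
        if _get(item, "type") == "function_call":
            outputs.append({
                "type": "function_call_output",
                "call_id": _get(item, "call_id"),
                "output": str(run_tool(item)),
            })
    return outputs


# Hypothetical response output: one message plus two function calls.
items = [
    SimpleNamespace(type="message", call_id=None),
    SimpleNamespace(type="function_call", call_id="call_1", name="xxx", arguments="{}"),
    SimpleNamespace(type="function_call", call_id="call_2", name="xxx", arguments="{}"),
]
outputs = build_tool_outputs(items, run_tool=lambda call: f"ran {call.name}")
```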

The problem:

After the function call, when I send a new input, I get this error:

```
*** Error occurred while question prompt: Error code: 400 - {'error': {'message': 'No tool output found for function call call_xxx.', 'type': 'invalid_request_error', 'param': 'input', 'code': None}}
```

It seems like the function call output isn’t being linked correctly, but I’m not sure what I’m missing.
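To narrow down which call the error refers to, the `input` list can be checked locally before it is sent. Below is a minimal sketch with a hypothetical `find_unanswered_calls` helper. It only sees the items in a single request's `input`. When `previous_response_id` is set, it cannot see calls made in earlier responses of the chain.

```python
from typing import Any


def _get(item: Any, key: str) -> Any:
    # Works for both plain dicts and SDK objects exposing attributes.
    return item.get(key) if isinstance(item, dict) else getattr(item, key, None)


def find_unanswered_calls(input_items: list) -> list:
    """Return call_ids of function_call items with no matching function_call_output."""
    answered = {
        _get(item, "call_id")
        for item in input_items
        if _get(item, "type") == "function_call_output"
    }
    return [
        _get(item, "call_id")
        for item in input_items
        if _get(item, "type") == "function_call" and _get(item, "call_id") not in answered
    ]


items = [
    {"type": "function_call", "call_id": "call_a"},
    {"type": "function_call_output", "call_id": "call_a", "output": "1"},
    {"type": "function_call", "call_id": "call_b"},
]
pending = find_unanswered_calls(items)  # call_b has no output yet
```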

Could someone please help me figure this out?

Thank you,

Binjan Iyer