Hello,

I am seeing unexpected behavior with logprobs when calling the Chat Completions endpoint. Even though I pass `logprobs=True`, the response intermittently comes back with `logprobs=None` in the `choices` list (see the sample output below). I cannot find any documentation or forum discussions describing a similar issue, so I would appreciate any suggestions on how to debug this further, or confirmation of whether it is a bug in the API.

Simple Example for Debugging

Using `openai` Python package version 1.97.0:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def call_openai(text):
    messages = [
        {"role": "system", "content": "Summarize the following text: "},
        {"role": "user", "content": text},
    ]

    response = client.chat.completions.create(
        model='gpt-4.1-2025-04-14',
        messages=messages,
        max_tokens=2000,
        temperature=0.5,
        logprobs=True,  # request per-token log probabilities
    )
    return response
```

And here is a sample response with the logprobs missing:

```
ChatCompletion(
    id='chatcmpl-BvmYYGXtfrpvdA573fpv9SAtCtrYx',
    choices=[
        Choice(
            finish_reason='stop',
            index=0,
            logprobs=None,
            message=ChatCompletionMessage(
                content='**Summary of the... {rest of summary omitted}',
                role='assistant'
            )
        )
    ],
    model='gpt-4.1-2025-04-14',
    usage=CompletionUsage(
        completion_tokens=879,
        prompt_tokens=50019,
        total_tokens=50898
    )
)
```
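To make the intermittent failure easy to spot in logs, a minimal check like the following can flag responses where logprobs are missing. It assumes the openai 1.x object layout, where `choices[0].logprobs.content` is a list of entries with `.token` and `.logprob` attributes; `extract_token_logprobs` is a hypothetical helper name, and the `SimpleNamespace` objects are stand-ins for a real response so the snippet runs offline.

```python
from types import SimpleNamespace
from typing import List, Optional, Tuple


def extract_token_logprobs(response) -> Optional[List[Tuple[str, float]]]:
    """Return (token, logprob) pairs for the first choice, or None if missing.

    Assumes the openai 1.x layout: choices[0].logprobs.content is a list of
    token entries, each with .token and .logprob attributes.
    """
    choice = response.choices[0]
    # logprobs can come back as None even when logprobs=True was requested
    if choice.logprobs is None or choice.logprobs.content is None:
        return None
    return [(entry.token, entry.logprob) for entry in choice.logprobs.content]


# Offline check with a stand-in object shaped like the sample response above
missing = SimpleNamespace(choices=[SimpleNamespace(logprobs=None)])
print(extract_token_logprobs(missing))  # → None
```

With a real response, `extract_token_logprobs(call_openai(text)) is None` identifies exactly the failing cases, which can then be logged together with `response.id` and `response.usage.prompt_tokens`.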

Experiments

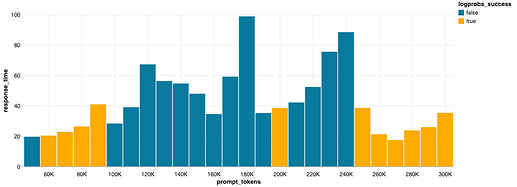

- I systematically varied the prompt length and logged, for each request, the response time and whether logprobs were present in the output.

- The attached charts plot prompt length against response time for various input texts, with each point colored by whether logprobs were present in the output.
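A sweep like the one described above could be sketched as follows. This is a hypothetical harness, not the exact script behind the charts: `sweep_prompt_lengths` and `fake_call` are illustrative names, prompts are produced by truncating a single base text by character count, and `call_fn` is any function shaped like `call_openai` above. The fake lets the loop be dry-run without API calls; its "token count" is just a word count.

```python
import time
from types import SimpleNamespace
from typing import Callable, Dict, List


def sweep_prompt_lengths(call_fn: Callable[[str], object],
                         base_text: str,
                         lengths: List[int]) -> List[Dict]:
    """Call call_fn on truncated copies of base_text and record each outcome."""
    rows = []
    for n_chars in lengths:
        text = base_text[:n_chars]
        start = time.perf_counter()
        response = call_fn(text)
        elapsed = time.perf_counter() - start  # wall-clock latency of the call
        rows.append({
            "prompt_chars": len(text),
            "prompt_tokens": response.usage.prompt_tokens,
            "seconds": elapsed,
            "has_logprobs": response.choices[0].logprobs is not None,
        })
    return rows


def fake_call(text):
    # Hypothetical stand-in for call_openai(); always returns logprobs
    return SimpleNamespace(
        usage=SimpleNamespace(prompt_tokens=len(text.split())),
        choices=[SimpleNamespace(logprobs=SimpleNamespace(content=[]))],
    )


rows = sweep_prompt_lengths(fake_call, "word " * 100, [10, 50, 200])
for row in rows:
    print(row)
```

For a real run, `call_openai` would replace `fake_call`, and the resulting rows can be loaded into a DataFrame to produce the length-vs-latency plot.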

Questions

- Has anyone else seen this issue, and are there any workarounds?

- Is there any documentation I am missing about the input text (for example, its length) causing logprobs to be omitted?

- Has anything changed recently that might have introduced this behavior?
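As a possible stopgap (not a fix), a retry wrapper could re-issue the request until logprobs come back. This is a sketch under assumptions: `call_with_logprobs_retry` and `flaky_fake` are hypothetical names, the linear backoff is an arbitrary choice, and each retry re-sends and is billed for the full prompt again (about 50k prompt tokens in the sample above).

```python
import time
from types import SimpleNamespace
from typing import Callable


def call_with_logprobs_retry(call_fn: Callable[[str], object], text: str,
                             max_attempts: int = 3, backoff_s: float = 1.0):
    """Re-issue the request until the first choice carries logprobs.

    Returns (response, attempts). The last response is returned even if its
    logprobs are still missing, so the caller decides how to handle it.
    """
    response = None
    for attempt in range(1, max_attempts + 1):
        response = call_fn(text)
        if response.choices[0].logprobs is not None:
            return response, attempt
        if attempt < max_attempts:
            time.sleep(backoff_s * attempt)  # linear backoff between attempts
    return response, max_attempts


# Dry run with a hypothetical fake that drops logprobs on the first call only
_calls = {"n": 0}


def flaky_fake(text):
    _calls["n"] += 1
    logprobs = None if _calls["n"] == 1 else SimpleNamespace(content=[])
    return SimpleNamespace(choices=[SimpleNamespace(logprobs=logprobs)])


resp, attempts = call_with_logprobs_retry(flaky_fake, "hello", backoff_s=0)
print(attempts)  # → 2
```

Note that with `temperature=0.5` a retried request can return a different summary, so the logprobs always describe the response that was actually kept, not the first attempt.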

Thank you for your help!