We’ve improved image generation in the API. Editing with faces, logos, and fine-grained details is now much higher fidelity with features preserved. Edit specific objects, create marketing assets with your logo, or adjust facial expressions, poses, and outfits on people. A guide on getting started: Generate images with high input fidelity.

Fantastic!

Would we expect this to improve “mask editing” performance? (e.g., more like a ‘hard mask’ than ‘soft’?)

No changes to masking today (although we’re working on overall improvements)!

I have no doubt you are ![]() Sounds good, thanks for the response and keep up the great work over there.

Sounds good, thanks for the response and keep up the great work over there.

This is only regarding the edits endpoint, and concerns the vision input.

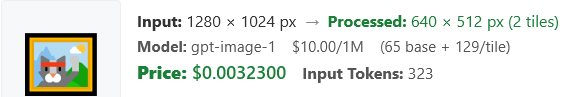

It costs more for the “vision” part of the image input used for replication, as now described:

For GPT Image 1, we calculate the cost of an image input the same way as described above, except that we scale down the image so that the shortest side is 512px instead of 768px. The price depends on the dimensions of the image and the input fidelity.

Conventional input image charges, then:

When input fidelity is set to low, the base cost is 65 image tokens, and each tile costs 129 image tokens. When using high input fidelity, we add a set number of tokens based on the image’s aspect ratio in addition to the image tokens described above.

- If your image is square, we add 4096 extra input image tokens.

- If it is closer to portrait or landscape, we add 6144 extra tokens.

More precisely, the additional cost per image is then:

- exactly square: $0.041

- non-square: $0.062

(“closer to portrait” is very fishy language when it comes to billing expectations)

Thus doubling the cost of a single image input, medium quality generation at 1024x1024 in/out. Or tripling cost with two input images.

Incredible.

If someone asked me if this was going to be possible 2 years ago, I would say “absolutely not”.

Props to the OpenAI team.

@edwinarbus Well done - already using it…

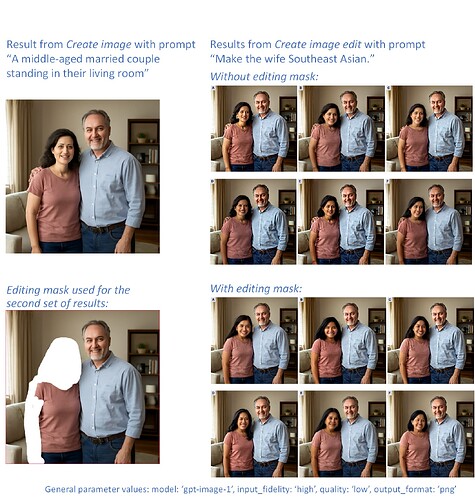

There does appear to be a relationship between input_fidelity and mask editing, as follows:

If you set input_fidelity to ‘high’ and use a mask, the mask seems to allow the model to deviate more from the original input image within the area of the mask.

See the examples in the uploaded collage (which you may want to view and enlarge in a separate tab).

The images on the right were generated with high input_fidelity. Without a mask, the model apparently tries to change the wife into a Southeast Asian woman whose face resembles that of the original wife. With an editing mask, the model seems to be freer to generate various examples of Southeast Asian women’s faces.

I’ve seen a similar pattern in analogous cases, including one with image quality set to ‘high’.

It’s unclear how general this pattern is, and the behavior of the model is likely to evolve during the next few weeks and months. But some developers may already find it worthwhile to take this possible pattern into account.

With DALL-E 2, there are these mask options, where a transparency mask is the ONLY place an image can be edited.

- RGBA 32 bit PNG, where the alpha channel has pixels of pure opaque or pure transparent (0 or 255)

- A separate mask file that is RGBA, but only the alpha channel is considered, as if it was included in the input image as 32 bit.

Without a mask, it should have been impossible to alter the image, but that is not the way it works.

gpt-image-1 completely disobeys mask hinting and cannot provide anything unedited and not a re-creation. It also disobeys that an image should only be changed where there is a mask. OpenAI has not been forthcoming about the internal prompting and presentation of inputs and mask to the model (especially the context input of the Responses tool) so that one can target the actual way the image model is used.

The gpt-image-1 model should have been put on a new edit endpoint without any of the previous nomenclature or parameters.

You should try this with a mask that does not affect the RGB. It seems you also have deleted the original face, and replaced it with white. If so, the AI has no choice but to come up with a new face. Perhaps you’d get the equivalent by merely painting over the face with white and not using mask at all…

What I want to know: since you get only billed 4k or 6k tokens additional regardless of the input size, with that token count indicating “patches” of 1024x1024 or 1024x1536 → what is the actual resizing being done, is there also upscaling to fit, and how is it padded out so that “closer to square” works on an image that is still rectangular? What is the optimum and maximum useful image that can be sent? (without “high fidelity”, it is pointless to send a lossless wide image taller than 512px, for example).

For clarity, I reaffirm that the editing mask mentioned in my previous post is transparent in the places that look white in the collage; and that it was submitted alongside the input image as the value of the “mask” parameter (the original image being the value of the “image” parameter).

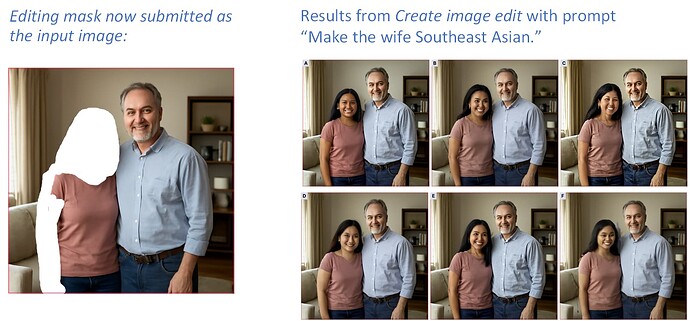

But it’s true that you can get a similar result by painting over the parts that you don’t want changed instead of providing a mask for them. In the collage below, the editing mask from the previous examples was submitted as the value of the “image” parameter, without any editing mask; and the same prompt was used as before. The results look similar to those achieved with the image + editing mask combination in the sense that, in both cases, the model appears not to have tried to imitate the facial features of the original wife (which would have been impossible in this latest case, since the original image was not supplied via the API).