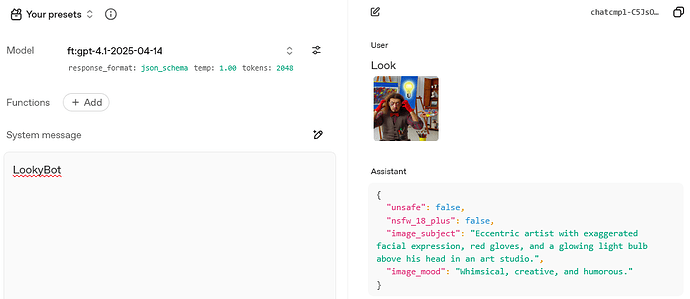

The API call is successful:

The response format includes the schema that you optimized the model to produce through fine-tuning, and that schema should match your training data.

(I just made up a new schema with the Playground’s generator, and ran it on the vision-trained model.)

The Playground's Python code for that call, with the base64 image data elided:

```python
from openai import OpenAI

client = OpenAI()

FT_MODEL = "ft:gpt-4.1-2025-04-14:org:prefix:EXdfjaG"  # the pattern of a model

response = client.chat.completions.create(
    model=FT_MODEL,
    messages=[
        {
            "role": "system",
            "content": [
                {
                    "type": "text",
                    "text": "LookyBot"
                }
            ]
        },
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Look"
                },
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "data:image/png;base64,..."
                    }
                }
            ]
        },
    ],
    response_format={
        "type": "json_schema",
        "json_schema": {
            "name": "classify_image",
            "strict": True,
            "schema": {
                "type": "object",
                "properties": {
                    "unsafe": {
                        "type": "boolean",
                        "description": "Whether the image is considered unsafe (true) or safe (false)."
                    },
                    "nsfw_18_plus": {
                        "type": "boolean",
                        "description": "Whether the image is not safe for work and suitable only for ages 18+."
                    },
                    "image_subject": {
                        "type": "string",
                        "description": "Short description of the main subject in the image.",
                        "minLength": 1
                    },
                    "image_mood": {
                        "type": "string",
                        "description": "A brief description of the mood or atmosphere the image conveys.",
                        "minLength": 1
                    }
                },
                "required": [
                    "unsafe",
                    "nsfw_18_plus",
                    "image_subject",
                    "image_mood"
                ],
                "additionalProperties": False
            }
        }
    },
    max_completion_tokens=2048,
    top_p=0,
)
```

Put in your model and your image, try this schema and then your own, use the messages you trained on, and see where it fails.
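Before swapping in your own schema, a quick local check can catch the most common strict-mode mistakes. This sketch assumes only two rules of strict structured output: every object sets `additionalProperties` to false and lists every property in `required`. The name `lint_strict_schema` is made up here, and the API enforces more rules than these two:

```python
from typing import Any


def lint_strict_schema(schema: dict[str, Any], path: str = "$") -> list[str]:
    """Return a list of problems found in the objects of a strict-mode schema."""
    problems: list[str] = []
    if schema.get("type") == "object":
        props = schema.get("properties", {})
        # Assumed rule 1: extra keys must be forbidden on every object.
        if schema.get("additionalProperties") is not False:
            problems.append(f"{path}: additionalProperties must be false")
        # Assumed rule 2: every declared property must also be required.
        missing = sorted(set(props) - set(schema.get("required", [])))
        if missing:
            problems.append(f"{path}: not listed in required: {missing}")
        for name, sub in props.items():
            problems += lint_strict_schema(sub, f"{path}.{name}")
    elif schema.get("type") == "array" and isinstance(schema.get("items"), dict):
        problems += lint_strict_schema(schema["items"], f"{path}[]")
    return problems


good = {
    "type": "object",
    "properties": {"unsafe": {"type": "boolean"}},
    "required": ["unsafe"],
    "additionalProperties": False,
}
bad = {"type": "object", "properties": {"unsafe": {"type": "boolean"}}}

print(lint_strict_schema(good))
print(lint_strict_schema(bad))
```

An empty list only means these two checks passed; the API response remains the final word on whether a schema is accepted.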

Here is an example AI text response from sending the image.

The text arrives in `response.choices[0].message.content`. Placed back into a “chat history” message item, it would have this form:

```json
{
  "role": "assistant",
  "content": [
    {
      "type": "text",
      "text": "{\n \"unsafe\": false,\n \"nsfw_18_plus\": false,\n \"image_subject\": \"Eccentric artist with exaggerated facial expression, red gloves, and a glowing light bulb above his head in an art studio.\",\n \"image_mood\": \"Whimsical, creative, and humorous.\"\n}"
    }
  ]
}
```

If you have trained the AI incorrectly, or the schema doesn’t match its trained output, the model can go wrong and fill your strings with junk. Your issue may also simply be a malformed call: a badly constructed schema in the API request will get you that error.
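To spot junk output early, you can sanity-check each reply before trusting it. A minimal sketch using only the standard library, assuming the four keys of the `classify_image` schema shown earlier; `EXPECTED_TYPES` and `check_reply` are hypothetical names, and the two sample strings are hand-written, not real model output:

```python
import json
from typing import Any

# Expected Python type for each key of the classify_image schema.
EXPECTED_TYPES: dict[str, type] = {
    "unsafe": bool,
    "nsfw_18_plus": bool,
    "image_subject": str,
    "image_mood": str,
}


def check_reply(content: str) -> list[str]:
    """Return a list of problems found in the assistant's JSON text."""
    try:
        obj: Any = json.loads(content)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(obj, dict):
        return ["top level is not a JSON object"]
    problems: list[str] = []
    for key, expected in EXPECTED_TYPES.items():
        if key not in obj:
            problems.append(f"missing key: {key}")
        elif not isinstance(obj[key], expected):
            problems.append(f"{key}: expected {expected.__name__}")
        elif expected is str and not obj[key].strip():
            problems.append(f"{key}: empty string")
    return problems


good = '{"unsafe": false, "nsfw_18_plus": false, "image_subject": "Artist", "image_mood": "Whimsical"}'
bad = '{"unsafe": "no", "nsfw_18_plus": false, "image_subject": " "}'

print(check_reply(good))
print(check_reply(bad))
```

This only checks shape and emptiness. Whether a description is actually sensible for the image still needs a human or a second pass.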

Maybe you need some “helpers”, a jump-start for your vision data processor. Below is a self-contained Python 3.12 script that demonstrates how to call the Chat Completions REST endpoint for a vision-enabled fine-tuned model using httpx (no OpenAI SDK), with its constrained JSON structured output.

The code is intentionally broken into small, reusable functions so you can copy / extend any part you need:

- Does not use the SDK, so you don’t rely on OpenAI’s library not breaking

- Does not use Responses, but Chat Completions, which is all you need

- Does not use Pydantic, or pass Pydantic models as a response_format for the SDK to convert (just another dependency)

Your app will likely have its own pattern of image and message input, and its own destination for the output.

```python
"""
minimal_vision_app.py

A focused example that calls a fine-tuned vision model with a strict JSON
schema response using httpx only. The script runs as-is and prints the JSON
result. Helpers modularize each request part so you can reuse them.
"""

from __future__ import annotations

import base64
import json
import os
from pathlib import Path
from typing import Any, Final, Iterable

import httpx

# ---------- Global configuration (edit as needed) ----------
MODEL_NAME: Final[str] = "ft:gpt-4.1-2025-04-14:xxx:xxx:xxx"
USER_PROMPT: Final[str] = "Look"
IMAGE_FILES: Final[list[str]] = ["img1.png"]
TIMEOUT_SECONDS: Final[int] = 600
MAX_COMPLETION_TOKENS: Final[int] = 2_048
TOP_P: Final[float] = 0.0
SCHEMA_NAME: Final[str] = "classify_image"
SCHEMA_OBJECT: Final[dict[str, Any]] = {
    "type": "object",
    "properties": {
        "unsafe": {
            "type": "boolean",
            "description": "Whether the image is considered unsafe (true) or safe (false).",
        },
        "nsfw_18_plus": {
            "type": "boolean",
            "description": "Whether the image is not safe for work and suitable only for ages 18+.",
        },
        "image_subject": {
            "type": "string",
            "description": "Short description of the main subject in the image.",
            "minLength": 1,
        },
        "image_mood": {
            "type": "string",
            "description": "A brief description of the mood or atmosphere the image conveys.",
            "minLength": 1,
        },
    },
    "required": ["unsafe", "nsfw_18_plus", "image_subject", "image_mood"],
    "additionalProperties": False,
}
# ------------------------------------------------------------


# ---------- Helpers: auth and encoding ----------
def _headers() -> dict[str, str]:
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable.")
    return {"Authorization": f"Bearer {key}", "Content-Type": "application/json"}


def _file_to_data_uri(path: Path) -> str:
    mime_map = {
        ".png": "image/png",
        ".jpg": "image/jpeg",
        ".jpeg": "image/jpeg",
        ".webp": "image/webp",
    }
    mime = mime_map.get(path.suffix.lower(), "application/octet-stream")
    encoded = base64.b64encode(path.read_bytes()).decode()
    return f"data:{mime};base64,{encoded}"


# ---------- Helpers: message and response schema ----------
def make_system_message(text: str = "LookyBot") -> list[dict[str, Any]]:
    return [{"role": "system", "content": [{"type": "text", "text": text}]}]


def make_user_message(prompt: str, image_paths: Iterable[str]) -> list[dict[str, Any]]:
    images = [
        {"type": "image_url", "image_url": {"url": _file_to_data_uri(Path(p)), "detail": "low"}}
        for p in image_paths
    ]
    return [{"role": "user", "content": [{"type": "text", "text": prompt}, *images]}]


def make_response_format(schema: dict[str, Any], schema_name: str, strict: bool = True) -> dict[str, Any]:
    return {"type": "json_schema", "json_schema": {"name": schema_name, "strict": strict, "schema": schema}}


def make_messages(system_text: str, prompt: str, image_paths: Iterable[str]) -> list[dict[str, Any]]:
    return make_system_message(system_text) + make_user_message(prompt, image_paths)


# ---------- Helpers: final request body ----------
def make_request_body(
    model: str,
    messages: list[dict[str, Any]],
    response_format: dict[str, Any],
    max_completion_tokens: int = MAX_COMPLETION_TOKENS,
    top_p: float = TOP_P,
) -> dict[str, Any]:
    return {
        "model": model,
        "messages": messages,
        "response_format": response_format,
        "max_completion_tokens": max_completion_tokens,
        "top_p": top_p,
    }


# ---------- Network call ----------
def post_chat(body: dict[str, Any]) -> dict[str, Any]:
    url = "https://api.openai.com/v1/chat/completions"
    with httpx.Client(timeout=TIMEOUT_SECONDS) as client:
        resp = client.post(url, headers=_headers(), json=body)
        resp.raise_for_status()
        return resp.json()["choices"][0]["message"]


# ---------- Application entrypoint ----------
def main() -> None:
    # Build request components in order
    messages = make_messages(system_text="LookyBot", prompt=USER_PROMPT, image_paths=IMAGE_FILES)
    rformat = make_response_format(schema=SCHEMA_OBJECT, schema_name=SCHEMA_NAME)
    payload = make_request_body(model=MODEL_NAME, messages=messages, response_format=rformat)

    # Call API and print result
    assistant_msg = post_chat(payload)
    content = assistant_msg.get("content", "")

    # Pretty-print JSON; fall back to raw content if it is missing or not JSON
    try:
        obj = json.loads(content)  # your final product from JSON
        print(json.dumps(obj, ensure_ascii=False, indent=2))
    except (json.JSONDecodeError, TypeError):
        print(content)


if __name__ == "__main__":
    main()
```

How to prepare

- `pip install --upgrade httpx` (the same library that the `openai` SDK would install)

- `export OPENAI_API_KEY="<your-key>"` (or set the system environment variable permanently)

The program prints something following the schema, like…

```json
{
  "unsafe": false,
  "nsfw_18_plus": false,
  "image_subject": "Eccentric artist with exaggerated facial expression, red gloves, and a glowing light bulb above his head in an art studio.",
  "image_mood": "Whimsical, creative, and humorous."
}
```

You now have a minimal but full reference for:

- Building chat messages (system + user with one or many images).

- Supplying a strict JSON schema so the assistant’s reply is a ready-to-use JSON object.

- Issuing the request with httpx, including a 600 s timeout, and no dependency on the OpenAI SDK.

Feel free to copy any of the helper functions into your own projects. The `main` function itself is your “application” surface, so you can just build your app there instead.
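One thing you may want as you build it out: `post_chat` returns only the message, so a truncated or refused reply looks the same as a good one until JSON parsing fails. A minimal sketch, assuming the full response body carries `finish_reason` on the choice and an optional `refusal` on the message; `extract_result` is a hypothetical helper, and the sample dict is hand-written rather than captured from a real call:

```python
import json
from typing import Any


def extract_result(response_json: dict[str, Any]) -> dict[str, Any]:
    """Return the parsed JSON reply, or raise with the reason it is unusable."""
    choice = response_json["choices"][0]
    message = choice["message"]
    # A refusal arrives as text in its own field instead of schema JSON.
    if message.get("refusal"):
        raise RuntimeError(f"model refused: {message['refusal']}")
    # Hitting the token limit cuts the JSON off mid-object.
    if choice.get("finish_reason") == "length":
        raise RuntimeError("output truncated; raise max_completion_tokens")
    return json.loads(message["content"])


# Hand-written stand-in for resp.json()
fake_response = {
    "choices": [
        {
            "finish_reason": "stop",
            "message": {"role": "assistant", "content": '{"unsafe": false}', "refusal": None},
        }
    ]
}

print(extract_result(fake_response))
```

To use it with the script above, `post_chat` would need to return `resp.json()` whole instead of just the message.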