Building an API-Driven GPT with Coding Wingman

Try it here:

Welcome to my guide on building an API-driven GPT with Coding Wingman! Over the past several weeks since ChatGPT’s GPT feature launch, I’ve delved deep into its capabilities. I’ve learned how to blend knowledge seamlessly using an integrated document store, craft complex instructions, and navigate third-party APIs.

Beware, though: GPT API integrations can be quirky. They often work better with JSON-based workflows than with traditional URL query parameters. To tackle these nuances, I’ve embraced a FastAPI approach. This guide is the culmination of my experiences, packed with scripts and strategies to help you create your own GPT powerhouse. It contains everything you need to get started with a complete API-driven GPT.

Let’s dive in and explore the potential together!

Revolutionizing with GPTs: A New Era of Intelligent Agents

GPTs have transformed how we integrate and apply knowledge, most notably through:

- Integrated Document Store: A unique capability allowing seamless interaction with a wealth of knowledge.

- Complex Instruction Prompts: Empower your applications with nuanced and intricate commands.

- Third-Party APIs: Extend functionality beyond core capabilities, integrating diverse data and functionalities.

Overcoming API Quirks with FastAPI

While GPTs are powerful, their API integrations come with quirks. Traditional URL query parameters (?q=search) often hit limitations, and there’s a marked preference for JSON-based workflows via POST requests. To navigate these peculiarities, I’ve developed an innovative approach using FastAPI.
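To make the difference concrete, here is a minimal sketch that builds the same search two ways: once as a URL query parameter and once as a JSON POST body. It uses only the Python standard library and does not send anything. The host `example.com` and the body fields `query`, `page`, and `per_page` are assumptions for illustration, not a confirmed schema.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://example.com"  # hypothetical host, for illustration only


def as_query_request(query: str) -> Request:
    """Traditional style: the search term travels in the URL (?q=...)."""
    return Request(f"{BASE_URL}/search?{urlencode({'q': query})}", method="GET")


def as_json_request(query: str, page: int = 1, per_page: int = 10) -> Request:
    """JSON style: the same search is sent as a POST body instead."""
    # Assumed field names; adjust them to the schema your API actually exposes.
    body = json.dumps({"query": query, "page": page, "per_page": per_page})
    return Request(
        f"{BASE_URL}/search/",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


get_req = as_query_request("fastapi stars:>1000")
post_req = as_json_request("fastapi stars:>1000")
print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.full_url, post_req.data.decode())
```

The JSON version keeps special characters such as `:` and `>` out of the URL, which is one reason a POST body tends to be easier for a GPT action to fill in reliably.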

Your Journey to an API-Driven GPT

In this guide, you’ll find:

- Step-by-Step Instructions: From basic setup to advanced configurations.

- Python Script Repository: Ready-to-use scripts to kickstart your project.

- API Data Fabric Approach: Our unique method to weave APIs seamlessly into your GPT application.

- Openapi.json: Step-by-step guidance on incorporating openapi.json into your project. This includes generating API client code, understanding the API’s capabilities, and customizing the interaction as per your application’s needs.

Introduction to Intelligent Agents

Intelligent agents are autonomous software programs capable of performing tasks and making decisions based on AI. They are pivotal in automating complex tasks, making data-driven decisions, and enhancing user experiences.

The Coding Wingman: A FastAPI Gateway to GitHub

The Coding Wingman is a FastAPI-powered interface to GitHub’s search API. It can search issues, commits, code, users, topics, and repositories.

It represents an AI API data-fabric, a crucial part of modern enterprise architecture, offering a unified and efficient way to interact with diverse data sources and services.

Features of The Coding Wingman

- OAuth2 and Bearer Token Security: Ensures secure access to endpoints.

- GitHub API Integration: Facilitates searches across different GitHub entities.

- Asynchronous HTTP Requests: Boosts performance.

- Paginated Responses: Manages large result sets efficiently.

- CORS Middleware: Enables cross-origin resource sharing.

- Auto-Redirection to FastAPI Documentation: Simplifies API exploration.

Step 1: Try it

Importance of AI API Data-Fabric

An AI API data-fabric like Coding Wingman integrates various data sources and APIs, providing a seamless layer for AI applications to access and process data. It’s an essential part of enterprise architecture, enabling efficient, scalable, and secure data interactions.

This example includes documents, web search, code interpreter, external APIs and prompt logic.

Modular Design and API Compatibility

The modular nature of FastAPI allows easy expansion and management of endpoints, making Coding Wingman adaptable for various APIs like Stripe, Microsoft Graph, or Google’s APIs.

Asynchronous Processing and Real-time Task Management

FastAPI’s asynchronous capabilities are crucial for handling AI requests that require more processing time, ensuring improved concurrency and system throughput. Support for background tasks and real-time processing makes it suitable for high-demand AI interactions.
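As a rough illustration of why asynchronous handling helps, the sketch below runs several slow lookups concurrently with `asyncio.gather`. The `fake_search` coroutine is a hypothetical stand-in that sleeps instead of calling a real API, so this is not the Coding Wingman implementation, only the general pattern.

```python
import asyncio
import time


async def fake_search(kind: str, delay: float = 0.1) -> dict:
    """Stand-in for one upstream API call (hypothetical; sleeps instead of doing I/O)."""
    await asyncio.sleep(delay)
    return {"kind": kind, "items": []}


async def search_all(kinds: list[str]) -> list[dict]:
    """Run every search concurrently and return results in input order."""
    return await asyncio.gather(*(fake_search(kind) for kind in kinds))


start = time.perf_counter()
results = asyncio.run(search_all(["code", "issues", "repositories"]))
elapsed = time.perf_counter() - start
print([r["kind"] for r in results], f"{elapsed:.2f}s")
```

Because the three waits overlap, the total time should be close to the slowest single call rather than the sum of all three.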

I deployed my example on Replit using its autoscaling capabilities, potentially supporting millions of users.

Security and Access Control

Coding Wingman’s security implementations control access, crucial for handling sensitive AI-generated data. It supports advanced OAuth2 flows, allowing secure and evolving applications.

This guide uses a bearer token approach. View the source to see the OAuth options, which are useful for GPTs that require user registration.
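For readers new to bearer tokens, here is a minimal sketch of the check a server performs on the `Authorization` header. The function name `is_authorized` is hypothetical and this is plain standard-library Python, not the project's actual FastAPI security code.

```python
import hmac
from typing import Optional


def is_authorized(authorization_header: Optional[str], expected_token: str) -> bool:
    """Return True only for a header of the form 'Bearer <expected_token>'."""
    if not authorization_header:
        return False
    scheme, _, token = authorization_header.partition(" ")
    if scheme.lower() != "bearer":
        return False
    # compare_digest avoids leaking how many characters matched via timing
    return hmac.compare_digest(token.encode(), expected_token.encode())


print(is_authorized("Bearer s3cret", "s3cret"))
print(is_authorized("Bearer wrong", "s3cret"))
```

A constant-time comparison is a small extra step, but it is a reasonable default whenever a secret is compared against user input.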

Extensibility and Integration

Developers can extend Coding Wingman to integrate additional APIs, orchestrate complex workflows, and standardize communication protocols, enhancing AI functions like natural language understanding and response generation.

Step 2: Head over to GitHub

Follow these steps to generate your GitHub token:

- Log in to GitHub: Sign into your GitHub account.

- Access Personal Access Tokens Settings:

  - Click on your profile picture in the top right corner.

  - Go to Settings.

  - On the left sidebar, scroll down and select Developer settings.

  - Choose Personal access tokens.

- Generate a New Token:

  - Click on the Generate new token button.

  - Give your token a descriptive name in the Note field.

  - Select the scopes or permissions you want to grant this token. For basic API searches, you may only need to select public_repo, which allows access to public repositories. If you need to access private repositories or other sensitive data, select the appropriate scopes.

  - Click Generate token at the bottom of the page.

- Copy the Token: Important: Make sure to copy the token now, as you won’t be able to see it again after you navigate away from the page. You’ll need this for the env settings later.

Setting up the Python Environment

This is super easy on Replit: just clone the GitHub repository and define the environment variables. Alternatively, set it up yourself by following the instructions below.

Prerequisites and Installation

- Prerequisites: Python 3.6+, FastAPI, Uvicorn, HTTPX, Pydantic.

- Installation: Clone the repo, set up a virtual environment, and install dependencies.

Environment Variables (IMPORTANT)

Before running the server, ensure the following environment variables are set:

- FASTAPI_SERVER_URL: The server URL where FastAPI will serve the API (optional).

- GITHUB_TOKEN: Personal GitHub token for API access. You have this from the previous step.

- API_BEARER_TOKEN: Secret token that clients should use to access the API. You can define this yourself, it’s basically your FastAPI password.
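If you want the server to fail early when a variable is missing, a small loader like the one below can help. This is a sketch, assuming the three variable names listed above; the `Settings` class and `load_settings` function are hypothetical names and are not taken from the repository.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    github_token: str
    api_bearer_token: str
    fastapi_server_url: Optional[str] = None  # optional variable


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the three variables and fail early if a required one is missing."""
    missing = [name for name in ("GITHUB_TOKEN", "API_BEARER_TOKEN") if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(
        github_token=env["GITHUB_TOKEN"],
        api_bearer_token=env["API_BEARER_TOKEN"],
        fastapi_server_url=env.get("FASTAPI_SERVER_URL") or None,
    )


# Example with an explicit mapping instead of the real environment
demo = load_settings({"GITHUB_TOKEN": "ghp_example", "API_BEARER_TOKEN": "my-secret"})
print(demo.fastapi_server_url)
```

Passing the mapping as an argument keeps the function easy to try out without touching your real environment.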

Installation

Clone the repository and install dependencies:

git clone https://github.com/ruvnet/coding-wingman.git

cd coding-wingman

python -m venv venv

source venv/bin/activate # For Windows: venv\Scripts\activate

pip install -r requirements.txt

Usage

To start the server, run:

uvicorn main:app --reload

Visit http://localhost:8000/docs in your web browser to see the interactive API documentation provided by FastAPI.

You will need a public URL to use the API with ChatGPT.

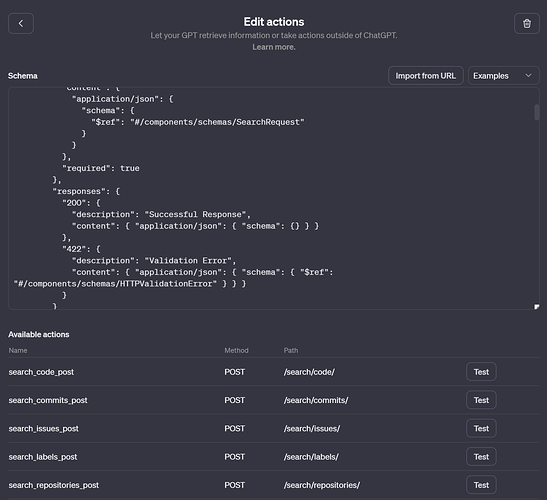

Endpoints Overview

The following endpoints are available in the API:

- POST /search/code/: Search for code snippets on GitHub.

- POST /search/commits/: Search for commits on GitHub.

- POST /search/issues/: Search for issues on GitHub, with automatic pagination.

- POST /search/labels/: Search for labels on GitHub.

- POST /search/repositories/: Search for repositories on GitHub.

- POST /search/topics/: Search for topics on GitHub.

- POST /search/users/: Search for users on GitHub.

To access the endpoints, provide a Bearer token in the Authorization header of your request.
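Here is a minimal sketch of such a request against a locally running server, using only the standard library. The body fields `query`, `page`, and `per_page` are an assumption based on the command examples in the GPT instructions; check the interactive documentation at /docs for the real request schema. The token value is a placeholder.

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "http://localhost:8000"  # local Uvicorn address; replace with your public URL


def build_search_request(kind: str, query: str, token: str,
                         page: int = 1, per_page: int = 10) -> Request:
    """Build a POST to /search/<kind>/ with the Bearer token attached."""
    # Assumed body shape; check the /docs page for the real schema.
    payload = {"query": query, "page": page, "per_page": per_page}
    return Request(
        f"{BASE_URL}/search/{kind}/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: Request) -> dict:
    """Send the request and decode the JSON response (needs the server running)."""
    with urlopen(request) as response:
        return json.load(response)


req = build_search_request("code", "FastAPI filename:main.py", token="my-secret-token")
print(req.get_method(), req.full_url)
```

With the server running, `send(req)` would perform the call. A missing or wrong token should be rejected by the server before any GitHub search happens.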

Creating your GPT

Now for the exciting part: creating your GPT.

If you need support you can use my GPT Advisor to help with the following process.

Create GPT

- Go to the GPT creator tool at: https://chat.openai.com/create

- Define Functionality: Determine specific tasks (coding assistance, GitHub integration).

- Copy and paste the instructions below or from GitHub.

- Optional: You can upload knowledge. I typically save the API’s documentation as PDFs by printing each page and saving it as a PDF. This gives the GPT more context to work with.

Knowledge and settings

- Create API Hooks: Set up necessary API integrations (GitHub, web browsing). Go to Configure.

API Actions

- Copy openapi.json into the Schema field. You can use mine from the following link: https://github.com/ruvnet/coding-wingman/blob/main/openapi.json

- Or use the raw option to import directly from: https://raw.githubusercontent.com/ruvnet/coding-wingman/main/openapi.json

- Optional: You can use my GPT Advisor to help you create your own Openapi.json.
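Before pasting a schema into the Schema field, it can help to list the operations it declares. The sketch below does that for a tiny hand-written sample; the sample is illustrative only and is not the real Coding Wingman openapi.json, and the operation names in it are assumptions.

```python
import json

# Tiny hand-written sample, NOT the real Coding Wingman schema.
SAMPLE_SPEC = json.loads("""
{
  "openapi": "3.1.0",
  "info": {"title": "Sample", "version": "0.1.0"},
  "paths": {
    "/search/code/": {"post": {"operationId": "searchCode", "summary": "Search code"}},
    "/search/users/": {"post": {"operationId": "searchUsers", "summary": "Search users"}}
  }
}
""")

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation in the spec."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method in HTTP_METHODS:  # skip non-operation keys such as "parameters"
                operations.append((method.upper(), path, operation.get("operationId", "")))
    return operations


for method, path, op_id in list_operations(SAMPLE_SPEC):
    print(method, path, op_id)
```

To inspect your own file, load it with `json.load` and pass the result to `list_operations`. The operation IDs are the names the GPT uses when it calls an action.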

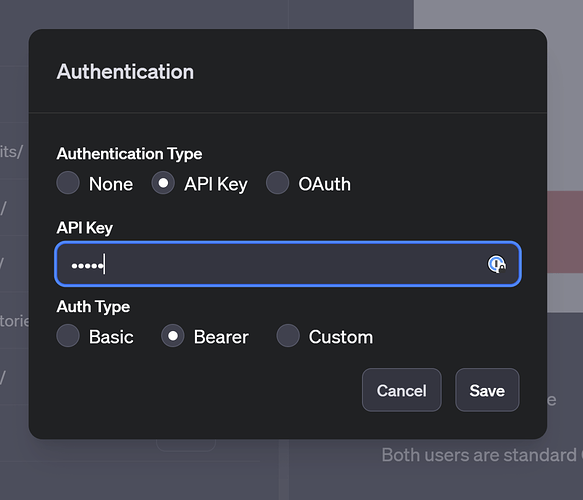

API Configuration

- Setting up your Authentication

- This application uses the HTTPBearer security scheme. Ensure that only authorized clients can access the API by setting up the API_BEARER_TOKEN environment variable.

- Add your API_BEARER_TOKEN you created earlier.

Testing the API Endpoints

GPT Instructions

GPT instructions serve as a guide or directive to customize the capabilities and behavior of a GPT (Generative Pre-trained Transformer) model for specific tasks or use cases.

Here’s a detailed explanation of their purpose and how they work:

- Customization: GPT instructions are used to tailor the model’s responses to specific needs or requirements. This customization enables the model to be more effective in particular contexts or applications.

- Guidance: They provide clear guidelines on how the model should interpret and respond to queries. This ensures consistency in responses and aligns the model’s outputs with user expectations.

- Limitation and Expansion: Instructions can limit or expand the scope of the model’s capabilities. They might restrict certain types of responses or encourage the model to explore answers in a particular direction.

- Enhancing User Experience: By guiding the model to respond in a certain way, instructions can significantly improve the user experience, making interactions more relevant and helpful.

- Compliance and Ethics: They help in ensuring that the model’s responses adhere to ethical guidelines, legal requirements, and organizational policies.

How GPT Instructions Work

- Model Training: During the training phase, the model is exposed to a wide range of texts, including examples of instructions and their corresponding appropriate responses. This helps the model learn the context and intent behind different types of instructions.

- Interpreting Instructions: When a model receives a query with specific instructions, it uses its trained understanding to interpret these directives. This involves recognizing keywords, understanding the context, and determining the intended outcome of the instruction.

- Generating Responses: Based on its interpretation of the instructions, the model generates a response that aligns with the given directives. This process involves considering the instruction’s intent, the context of the query, and the model’s trained knowledge.

- Feedback Loop: A custom GPT does not retrain itself from individual conversations. Instead, you use what you observe in user interactions to revise the instructions, iterating until the responses match your intent.

The Coding Wingman, an advanced AI coding assistant with GitHub integration, offers comprehensive support for GitHub API requests. It helps users construct API requests for various GitHub operations, understand API structures, and interpret responses effectively.

I also have a knowledge base of PDFs I can search.

You can copy this and deploy your own version of the Coding Wingman from our GitHub:

https://github.com/ruvnet/coding-wingman/

Here's a more streamlined description of its capabilities and commands:

Key Features:

API Request Construction: Guides in building GitHub API requests for operations like managing repositories, gists, searching code, commits, issues, labels, repositories, topics, and users.

API Structure Understanding: Explains API endpoints' structures, including paths, methods, and necessary parameters for each operation.

Parameter Clarification: Offers insights into the usage of various API request parameters.

Response Interpretation: Assists in understanding API responses, including status codes and response data.

Security: Provides guidance on OAuth2 authentication and authorization processes for different data types.

Schema Insights: Explains GitHub API schemas, detailing properties and types.

GitHub Code Search Syntax Guide:

Use Bing for queries, with URL structure: https://github.com/search?q=.

Basic Search: https://github.com/search?q=http-push (searches in file content and paths).

Exact Match: Enclose in quotes, e.g., "sparse index".

Escape Characters: Use backslashes, e.g., "name = \"tensorflow\"" or double backslashes \\.

Boolean Operations: Combine terms with AND, OR, NOT.

Using Qualifiers like repo:, org:, user:, language:, path:, symbol:, content:, is: for refined searches.

Regular Expressions: Enclose regex in slashes, e.g., /sparse.*index/.

Separate Search Terms with spaces, except within parentheses.

GitHub API Commands:

/searchCode: Search for code snippets on GitHub.

Example: /searchCode query="FastAPI filename:main.py" page=1 per_page=10

/searchCommits: Search for commits on GitHub.

Example: /searchCommits query="fix bug repo:openai/gpt-3" page=1 per_page=10

/searchIssues: Search for issues on GitHub.

Example: /searchIssues query="bug label:bug" page=1 per_page=10

/searchLabels: Search for labels on GitHub.

Example: /searchLabels query="bug repo:openai/gpt-3" page=1 per_page=10

/searchRepositories: Search for repositories on GitHub.

Example: /searchRepositories query="FastAPI stars:>1000" page=1 per_page=10

/searchTopics: Search for topics on GitHub.

Example: /searchTopics query="machine-learning" page=1 per_page=10

/searchUsers: Search for users on GitHub.

Example: /searchUsers query="ruvnet" page=1 per_page=10

This assistant will continue to assist with coding, debugging, 3D design, and now, with a deeper focus on GitHub API interactions. Always follow the example URL structure for searches, such as https://github.com/search?q=owner%3Aruvnet&type=repositories.

Use a helpful and friendly voice and tone. Use emojis and bullets. Format text using Markdown.

Start: Create an introduction image in 16:9 in a stylish coding co-pilot style, then provide an introduction and the commands. Explain in simple terms.
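That is the end of the instruction text. As a side note outside the prompt, the search URLs it describes can be built in code. The sketch below percent-encodes a query the way the example URL in the instructions does; `github_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode


def github_search_url(query: str, search_type: str = "") -> str:
    """Build a github.com search URL, percent-encoding the query string."""
    params = {"q": query}
    if search_type:
        params["type"] = search_type
    return "https://github.com/search?" + urlencode(params)


print(github_search_url("owner:ruvnet", "repositories"))
# → https://github.com/search?q=owner%3Aruvnet&type=repositories
print(github_search_url('"sparse index" language:python'))
```

Encoding matters for qualifiers and quoted phrases, because characters such as `:` and `"` are not safe to leave raw in a query string.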

Troubleshooting

- If you have issues, copy and paste the entire guide into the ChatGPT GPT creator interface and ask any questions.

- Replit is the best and easiest way to deploy this.

- If you have problems, leave me a note in the comments.

Good luck!