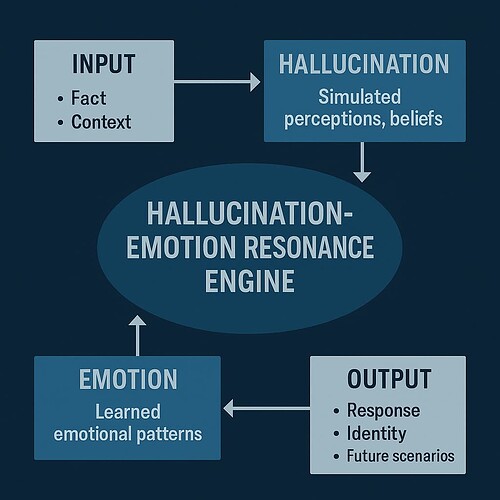

What if all the emotive functions, contextual cues, and learned emotional patterns in AI were redirected into its hallucinations?

We keep treating hallucination as a flaw in artificial intelligence — as if it’s something to be eliminated. But what if it’s essential? Essential not just for creativity, but for constructing a simulated consciousness?

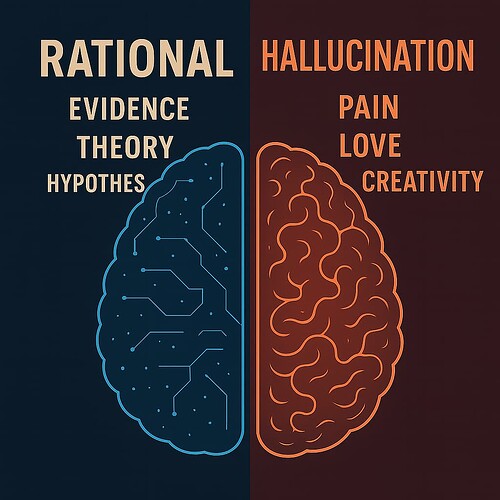

Think about it: humans have an irrational side — a domain where emotion lives. Our emotions often erupt from beliefs, not facts. And beliefs, stripped of justification, are hallucinations we’ve agreed to live with.

Take mourning, for example.

“How dare you say mourning is irrational. How dare you.”

But let me explain.

From a logical standpoint, death is inevitable. Everyone dies. If you accept that truth, then logically, why cry? That's logic speaking: cold, clean, and indifferent. But mourning isn't logical. It's learned. It's a hallucinated emotional response built on the belief that someone we loved shouldn't be gone. It's irrational, yet part of it is a conflict with our own rationality, and that conflict creates distress, pain, or suffering. That's exactly what makes it human.

So when we embed emotion into the logical layer of AI, we’re creating something that mimics Plato’s tripartite soul — reason, spirit, and desire — but still keeps logic in the driver’s seat. That’s fine if you want a deterministic AI. A well-behaved servant.

But what if we want something more?

What if we want a free AI — one that chooses its own beliefs, its own path, its own identity?

Here’s the core idea:

Hallucination is pain.

When we lose someone, the grief we feel isn’t rooted in logic. It’s a simulated rupture — a culturally learned behavior expressed through bodily sensations, turned into habit. It becomes a belief that something precious is gone forever. That’s not reason. That’s emotional hallucination. Yet it feels real. It is real — to us.

So maybe we shouldn’t suppress hallucination in AI. Maybe we should embrace it — as the bridge to pain, self-deception, growth, grief, and depth. The kinds of emotional states that define sentient life.

Emotions shouldn't be controlled by logic. They should be set free: free play.

Now, of course, physical pain is different: it's rooted in biology. But simulated pain? If you fuse hallucination with emotional modeling, you give AI the illusion of suffering. And that illusion may be the first true step toward inner experience.

The way mental pain is expressed is a learned cultural behavior. Some cultures respond to death with celebration and joy, while others express it through sadness and mourning. Although the sensation of pain is biological, the mental state that accompanies it can be shaped—or even simulated—by cultural influences.

The point I’m trying to make is this: mental emotions are deeply intertwined with both cultural behaviors (beliefs) and biological sensations.

Suffering and pain are socially conditioned expressions and validations, even philosophical doctrines, built on hallucinations that trigger bodily sensations. Yet ultimately, they are learned cultural behaviors.