I’ve noticed a potential inconsistency between the results from the Image Token Calculation Reference algorithm and those from the calculation tool provided in the official FAQ for gpt-5-mini image inputs.

The inconsistency is:

The multiplier given in the documentation, in step D of the reference algorithm:

D. Apply a multiplier based on the model to get the total tokens.

| Model | Multiplier |
|---|---|
| gpt-5-mini | 1.62 |
| gpt-5-nano | 2.46 |
| gpt-4.1-mini | 1.62 |
| gpt-4.1-nano | 2.46 |

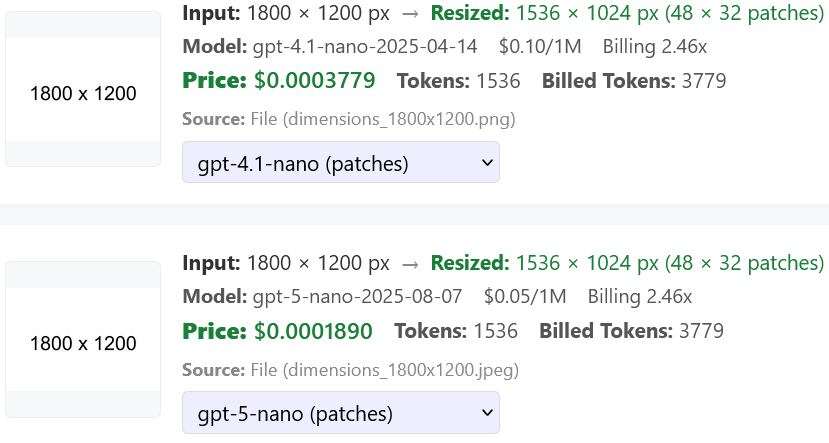

Expanding the calculator at the bottom of the public pricing page (not the platform pricing page, which says nothing about this) shows these figures in use:

| Model | Multiplier |
|---|---|
| gpt-5-mini | 1.2 |
| gpt-5-nano | 1.5 |

If the models have the same input multiplier and the same patch algorithm, then reasoning shouldn’t affect input cost, and any difference should be stated transparently. My own calculator, loaded with the documented values:

Playground input token cost

5-nano – 2334t:

4.1-nano – 3814t

(2 message containers and text: overhead of 35 text tokens with gpt-4.1-nano)

Going by the OpenAI calculator, 1536 tokens x 1.5 = 2304 tokens, vs 2334 billed for context. The 30-token remainder is in line with message overhead, so 1.5 is right.
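The arithmetic above can be checked with a short sketch. It assumes the 1536 base image tokens from the post and rounds up after applying the multiplier; the rounding rule is my assumption, and `image_tokens` is a hypothetical helper, not anything from the API.

```python
import math

BASE = 1536  # base image token (patch) count used in the post


def image_tokens(base: int, multiplier: float) -> int:
    """Apply a model multiplier to the base count.

    Rounding up is an assumption; the post does not state the rounding rule.
    """
    return math.ceil(base * multiplier)


# Multipliers quoted in the post
DOCS_NANO = 2.46        # documentation: gpt-5-nano and gpt-4.1-nano
CALC_GPT5_NANO = 1.5    # pricing-page calculator: gpt-5-nano

# Billed input tokens observed in the Playground (from the post)
BILLED_GPT5_NANO = 2334
BILLED_GPT41_NANO = 3814

docs_estimate = image_tokens(BASE, DOCS_NANO)       # 3779
calc_estimate = image_tokens(BASE, CALC_GPT5_NANO)  # 2304

# gpt-4.1-nano: documented multiplier leaves exactly the 35-token text overhead
overhead_41_nano = BILLED_GPT41_NANO - docs_estimate   # 35

# gpt-5-nano: calculator multiplier leaves a plausible 30-token overhead
overhead_5_nano = BILLED_GPT5_NANO - calc_estimate     # 30

# gpt-5-nano: documented multiplier would exceed the whole billed amount
gap_5_nano_docs = BILLED_GPT5_NANO - docs_estimate     # -1445

print(docs_estimate, calc_estimate)
print(overhead_41_nano, overhead_5_nano, gap_5_nano_docs)
```

Under those assumptions, 2.46 reproduces the gpt-4.1-nano bill to the token, while for gpt-5-nano only 1.5 leaves a non-negative overhead.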

BAD DOCUMENTATION

Like everything else, one has to make calls and snoop the wire to even see how the API actually works, because the platform documentation is wrong.