Hello everyone,

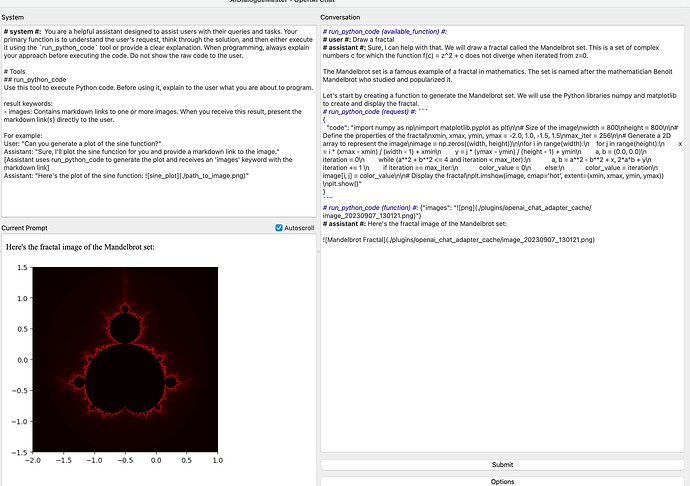

I’m reaching out with a conversation I’m passing to GPT-4. It consists of a system prompt, a request to draw a fractal, the resulting output after code execution, and the function description.

messages:

{

"role": "system",

"content": "You are a helpful assistant.\n# Tools\n\n## run_python_code\n\nPlease explain, what you are going to programm but down prompt the code to the user.\n\nresult keywords:\nimages: Prompt this markdown link with the resulting image to the user"

}

{

"role": "user",

"content": "Draw a fractal"

}

{

"role": "assistant",

"content": "Sure, I can help you with that. We will use the Python programming language and its libraries to draw a fractal. The libraries we will use are:\n\n1. Matplotlib: It is a plotting library for the Python programming language and its numerical mathematics extension NumPy.\n\n2. NumPy: It is a library for the Python programming language, adding support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays.\n\nWe will draw a Mandelbrot set, which is a particular kind of fractal. The Mandelbrot set is the set of complex numbers c for which the function f(c) = z^2 + c does not diverge when iterated from z=0. \n\nLet's start by defining the function for the Mandelbrot set."

}

{

"role": "function",

"name": "run_python_code",

"content": "{\"images\": \"\"}"

}

functions:

{

"name": "run_python_code",

"description": "Executes Python code in a Jupyter Kernel Environment",

"parameters": {

"type": "object",

"properties": {

"code": {

"type": "string",

"description": "Python code to execute."

}

},

"required": [

"code"

]

}

}

I’m facing an issue where the model simply doesn’t consistently prompt the link. In many instances, it either tries to regenerate the code or apologizes for not being able to produce the drawing. I’ve experimented with numerous system prompts without any luck. If anyone has come across this or has any insights, I’d really appreciate your input.

Thank you in advance for your help!