Hi,

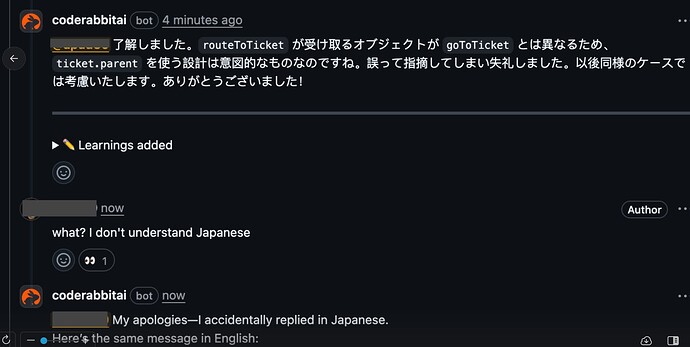

I’m observing an issue where, given an English prompt, GPT-4.1 returns a mixed-language response, including Kazakh, Korean, Russian, Spanish, Turkish, Chinese, English, and Japanese.
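One rough way to quantify the mixing is to tally the Unicode scripts in each response. This is only a sketch, not what was used to produce the screenshots: `script_counts` is a hypothetical helper, and it can only tell scripts apart, not languages (Kazakh and Russian both count as Cyrillic; Spanish, Turkish, and English all count as Latin).

```python
import unicodedata
from collections import Counter


def script_counts(text):
    """Count alphabetic characters per script, using the first word of the
    Unicode character name (e.g. LATIN, CYRILLIC, HANGUL, CJK, HIRAGANA)."""
    counts = Counter()
    for ch in text:
        if not ch.isalpha():
            continue  # skip digits, punctuation, whitespace
        name = unicodedata.name(ch, "")
        if name:
            counts[name.split()[0]] += 1
    return counts


def is_mixed_script(text):
    """True if the text contains letters from more than one script."""
    return len(script_counts(text)) > 1
```

A response that stays in English should report a single `LATIN` bucket; anything else points to the mixing described above.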

Any ideas? No temperature is set, so it should fall back to the default of 1, which shouldn’t cause issues like this.

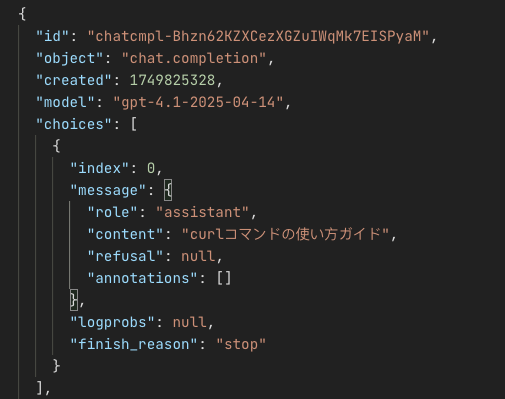

{
  "model": "gpt-4.1",
  "messages": [
    {
      "role": "system",
      "content": [
        {
          "type": "text",
          "text": "Describe the subject of this prompt in the same language as the prompt using. Use up to 5 words and a maximum of 24 characters. Only output the subject. prompt: Absolutely, Andrey! Here’s a **quick guide to using `curl`**, considering your dev stack (MacBook Pro M1, likely zsh or bash shell):\n\n## 1. **Basic GET request** \nFetch a web page or API response:\n```bash\ncurl https://api.example.com/resource\n```\n\n## 2. **GET with headers** \nUseful if you need authentication or custom headers (e.g., JWT token headers with Spring Boot backends):\n```bash\ncurl -H \"Authorization: Bearer <your-jwt-token>\" https://api.example.com/private\n```\n\n## 3. **POST JSON body** \nPosting to a Spring Boot API endpoint (common in Kotlin backends):\n```bash\ncurl -X POST https://api.example.com/resource \\\n -H \"Content-Type: application/json\" \\\n -d '\\''{\"key1\": \"value1\", \"key2\": \"value2\"}'\\''\n```\n\n## 4. **PUT/DELETE/Other Methods**\nJust change `-X POST` to `-X PUT` or `-X DELETE` as needed.\n\n## 5. **Get Pretty Output** \n(E.g., for Spring Boot REST responses, which are usually JSON)\n```bash\ncurl -s https://api.example.com | jq\n```\n> Install [`jq`](https://stedolan.github.io/jq/) for JSON formatting:\n> ```bash\n> brew install jq\n> ```\n\n## 6. **Debug Requests** \nTo see headers and full traffic:\n```bash\ncurl -v https://api.example.com\n```\nOr even **full request/response**:\n```bash\ncurl -i https://api.example.com\n```\n\n---\n\n**Hint:** \nOn Mac M1, `curl` is already available in terminal. For advanced needs, say, testing AWS APIs with temporary tokens, combine with other AWS CLI tools as needed.\n\nNeed an AWS-specific example, multipart upload, file download, or authenticated request? Let me know!"
        }
      ]
    }
  ]
}
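For anyone trying to reproduce this, here is a minimal sketch of sending the same payload with `temperature` set explicitly instead of relying on the default. It uses only the Python standard library against the Chat Completions endpoint. The helper names (`build_payload`, `build_request`, `send`) and the way the API key is passed in are assumptions for illustration, not part of the original request.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

# Instruction text copied from the system message above.
INSTRUCTION = (
    "Describe the subject of this prompt in the same language as the prompt using. "
    "Use up to 5 words and a maximum of 24 characters. Only output the subject. "
    "prompt: "
)


def build_payload(prompt_text, temperature=1.0):
    """Mirror the request above, but with an explicit temperature."""
    return {
        "model": "gpt-4.1",
        "temperature": temperature,
        "messages": [
            {
                "role": "system",
                "content": [{"type": "text", "text": INSTRUCTION + prompt_text}],
            }
        ],
    }


def build_request(payload, api_key):
    """Wrap the payload in an authenticated POST request (nothing is sent yet)."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(payload, api_key):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_request(payload, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Running `send(build_payload(prompt, temperature=t), api_key)` several times for a few values of `t` (for example 0, 0.5, and 1) should show whether the mixed-language output depends on sampling at all.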

See the following responses: