Hi all,

I would really appreciate some help with this. The following Python code works well with the gpt-4 model, but as soon as I switch to my own fine-tuned babbage-002/davinci model, I get this error message:

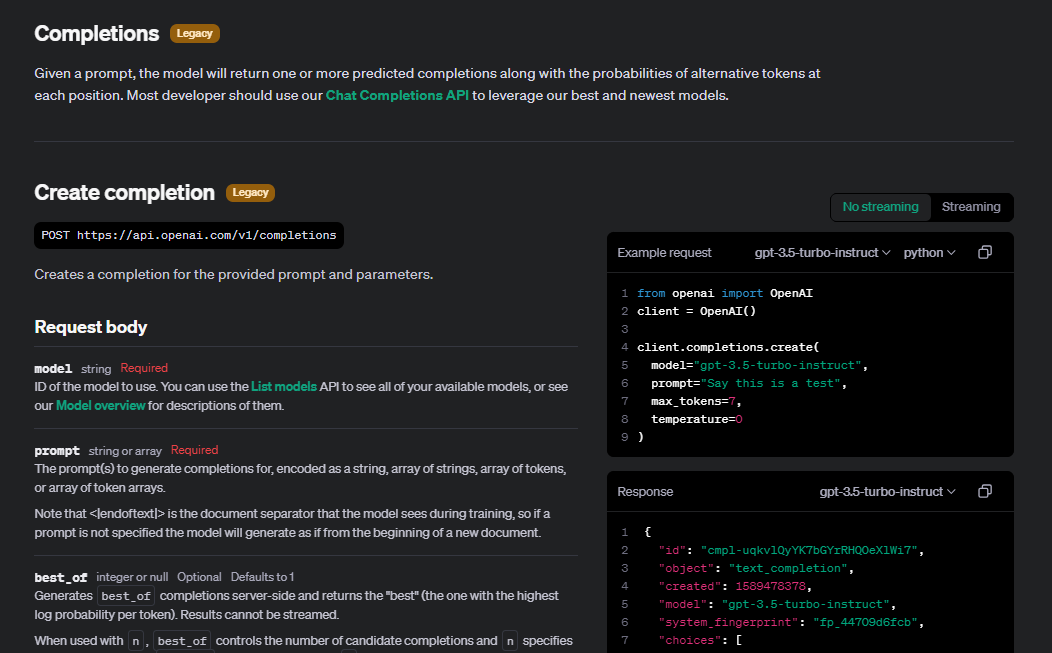

An error occurred while categorizing: Error code: 404 - {'error': {'message': 'This is not a chat model and thus not supported in the v1/chat/completions endpoint. Did you mean to use v1/completions?', 'type': 'invalid_request_error', 'param': 'model', 'code': None}}

Here is the Python code in question:

    from openai import OpenAI

    def categorize_description(description):
        """
        This function uses OpenAI to categorize the transaction based on its description.

        Args:
            description: The description of the transaction.

        Output:
            category: The category assigned to the transaction.
        """
        if not openai_api_key:
            st.info("Please add your OpenAI API key to continue.")
            st.stop()

        client = OpenAI(api_key=os.environ['OPENAI_API_KEY'])
        prompt = f"'Categorize the following transaction description: {description}'"

        try:
            completion = client.chat.completions.create(
                model="ft:babbage-002:mycustomtrainedmodelhere",
                messages=[
                    {"role": "system", "content": "You are a helpful assistant"},
                    {"role": "user", "content": prompt}
                ],
                temperature=0.5,
                max_tokens=64,
            )
            category = completion.choices[0].message
            # category = completion['choices'][0]['message']['content']
            return category
        except Exception as e:
            st.error(f"An error occurred while categorizing: {e}")
            return "Uncategorized"
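
Going by the hint in the error message, my guess is that the call would have to go through `client.completions.create` with a plain `prompt` string instead of `messages`. Below is a minimal sketch of what I think that would look like, not something I have verified against my model. The `make_client` helper and the `client`/`model` parameters are names I made up for illustration, I swapped `st.error` for `print` to keep it standalone, and I am assuming the prompt wording should match whatever format the model was fine-tuned on.

```python
import os


def make_client():
    # Hypothetical helper: imported lazily so the function below can also be
    # exercised with a stub client that mimics the same interface.
    from openai import OpenAI
    return OpenAI(api_key=os.environ["OPENAI_API_KEY"])


def categorize_description(description, client,
                           model="ft:babbage-002:mycustomtrainedmodelhere"):
    """Categorize a transaction through the completions endpoint."""
    # Base models such as babbage-002 take a single prompt string,
    # not a list of chat messages with roles.
    prompt = f"Categorize the following transaction description: {description}"
    try:
        completion = client.completions.create(
            model=model,
            prompt=prompt,
            temperature=0.5,
            max_tokens=64,
        )
        # Completions responses carry the generated text in choices[0].text
        # (there is no .message object as in chat completions).
        return completion.choices[0].text.strip()
    except Exception as e:
        print(f"An error occurred while categorizing: {e}")
        return "Uncategorized"
```

It would be called as `categorize_description("some transaction text", make_client())`. Please correct me if this is not the right way to call a fine-tuned babbage-002 model.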

I would appreciate the assistance here.

Thank you.