Hi there, good day!

I am quite interested in how fast Groq and Cerebras have made their systems, and I would like to know whether it would be possible, in theory, for OpenAI to use their compute to speed up GPT models.

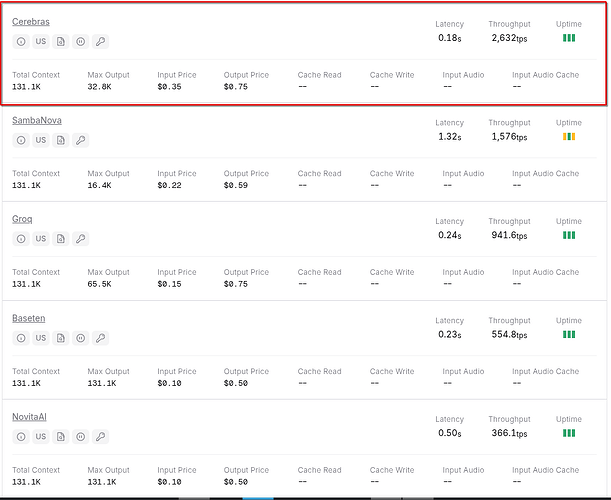

On OpenRouter, we can see a very large increase in throughput compared with other providers:

- GPT OSS 120B: about 7x the tokens per second of Novita.
- Qwen: about 16x the tokens per second of Together.

(I can only include one image and no links, so please look up the figures on OpenRouter.)

So, are GPT models unable to run on Cerebras hardware, or am I missing a critical detail?

Could OpenAI let us run GPT on Cerebras, similar to how we can run GPT on Azure? Or could they serve the models to us from Cerebras systems? I would even pay more per token to run there if it meant a real speed improvement.

There is also Groq, but in my tests Cerebras was generally faster under the same conditions (30 prompts, each run 5 times, on GPT OSS 120B and 20B).
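For anyone who wants to reproduce this kind of comparison, here is a minimal Python sketch of the measurement. It assumes a hypothetical `generate` function that sends one prompt to a provider and returns the number of output tokens produced; it is not any provider's real SDK call, so you would wrap your own client in it. Each request is timed end to end, so time to first token is included in the rate, and the result is the median tokens per second over every prompt and repeat.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_throughput(
    generate: Callable[[str], int],
    prompts: Sequence[str],
    repeats: int = 5,
) -> float:
    """Return the median output tokens/second over every (prompt, repeat) run.

    `generate` is a hypothetical stand-in for a provider call: it sends one
    prompt and returns how many output tokens came back.
    """
    rates = []
    for _ in range(repeats):
        for prompt in prompts:
            start = time.perf_counter()
            n_tokens = generate(prompt)  # whole request, including time to first token
            elapsed = time.perf_counter() - start
            if elapsed > 0:
                rates.append(n_tokens / elapsed)
    # Median is less sensitive than the mean to a single slow or queued request.
    return statistics.median(rates)


def speedup(fast_tps: float, slow_tps: float) -> float:
    """Ratio of two throughputs; 7.0 means 'seven times the tokens per second'."""
    return fast_tps / slow_tps
```

Running `measure_throughput` once per provider with the same prompt list and then calling `speedup` on the two results gives a ratio comparable to the "7x" and "16x" figures quoted from OpenRouter.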