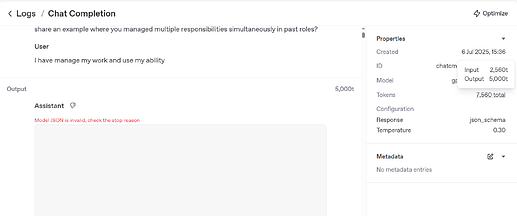

I’m running into an issue with the structured output schema in a chat application. In most cases I get a response of about 100 tokens, but occasionally, seemingly at random, the model consumes the entire 5000-token `max_tokens` limit I’ve set and returns invalid JSON (see the image above for what I see in the OpenAI completions logs).
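
To make the symptom concrete: a completion that stops at `max_tokens` is cut off mid-output, so it cannot be complete JSON, and the Chat Completions API reports this with `finish_reason == "length"` instead of `"stop"`. The stdlib-only sketch below works on plain `(finish_reason, content)` strings rather than real SDK objects; `classify_completion` is a hypothetical helper for illustration, not part of the OpenAI SDK.

```python
import json


def classify_completion(finish_reason: str, content: str) -> str:
    """Hypothetical helper: label a raw completion as 'ok', 'truncated' or 'invalid'.

    Assumes the Chat Completions convention that finish_reason is "stop" for a
    natural end and "length" when the max_tokens cap cut the output off.
    """
    if finish_reason == "length":
        # Output hit the token cap, so the JSON is almost certainly incomplete.
        return "truncated"
    try:
        json.loads(content)
    except json.JSONDecodeError:
        return "invalid"
    return "ok"


# A normal short reply using the three fields of ChatOutputSchema
complete = '{"total_questions_answered": 2, "end_conversation": false, "response": "Sure!"}'
# The same kind of reply cut off mid-string, as happens when max_tokens runs out
cut_off = '{"total_questions_answered": 2, "end_conversation": false, "response": "Sure! Here is'

print(classify_completion("stop", complete))   # ok
print(classify_completion("length", cut_off))  # truncated
```

Checking `finish_reason` first means a cut-off reply is flagged as truncated even before any JSON parsing is attempted.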

Output Schema:

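```python
from typing import List

import backoff
import openai
from pydantic import BaseModel

# `settings` is the application's own config object (its import is not shown here)


class ChatOutputSchema(BaseModel):
    total_questions_answered: int
    end_conversation: bool
    response: str


# Retries with exponential backoff (for up to 300 s) only when RateLimitError is raised
@backoff.on_exception(backoff.expo, openai.RateLimitError, max_time=300)
def get_chat_output(_messages: List[dict]) -> ChatOutputSchema:
    openai_client = openai.OpenAI(api_key=settings.OPENAI_API_KEY)

    # Structured Outputs: the SDK turns the Pydantic model into a JSON schema
    # and parses the reply back into a ChatOutputSchema instance
    openai_response = openai_client.beta.chat.completions.parse(
        model="gpt-4o-2024-08-06",
        messages=_messages,
        response_format=ChatOutputSchema,
        temperature=0.3,
        max_tokens=5000,  # hard cap on completion tokens
    )

    return openai_response.choices[0].message.parsed
```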
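
Note that `@backoff.on_exception(backoff.expo, openai.RateLimitError, max_time=300)` only retries on rate-limit errors, so any other exception raised inside `get_chat_output`, including the length-limit error from `.parse()`, propagates on the first attempt. As a stdlib-only illustration of that retry-on-exception pattern (not the `backoff` library itself), this sketch uses a hypothetical `retry_on` decorator and two hypothetical stand-in exceptions, `RateLimited` and `LengthLimitReached`, in place of the real OpenAI exception classes.

```python
import functools
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for openai.RateLimitError."""


class LengthLimitReached(Exception):
    """Hypothetical stand-in for the SDK's length-limit error."""


def retry_on(exceptions: Tuple[Type[BaseException], ...], max_tries: int = 3, base_delay: float = 0.5):
    """Retry with exponential backoff, but only when one of `exceptions` is raised."""
    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(func)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(1, max_tries + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == max_tries:
                        raise  # out of attempts: surface the last error
                    time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5 s, 1 s, 2 s, ...
            # Only reached when max_tries < 1; any non-listed exception escapes above
            raise ValueError("max_tries must be >= 1")
        return wrapper
    return decorator


calls = {"rate": 0, "length": 0}


@retry_on((RateLimited,), base_delay=0)
def flaky_rate_limited() -> str:
    calls["rate"] += 1
    if calls["rate"] < 3:
        raise RateLimited()
    return "done"


@retry_on((RateLimited,), base_delay=0)
def hits_length_limit() -> str:
    calls["length"] += 1
    raise LengthLimitReached()


print(flaky_rate_limited(), calls["rate"])  # done 3
try:
    hits_length_limit()
except LengthLimitReached:
    print("not retried, calls:", calls["length"])  # not retried, calls: 1
```

Exceptions outside the listed tuple are never caught by `wrapper`, which is why the length error is not retried.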

Error:

```
Traceback (most recent call last):
  File "site-packages/celery/app/trace.py", line 453, in trace_task
    R = retval = fun(*args, **kwargs)
  File "site-packages/celery/app/trace.py", line 736, in __protected_call__
    return self.run(*args, **kwargs)
  File "tasks.py", line 293, in chat_task
    chat_output_obj = chat(
  File "tasks_utils.py", line 567, in chat
    return get_chat_output(_messages=messages)
  File "site-packages/backoff/_sync.py", line 105, in retry
    ret = target(*args, **kwargs)
  File "tasks_utils.py", line 558, in get_chat_output
    openai_response = openai_client.beta.chat.completions.parse(
  File "site-packages/openai/resources/beta/chat/completions.py", line 158, in parse
    return self._post(
  File "site-packages/openai/_base_client.py", line 1239, in post
    return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls))
  File "site-packages/openai/_base_client.py", line 1039, in request
    return self._process_response(
  File "site-packages/openai/_base_client.py", line 1121, in _process_response
    return api_response.parse()
  File "site-packages/openai/_response.py", line 325, in parse
    parsed = self._options.post_parser(parsed)
  File "site-packages/openai/resources/beta/chat/completions.py", line 152, in parser
    return _parse_chat_completion(
  File "site-packages/openai/lib/_parsing/_completions.py", line 72, in parse_chat_completion
    raise LengthFinishReasonError(completion=chat_completion)
openai.OpenAIError: Could not parse response content as the length limit was reached - CompletionUsage(completion_tokens=5000, prompt_tokens=2560, total_tokens=7560, completion_tokens_details=CompletionTokensDetails(accepted_prediction_tokens=0, audio_tokens=0, reasoning_tokens=0, rejected_prediction_tokens=0), prompt_tokens_details=PromptTokensDetails(audio_tokens=0, cached_tokens=0))
```
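
Reading the `CompletionUsage` in that error: `completion_tokens` equals the `max_tokens=5000` cap exactly, and `total_tokens` is simply prompt plus completion (2560 + 5000 = 7560), roughly 50 times the usual ~100-token reply. A small stdlib sketch of that check with the numbers from the log; the `Usage` dataclass and `hit_token_cap` are hypothetical, modeled on the fields printed above rather than the SDK's own types, and the "typical" prompt size is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    """Hypothetical mirror of the token counts printed in the error message."""
    prompt_tokens: int
    completion_tokens: int
    total_tokens: int


def hit_token_cap(usage: Usage, max_tokens: int) -> bool:
    """True when the completion used the whole max_tokens budget (a strong sign it was cut off)."""
    return usage.completion_tokens >= max_tokens


# Values copied from the CompletionUsage in the traceback
failed = Usage(prompt_tokens=2560, completion_tokens=5000, total_tokens=7560)
# Hypothetical typical reply: ~100 completion tokens, prompt size assumed equal
typical = Usage(prompt_tokens=2560, completion_tokens=100, total_tokens=2660)

print(failed.prompt_tokens + failed.completion_tokens == failed.total_tokens)  # True
print(hit_token_cap(failed, max_tokens=5000))   # True
print(hit_token_cap(typical, max_tokens=5000))  # False
print(failed.completion_tokens // typical.completion_tokens)  # 50
```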

Any help would be appreciated. I’m happy to share more details if needed.