Hi,

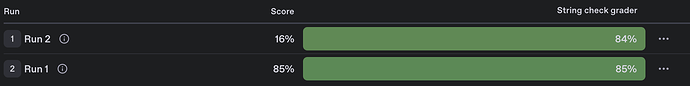

I’ve been running into an issue with the Evaluations tool (https://platform.openai.com/evaluations) lately and am wondering whether something changed or whether I’m doing something wrong. I am running a custom evaluation with a CSV file as the dataset, which consists of 100 records. I’m able to create a new evaluation and start runs as expected. The first run has a reasonable chance of processing the entire dataset, but sometimes it only processes ~10-20% of the records. Subsequent runs only process ~10-20% of the dataset.

I verified our usage tier and we’re well within our limits. Additionally, this was working as expected roughly 2-3 weeks ago, and I don’t see any visible errors. Is this expected behavior? If not, are there additional logs I can look at to see why a run might be exiting early?

Today I’m going to play with running evals via code instead, in the hopes that it works better.
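For anyone comparing notes, here is a rough sketch of the check I have in mind: count the records in the CSV, then compare that against how many rows a run reports as processed. The helper names (`count_csv_records`, `coverage_report`, `fetch_result_counts`) are my own. I’m assuming the `openai` Python SDK exposes `client.evals.runs.retrieve` and that the run object carries a `result_counts` field with `total` and `errored`; I haven’t confirmed that against the current SDK, so treat that part as a guess.

```python
import csv
import io


def count_csv_records(csv_text: str) -> int:
    """Count data rows in a CSV string, excluding the header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(1 for _ in reader)


def coverage_report(expected: int, result_counts: dict) -> str:
    """Summarize how much of the dataset a run processed.

    `result_counts` is assumed to contain "total" and "errored" keys,
    mirroring what I understand the run object to report.
    """
    processed = result_counts.get("total", 0)
    errored = result_counts.get("errored", 0)
    pct = 100.0 * processed / expected if expected else 0.0
    return f"processed {processed}/{expected} ({pct:.0f}%), errored={errored}"


def fetch_result_counts(eval_id: str, run_id: str) -> dict:
    """Fetch result counts for one run.

    Assumption: the SDK call and the `result_counts` field exist as
    written here. Needs OPENAI_API_KEY set in the environment.
    """
    from openai import OpenAI  # imported lazily so the helpers above work offline

    client = OpenAI()
    run = client.evals.runs.retrieve(run_id, eval_id=eval_id)
    return run.result_counts.model_dump()
```

The idea would be to call `coverage_report(count_csv_records(open("dataset.csv").read()), fetch_result_counts(eval_id, run_id))` after each run, where `dataset.csv` and the two IDs are placeholders, and see whether the processed count falls short of 100 without any errored rows.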

Thanks!