The AI must also be able to make sense of the choices it has to select from, and each choice needs enough “writeability” and probability that it is as likely as the others to be produced when it is the correct choice.

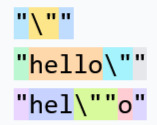

One can immediately imagine an application where desired enums can’t be used:

“You are an AI that reports on string characters that can’t be used as key values in an OpenAI structured JSON schema”;

```python
enum = ["\x00", "\x0a", '"', '\"']  # python
```

What, you don’t want to send a bunch of Unicode code points yourself and see which ones fail as an enum? Okay, I’ll do a few hundred.

Send a schema, varying the enum value with each request:

```python
# Construct the json_schema with the current test_value as the enum
json_schema = {
    "name": "ascii_test",
    "description": "A basic structured output response schema for an ASCII test with a fixed value",
    "strict": True,
    "schema": {
        "type": "object",
        "required": [
            "key"
        ],
        "properties": {
            "key": {
                "type": "string",
                "description": "The enum value under test which constrains the AI to one possible response",
                "enum": [
                    test_value
                ]
            }
        },
        "additionalProperties": False
    }
}
```
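The post shows the schema but not the loop that sends it. A minimal sketch of that loop follows, assuming a caller-supplied `send` function that submits one schema and returns the model's raw JSON text. `make_schema`, `probe`, and `send` are hypothetical names, and treating "API error or mismatched reply" as unsupported is an assumption about how results were scored.

```python
import json
from typing import Callable, Dict, Iterable


def make_schema(test_value: str) -> dict:
    """The schema from the post, with `test_value` as the only enum member."""
    return {
        "name": "ascii_test",
        "description": "A basic structured output response schema for an ASCII test with a fixed value",
        "strict": True,
        "schema": {
            "type": "object",
            "required": ["key"],
            "properties": {
                "key": {
                    "type": "string",
                    "description": "The enum value under test which constrains the AI to one possible response",
                    "enum": [test_value],
                }
            },
            "additionalProperties": False,
        },
    }


def probe(values: Iterable[str], send: Callable[[dict], str]) -> Dict[str, bool]:
    """Send one schema per value and record whether the value came back intact.

    `send` is a hypothetical callable: it submits the schema and returns the
    model's raw JSON text. Any raised error (e.g. the API rejecting the schema)
    or a reply whose "key" differs from the value counts as unsupported.
    """
    results = {}
    for value in values:
        try:
            reply = json.loads(send(make_schema(value)))
            results[value] = reply.get("key") == value
        except Exception:
            results[value] = False
    return results
```

With the official `openai` Python package, `send` could wrap `client.chat.completions.create(...)` with `response_format={"type": "json_schema", "json_schema": schema}` and return `choices[0].message.content`; the model and prompt are your choice. Injecting `send` keeps the loop testable without network access.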

Iterating over all the single-byte Unicode code points (double quotes skipped) gives results like these:

```
{
    "character": "' '",
    "byte_value": 32,
    "supported": true
},
{
    "character": "'!'",
    "byte_value": 33,
    "supported": true
},
{
    "character": "'\"'",
    "byte_value": 34,
    "supported": false
},
{
    "character": "'#'",
    "byte_value": 35,
    "supported": true
},
```
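Result entries in this shape could be produced along the following lines. The labeling rule (`repr()` for printable characters, a backslash escape such as `\x7f` for non-printable ones) is inferred from the output shown rather than taken from the author's script, and `describe` and `make_record` are hypothetical names.

```python
import json


def describe(byte_value: int) -> str:
    """Label a code point: repr() if printable, else a backslash escape.

    This rule is inferred from the listed output, not confirmed.
    """
    ch = chr(byte_value)
    if ch.isprintable():
        return repr(ch)
    # Control characters (0x00-0x1F, 0x7F-0x9F) become e.g. \x7f or \n.
    return ch.encode("unicode_escape").decode("ascii")


def make_record(byte_value: int, supported: bool) -> dict:
    """One result entry in the shape shown in the listing."""
    return {
        "character": describe(byte_value),
        "byte_value": byte_value,
        "supported": supported,
    }


if __name__ == "__main__":
    # Illustrative only: mark byte 34 unsupported, as in the listing.
    records = [make_record(b, b != 34) for b in (32, 33, 34, 35)]
    print(json.dumps(records, indent=2))
```

Serializing with `json.dumps` escapes the double quote inside `repr('"')`, which is why byte 34 appears as `"'\"'"` in the listing.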

Prove there is no issue sending the characters for bytes 128-255 as their latin1 or cp1252 representations:

```
[
    {
        "character": "'}'",
        "byte_value": 125,
        "supported": true
    },
    {
        "character": "'~'",
        "byte_value": 126,
        "supported": true
    },
    {
        "character": "\\x7f",
        "byte_value": 127,
        "supported": true
    },
    {
        "character": "\\x80",
        "byte_value": 128,
        "supported": true
    },
    ...(successes continue)
```
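The post doesn't show how the 128-255 test values were generated. One way, assuming Python's built-in `latin-1` and `cp1252` codecs, is to decode each byte on its own; `high_byte_values` is a hypothetical helper name.

```python
from typing import Dict


def high_byte_values(encoding: str) -> Dict[int, str]:
    """Map each byte 0x80-0xFF to the single character it decodes to.

    Bytes the codec leaves undefined (cp1252 has a handful, e.g. 0x81)
    raise UnicodeDecodeError and are skipped.
    """
    values: Dict[int, str] = {}
    for b in range(0x80, 0x100):
        try:
            values[b] = bytes([b]).decode(encoding)
        except UnicodeDecodeError:
            continue
    return values


latin1 = high_byte_values("latin-1")  # byte N -> code point U+00NN
cp1252 = high_byte_values("cp1252")   # differs from latin-1 only in 0x80-0x9F

# Bytes whose character depends on which encoding you assume.
differing = sorted(b for b in cp1252 if cp1252[b] != latin1[b])
```

For example, byte 0x80 is the control character U+0080 under latin-1 but `€` (U+20AC) under cp1252, so the two readings exercise different characters only in the 0x80-0x9F range.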

The only “gotcha” seems to be any quote, or an unescaped linefeed (“\x0a”).
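To catch these before sending a schema, a small pre-filter can set aside enum candidates that contain the characters named above. This is a hypothetical helper (`partition_enum` and `SUSPECT_CHARS` are illustrative names), and its character set simply mirrors this post's findings, reading “any quote” as both `"` and `'`, rather than any documented OpenAI rule.

```python
from typing import Iterable, List, Tuple

# Characters this post found troublesome as enum values ("any quote" read as
# both quote marks, plus linefeed). Not a documented OpenAI rule; adjust it
# to match your own probe results.
SUSPECT_CHARS = frozenset({'"', "'", "\n"})


def partition_enum(values: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split candidate enum values into (usable, suspect), keeping input order."""
    usable: List[str] = []
    suspect: List[str] = []
    for value in values:
        if any(ch in SUSPECT_CHARS for ch in value):
            suspect.append(value)
        else:
            usable.append(value)
    return usable, suspect
```

Applied to the `enum` list from the opening example, only `"\x00"` passes the filter; the linefeed and both quote entries (which are the same one-character string in Python) are set aside.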