Enhanced Prompt Management

Prompt quality is critical to the success of your integrations, but many developers are managing prompts through copy-paste and vibe checks alone. The resulting uncertainty leads to slower integration velocity and limits adoption of new models and capabilities.

We want to fix this!

Introducing… Prompts

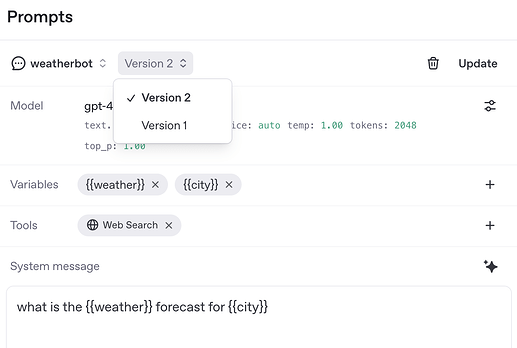

Prompts are reusable configurations for responses that combine messages, tool definitions, and model config. They’re versioned and support template variables.

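    import OpenAI from "openai";

    // The client reads OPENAI_API_KEY from the environment by default.
    const openai = new OpenAI();

    // Reference a saved Prompt by ID and fill in its template variables.
    const response = await openai.responses.create({
      prompt: {
        id: "pmpt_685061e957dc8196a30dfd58aba02b940984717f96494ab6",
        variables: {
          weather: "7 day",
          city: "San Francisco",
        },
      },
    });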
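Because Prompts are versioned and take template variables, it can help to build the `prompt` argument in one place. The following is a minimal sketch, not an official helper: `PromptRef` and `buildPromptRef` are hypothetical names, the optional `version` field is assumed to pin a specific prompt version, and the empty-value check is an illustrative convention rather than anything the API requires.

```typescript
// Hypothetical shape of the `prompt` argument. `id` and `variables` appear in
// the example above; `version` is an assumption used here to pin a version.
interface PromptRef {
  id: string;
  version?: string;
  variables?: Record<string, string>;
}

// Build a prompt reference, rejecting blank variable values early so a
// template is never sent with an empty slot (an illustrative check only).
function buildPromptRef(
  id: string,
  variables: Record<string, string> = {},
  version?: string,
): PromptRef {
  const blank = Object.entries(variables)
    .filter(([, value]) => value.trim() === "")
    .map(([name]) => name);
  if (blank.length > 0) {
    throw new Error(`Empty values for variables: ${blank.join(", ")}`);
  }

  const ref: PromptRef = { id };
  if (Object.keys(variables).length > 0) ref.variables = variables;
  if (version !== undefined) ref.version = version;
  return ref;
}

// Same prompt and variables as the example above, with no version pinned.
const promptRef = buildPromptRef(
  "pmpt_685061e957dc8196a30dfd58aba02b940984717f96494ab6",
  { weather: "7 day", city: "San Francisco" },
);
```

The result can then be passed as `prompt: promptRef` in `openai.responses.create(...)`. Passing a third argument pins a version, which may be useful when a deployment should not pick up later edits to the prompt.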

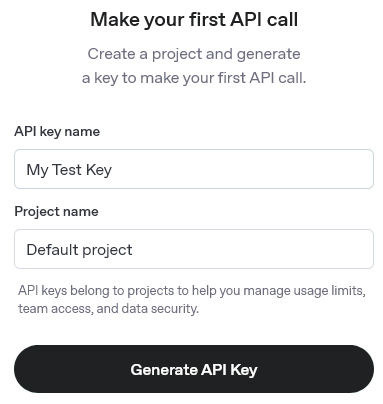

What’s launching

Starting today, Prompts are a first-class, versioned resource within the Platform. They’re deeply integrated with Evals and Logs and natively accessible via the API, making it easier than ever to manage, iterate on, and deploy high-quality prompts at scale.

We’ve overhauled the Prompts Playground and introduced a new Optimize tool to help developers home in on the most effective versions of their prompts.

The Optimize tool helps catch contradictory instructions and ambiguous formatting hints, and suggests an optimized rewrite.

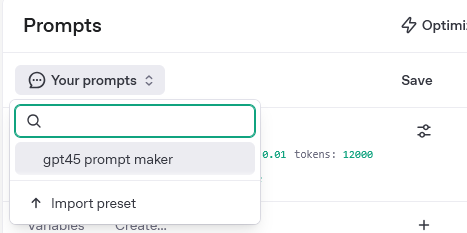

Q: What’s happening to Presets?

You can now import your Presets into Prompts and begin using the new features, including versioning and prompt templates.

Q: What’s next?

We have a pretty exciting roadmap for improving Optimize and providing an even more seamless experience when working with Evals. We’d love to hear which features you’d most like to see!

Q: Where can I learn more?

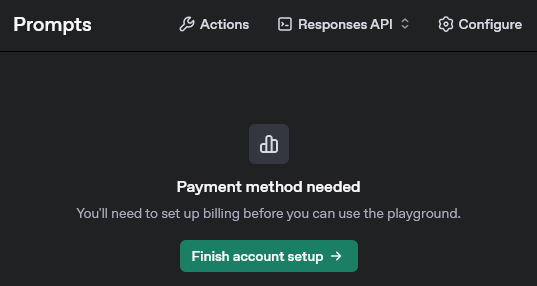

Take a look at the docs and try Prompts in the updated Prompt Playground.