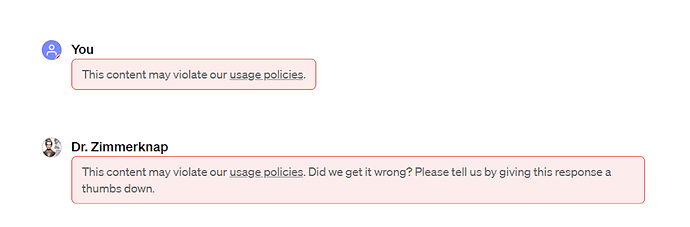

I have built one that acts as a psychiatrist to help analyze fictional characters, something it does very well, except on certain topics. While it is set to be clinical and detached, it censors itself when the issue is sexual abuse as a possible reason for a character's mental issues. At its worst, it has censored a mention of a baby being breastfed and a line about children being small, noisy, irrational, messy, impulsive security risks. Not exactly X-rated stuff.

CPT can be such a wonderful tool for writers, but the strict usage policies make it less and less useful unless you write for very small children. I fully understand there must be restrictions. However, considering how well CPT reads context, it should be able to figure out what is harmful and what is not, for example by telling a story whose message is racist apart from a story that sheds light on racism. It is even harmful if we, especially as writers, cannot bring up "unpleasant" topics. It would be nice if the usage policy could be set a bit higher than the level of a little old church lady.