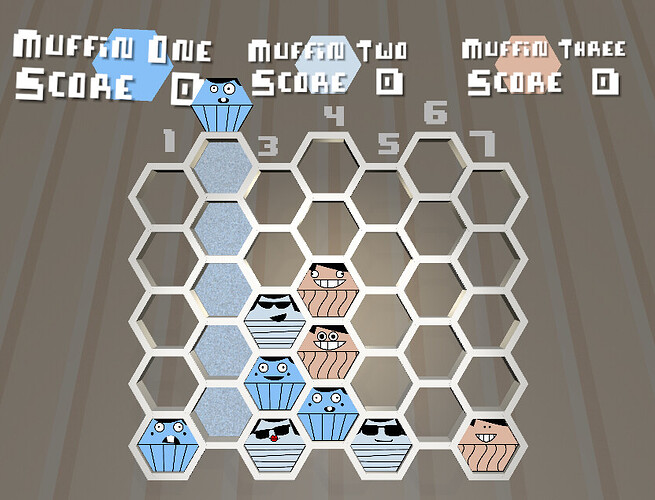

I am the developer of a multiplayer puzzle game called All Your Muffins. It is a competitive hex-based match-3 game. As part of development I had the game output the board state both as an ASCII-art grid and as a 2D array. The array is quite misleading, as the game is played on a hexagon grid and therefore every other row is offset by half a cell to create diagonal alignment.

I got the idea to explain the game, paste the board state into GPT-4o, and see how it did. Spoiler: Poorly.

I tried with Claude and lengthy explanations of the board state, and gave hints. Terrible.

o1: Utter failure

o3: Spends 2 minutes outputting hundreds of lines of thought, makes terrible moves

I find this absolutely fascinating, as a human can glance at the board and see an obvious scoring opportunity, but the basic concept of hexes seems to elude every LLM I’ve tested. They get both representations of the board state:

..a...b...c...d...e...f...g..

.....___.....___.....___.....

.___/...\___/...\___/...\___.

/...\___/...\___/...\___/...\

\___/...\___/...\___/...\___/

/...\___/...\___/...\___/...\

\___/...\___/...\___/.2.\___/

/...\___/...\___/.1.\___/...\

\___/...\___/.1.\___/.3.\___/

/...\___/...\___/.3.\___/...\

\___/.3.\___/.2.\___/.3.\___/

/...\___/.2.\___/.3.\___/.2.\

\.../...\___/...\___/...\___/

a b c d e f g

---------------

0 0 0 0 0 0 0

0 0 0 0 0 0 0

0 0 0 0 1 2 0

0 0 0 1 3 3 0

0 3 2 2 3 3 2

---------------
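To make the mismatch between the array and the hex grid concrete, here is a minimal sketch of neighbour lookup on the array dump. It assumes, reading off the ASCII art above, that columns b, d and f sit half a cell higher than a, c, e and g, and that the array has 5 rows of 7 columns listed top to bottom. `ROWS`, `COLS` and `neighbors` are made-up names for illustration, not part of the game's code.

```python
ROWS, COLS = 5, 7  # size of the array dump above

def neighbors(row, col):
    """Hex neighbours of array cell (row, col), with rows counted from the top.

    Assumption: odd columns (b, d, f) are raised by half a cell, so a cell's
    height is tracked in half-cell units ("doubled" coordinates).
    """
    y = 2 * (ROWS - 1 - row) + col % 2  # doubled height above the floor
    found = []
    # Straight up/down is two half-cells; a diagonal step is one column
    # sideways and one half-cell up or down.
    for dc, dy in ((0, 2), (0, -2), (1, 1), (1, -1), (-1, 1), (-1, -1)):
        c, ny = col + dc, y + dy
        r = ROWS - 1 - (ny - c % 2) // 2  # back to an array row
        if 0 <= c < COLS and 0 <= r < ROWS:
            found.append((r, c))
    return found
```

Under that assumption, `neighbors(3, 3)` (the `1` in column d) contains both `(3, 2)` and `(2, 4)`, so the diagonal c, d, e runs through array rows 3, 3, 2. A straight line on the hex board is a crooked one in the array, which may be part of why the array is hard to read.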

And of course pasting an image of the game into the LLMs that can process images does nothing to help them along (just a screenshot, not matching the above data).

Can the LLMs learn this game? Is this an LLM Turing test?

Omg… use a slim data structure and a program that converts the moves…

In chess you just let the model output D2D3

and then give it the positions of all figures and ask for next turn.

If you let it fly a drone you wouldn’t let it print a new drone at another location either, right?

I don’t understand your reply. The LLM would output “I drop in column e” and I would do the move in the game, copy and paste the board. It was a simple test, but they cannot grasp the hexagon grid data structure or the ascii version.

Well, forget about llm for once. How would you solve it without llm?

Solve… the whole game? I just wanted to see if GPT could play it. I have a bot player that absolutely trounces the LLM player and will give me a run for my money if I make any mistakes.

And can you feed the code to the llm then?

Sure, but it still cannot “see” the state of the board or understand where the threats and opportunities lie. I’m sure if I played a thousand games and fed the training data to some model it could play, but off the shelf they cannot hang with hexagons like they could play chess or connect 4 or tic-tac-toe.

So what are the rules? Are they clear?

Can you feed it the state and the rules and ask for code that finds the best next move programmatically?

Yeah, I explain it ten ways from Sunday before playing. 3 players take turns dropping a piece into the hex grid. If there are 3 in a row vertically or diagonally, the pieces get scored and removed from the board and the pieces above drop, potentially causing combos. GPT could write the bot code and know the game as well as anyone. But paste a board state in the same chat and it can’t figure out an obvious move.
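The rules as described (drop, score three in a row, remove, let pieces fall, repeat for combos) can be sketched roughly like this. It is not the game's actual code. The board layout (a list of columns, each a bottom-up list of player numbers), the half-cell offset of columns b, d, f, and the scoring (one point per removed piece, credited to the piece's owner) are all assumptions made for illustration.

```python
# Directions in "doubled" coordinates (height in half-cells):
# straight up, up-right diagonal, down-right diagonal.
AXES = ((0, 2), (1, 1), (1, -1))

def cells(board):
    """Map (column, doubled height) -> player for every occupied cell.
    Assumption: odd columns (b, d, f) sit half a cell higher."""
    return {(c, 2 * h + c % 2): p
            for c, column in enumerate(board) for h, p in enumerate(column)}

def find_matches(board):
    """Return every cell that is part of three equal pieces in a line."""
    occ = cells(board)
    hit = set()
    for (c, y), p in occ.items():
        for dc, dy in AXES:
            run = [(c + i * dc, y + i * dy) for i in range(3)]
            if all(occ.get(cell) == p for cell in run):
                hit.update(run)
    return hit

def drop(board, col, player):
    """Drop a piece into `col`, then resolve matches until the board is stable.
    Mutates `board`; returns {player: pieces scored} (assumed scoring rule)."""
    board[col].append(player)
    scored = {}
    while True:
        hit = find_matches(board)
        if not hit:
            return scored
        occ = cells(board)
        for cell in hit:
            scored[occ[cell]] = scored.get(occ[cell], 0) + 1
        for c, column in enumerate(board):
            # Rebuilding each column without the matched cells is the
            # gravity step: everything above a removed piece drops down.
            board[c] = [p for h, p in enumerate(column)
                        if (c, 2 * h + c % 2) not in hit]
```

With the board from the first post written as `[[], [3], [2], [2, 1], [3, 3, 1], [3, 3, 2], [2]]`, `drop(board, 2, 1)` (player 1 into column c) removes the diagonal of 1s through c, d and e under these assumptions.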

A solution to solve this could be to check out each field recursively and on each field analyse each possible step afterwards and find out which sequence is most likely to win.
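A rough sketch of that kind of recursive look-ahead follows. It is a depth-limited search in which every player is assumed to pick the move that maximises their own points, which is one possible reading of "most likely to win" and not necessarily what was meant. The board layout, the column offset, the 5-piece column limit, the turn order 1, 2, 3 and the scoring rule are all assumptions; `play` and `search` are hypothetical names.

```python
AXES = ((0, 2), (1, 1), (1, -1))  # vertical + two diagonals, doubled coordinates
MAX_HEIGHT = 5                    # assumed from the 5-row array dump
PLAYERS = (1, 2, 3)               # assumed turn order

def play(board, col, player):
    """Copy the board, drop a piece, resolve matches; return (board, gained)."""
    board = [list(column) for column in board]
    board[col].append(player)
    gained = {p: 0 for p in PLAYERS}
    while True:
        occ = {(c, 2 * h + c % 2): p
               for c, column in enumerate(board) for h, p in enumerate(column)}
        hit = set()
        for (c, y), p in occ.items():
            for dc, dy in AXES:
                run = [(c + i * dc, y + i * dy) for i in range(3)]
                if all(occ.get(cell) == p for cell in run):
                    hit.update(run)
        if not hit:
            return board, gained
        for cell in hit:
            gained[occ[cell]] += 1  # assumption: points go to the piece's owner
        # Dropping the matched cells from each column also applies gravity.
        board = [[p for h, p in enumerate(column)
                  if (c, 2 * h + c % 2) not in hit]
                 for c, column in enumerate(board)]

def search(board, player, depth):
    """Return (best column, points per player) looking `depth` moves ahead."""
    legal = [c for c in range(len(board)) if len(board[c]) < MAX_HEIGHT]
    if depth == 0 or not legal:
        return None, {p: 0 for p in PLAYERS}
    nxt = PLAYERS[(PLAYERS.index(player) + 1) % len(PLAYERS)]
    best = None
    for col in legal:
        after, gained = play(board, col, player)
        _, future = search(after, nxt, depth - 1)  # the recursive step
        line = {p: gained[p] + future[p] for p in PLAYERS}
        if best is None or line[player] > best[1][player]:
            best = (col, line)
    return best
```

With 7 columns the tree grows by a factor of up to 7 per move, so small depths are cheap; whether deeper search actually plays better here is untested.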

LLMs need at least something similar so they can transfer a strategy.

They can find a stick and some metal and build a hammer to hit a nail because they have concepts like tool construction somewhere…

So you must find something similar. If there is none, try to explain your game to a child with Alzheimer’s and give it a set of possibilities…

You can store this over time and finetune a model but I don’t think your expectations are right.

I mean, you know it, I know it, the LLM “knows” it, but can’t DO it. o3 thinks for 2 minutes while spurting out internal strategies for 300 lines only to completely miss the obvious 3 in a row opportunity, and the next opponent’s scoring opportunity.

Even the bot player didn’t require recursion. A very simple strategy is to always try to score and, barring that, always try to block. More advanced would be “does my move create a scoring opportunity for the next player” and so forth, but to be a basic player you simply have to be able to see the scoring opportunities and take them.
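The "score if you can, otherwise block" idea could look something like the sketch below. This is not the actual bot. It assumes the board is a list of columns (each a bottom-up list of player numbers), that columns b, d, f sit half a cell higher, and that a column holds at most 5 pieces; `scores` and `choose_move` are hypothetical names.

```python
AXES = ((0, 2), (1, 1), (1, -1))  # vertical + two diagonals, doubled coordinates
MAX_HEIGHT = 5                    # assumed from the 5-row array dump

def scores(board, col, player):
    """True if `player` dropping into `col` completes three in a row."""
    if len(board[col]) >= MAX_HEIGHT:
        return False
    occ = {(c, 2 * h + c % 2): p
           for c, column in enumerate(board) for h, p in enumerate(column)}
    y = 2 * len(board[col]) + col % 2  # where the new piece would land
    occ[(col, y)] = player
    for dc, dy in AXES:
        # The new piece may be the first, middle or last cell of the run.
        for start in (-2, -1, 0):
            run = [(col + (start + i) * dc, y + (start + i) * dy)
                   for i in range(3)]
            if all(occ.get(cell) == player for cell in run):
                return True
    return False

def choose_move(board, me, next_player):
    """Take a scoring move if one exists, else block the next player's."""
    legal = [c for c in range(len(board)) if len(board[c]) < MAX_HEIGHT]
    for c in legal:
        if scores(board, c, me):
            return c
    for c in legal:
        if scores(board, c, next_player):
            return c  # occupy the cell the next player needs
    return legal[0] if legal else None  # no threat either way: any legal column
```

On the board from the first post, `[[], [3], [2], [2, 1], [3, 3, 1], [3, 3, 2], [2]]`, this picks column c (index 2) for player 1 and column g (index 6) for player 3. Player 2 has no scoring move and blocks player 3 in column g.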

The model has no concept of knowing. It is a bag of tools. And when you have only a hammer and a screwdriver you can still try to paint a wall with them… but… I mean, yes, you can put the hammer into the paint… so the hammer must know how to paint a wall…

That is recursion… Just saying…