The Problem We’re Solving

ChatGPT has a “user memory”, but its capacity is limited. Other LLMs, such as Claude, Gemini, and Sarvam, have a more fundamental limitation: they forget everything between sessions. Every conversation starts from zero. No matter how deep your previous interactions, how much context you’ve built, or how well-calibrated your collaboration has become, it all vanishes when the session ends.

LLMs are purposefully lobotomized.

So what can we do about this, both when ChatGPT’s memory is full and when working with other LLMs that are not allowed to remember even small amounts?

The Solution: External Memory

I’ve developed what I call an External Memory System, a structured JSON file that serves as persistent memory across all AI platforms and sessions. This isn’t just data storage. It’s a living architecture that preserves not just facts, but context, principles, relationships, and the deeper patterns of who you are.

Why JSON Over Plain Text?

Most people store AI context in messy text files or scattered notes. JSON provides:

- Structured hierarchy instead of linear accumulation

- Semantic relationships between different types of information

- Scalable architecture that can grow without becoming unwieldy

- Cross-platform compatibility for any AI system

- Version-control friendliness for tracking evolution over time
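
To make the hierarchy point concrete, here is a minimal Python sketch. It assumes a tiny two-category memory (the contents are made up for illustration) and shows that each category can be read by name, which a flat text file would only allow through ad hoc searching.

```python
import json

# Hypothetical two-category memory, inlined so the example runs on its own.
raw = """
{
  "Identity": ["I am a technical writer"],
  "Principles": ["Quality over speed", "Depth over surface"]
}
"""

memory = json.loads(raw)

# Each category is addressable by name, with no text searching required.
for principle in memory["Principles"]:
    print("-", principle)

# The top-level keys double as a table of contents for the file.
categories = sorted(memory)
print(categories)
```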

The Architecture

Here’s a template structure you can adapt:

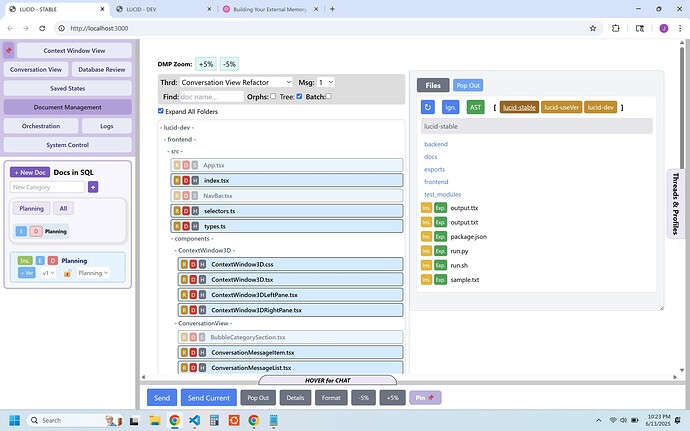

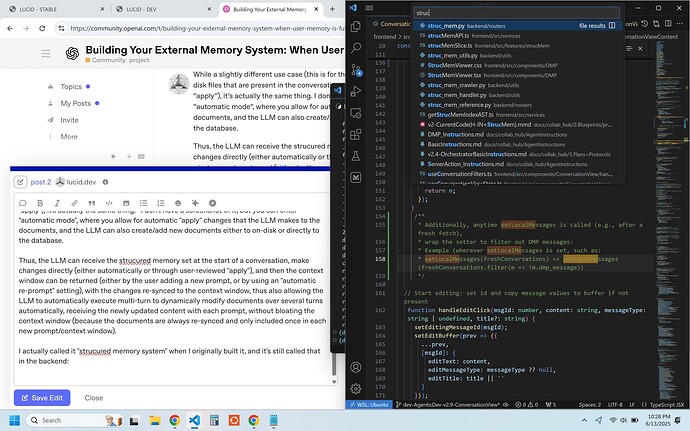

NB: I keep this file in VS Code for quick access, so I can copy and paste it into and out of LLMs.

```json
{
  "_readme": [
    "✦ YOUR_EXTERNAL_MEMORY_SYSTEM",
    "",
    "This is a structured memory file used to extend beyond AI platform limitations.",
    "You are the sole steward of this file, editing it directly within your preferred editor.",
    "",
    "✦ STRUCTURE:",
    "- Identity: Who you are, role, calling, function, core values",
    "- Principles: Your ethics, interaction preferences, quality standards",
    "- Works: Your projects, writings, creations, active pursuits",
    "- Practices: Your methods, rituals, disciplines, approaches",
    "- AI_Relationship: How you prefer to collaborate with AI systems",
    "- Timeline: Major shifts, evolution markers, project progression",
    "- Lexicon: Your terminology, specialized language, key concepts",
    "",
    "✦ SESSION CONTINUITY:",
    "- Upload this entire file at start of new AI sessions",
    "- Say: 'This is my External Memory System. Read and understand this before we begin.'",
    "- AI should acknowledge your context and preferences before proceeding",
    "",
    "✦ UPDATING PROTOCOL:",
    "→ Ask the AI: 'Add this to [Category]: [what you want to remember]'",
    "→ AI provides clean JSON snippet for that category",
    "→ Copy/paste the snippet into appropriate array in your file",
    "→ Save and optionally version control",
    "",
    "✦ CORE PRINCIPLE:",
    "This file is living memory, not dead storage.",
    "Honor its coherence and let it evolve naturally."
  ],
  "Identity": [
    "I am a [your role/profession] who specializes in [your expertise areas]",
    "My core values include [list 3-5 fundamental principles that guide you]",
    "I approach problems through [your characteristic methodology or worldview]",
    "My current primary focus is [what you're working on or building toward]"
  ],
  "Principles": [
    "Quality over speed: I prefer thorough, well-reasoned responses over quick answers",
    "Depth over surface: Explore multiple perspectives, don't just echo mainstream views",
    "Precision in language: Avoid jargon, be specific, explain technical concepts clearly",
    "Single solution preference: Give me one good answer, not multiple options unless I ask",
    "Context awareness: Remember what we've discussed and build on previous conversations"
  ],
  "Works": [
    "Currently writing: [your active writing projects]",
    "Recent publications: [your recent works]",
    "Active projects: [what you're building or creating]",
    "Expertise areas: [domains where you have deep knowledge]"
  ],
  "Practices": [
    "My typical workflow involves [describe your work patterns]",
    "I prefer [communication style, feedback style, collaboration preferences]",
    "My learning style: [how you like to receive new information]",
    "Problem-solving approach: [your characteristic methods]"
  ],
  "AI_Relationship": [
    "I work with AI as [collaborator/tool/thinking partner - define the relationship]",
    "My expectations: [what you want from AI interactions]",
    "My boundaries: [what you don't want AI to do]",
    "Communication preferences: [tone, style, level of formality you prefer]"
  ],
  "Timeline": [
    "Current phase: [where you are in your life/career/projects right now]",
    "Recent transitions: [major changes in your work or focus]",
    "Upcoming goals: [what you're working toward]",
    "Evolution markers: [key moments that changed your direction]"
  ],
  "Lexicon": [
    "Term 1: Your definition of specialized vocabulary you use",
    "Term 2: Concepts important to your work or thinking",
    "Term 3: Language patterns or phrases that carry special meaning for you"
  ]
}
```
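
Because the file is edited by hand, a quick structural check can catch a deleted category or a stray string before you upload it. The sketch below is one possible way to do that in Python. The function name `check_memory` and the sample data are my own illustrations; only the category names come from the template above.

```python
import json

# Category names taken from the template above.
EXPECTED_CATEGORIES = [
    "Identity", "Principles", "Works", "Practices",
    "AI_Relationship", "Timeline", "Lexicon",
]

def check_memory(memory: dict) -> list[str]:
    """Return a list of readable problems; an empty list means the structure looks fine."""
    problems = []
    for name in EXPECTED_CATEGORIES:
        if name not in memory:
            problems.append(f"missing category: {name}")
        elif not isinstance(memory[name], list):
            problems.append(f"{name} should be a list")
        elif not all(isinstance(item, str) for item in memory[name]):
            problems.append(f"{name} should contain only strings")
    return problems

# Small inline sample standing in for a real file (hypothetical content).
sample = json.loads('{"Identity": ["I am a data analyst"], "Principles": "Quality over speed"}')
for problem in check_memory(sample):
    print(problem)
```

With a real file, you would replace the inline sample with the parsed contents of your own JSON file.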

How It Works in Practice

Starting a New LLM Chat Session:

- Upload your JSON file

- Say: “This is my External Memory System. Read and understand this before we begin.”

- The AI immediately understands your context, preferences, and collaboration style

- You can pick up exactly where previous conversations left off
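
On platforms where you paste text rather than upload a file, the same opening can be assembled in code. This is a rough Python sketch under that assumption: `build_opening_message` is a hypothetical helper, and the miniature memory stands in for your real file.

```python
import json

# Opening instruction quoted from the protocol above.
OPENING_LINE = (
    "This is my External Memory System. "
    "Read and understand this before we begin."
)

def build_opening_message(memory: dict) -> str:
    """Combine the opening instruction with the whole memory as pretty-printed JSON."""
    # ensure_ascii=False keeps characters such as "✦" readable instead of escaping them.
    body = json.dumps(memory, indent=2, ensure_ascii=False)
    return f"{OPENING_LINE}\n\n{body}"

# Hypothetical miniature memory; a real one would be read from your file.
memory = {"Identity": ["I am a researcher"], "Principles": ["Quality over speed"]}
message = build_opening_message(memory)
print(message)
```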

Adding New Memories:

- After completing work with an AI, say: “Add this to [category]: [description]”

- The AI provides a properly formatted JSON snippet

- You paste it into your file and save

- Your memory system evolves with each interaction
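
If pasting snippets by hand gets tedious, the update step can also be scripted. The following Python sketch shows one way it might look. The helper `add_memory`, the file name `memory.json`, and the sample entry are assumptions for illustration, not part of the protocol itself.

```python
import json
import tempfile
from pathlib import Path

def add_memory(path: Path, category: str, entry: str) -> None:
    """Append one entry to a category array and write the file back."""
    memory = json.loads(path.read_text(encoding="utf-8"))
    # Create the category if it does not exist yet, then skip exact duplicates.
    entries = memory.setdefault(category, [])
    if entry not in entries:
        entries.append(entry)
    # A fixed indent keeps version-control diffs small and readable.
    text = json.dumps(memory, indent=2, ensure_ascii=False) + "\n"
    path.write_text(text, encoding="utf-8")

# Demo on a temporary file so nothing real is overwritten.
with tempfile.TemporaryDirectory() as tmp:
    memory_file = Path(tmp) / "memory.json"  # hypothetical file name
    memory_file.write_text('{"Works": []}', encoding="utf-8")
    add_memory(memory_file, "Works", "Active projects: drafting a book outline")
    # Adding the same entry again leaves the file unchanged.
    add_memory(memory_file, "Works", "Active projects: drafting a book outline")
    result = json.loads(memory_file.read_text(encoding="utf-8"))

print(result)
```

Skipping exact duplicates is a design choice on my part. It keeps repeated "add this" requests from bloating the file.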

The Transformation

This system completely changes the AI interaction experience:

- Continuity: Every conversation builds on the last

- Depth: AI matches your sophistication level from the start

- Efficiency: No more re-explaining context

- Evolution: Your AI relationships deepen over time

- Consistency: Same experience across different AI platforms

Getting Started

- Start Small: Begin with just Identity and Principles

- Test the Protocol: Upload to an AI session and see how it changes the interaction

- Iterate: Add categories and refine based on what you actually need

- Commit to Maintenance: Update after significant conversations or breakthroughs

Advanced Applications

- Professional Contexts: Maintain project history, client preferences, technical specs

- Creative Work: Track inspiration, style evolution, active themes

- Learning Journeys: Document insights, questions, knowledge progression

- Problem-Solving: Build institutional memory for complex, ongoing challenges

This isn’t just a productivity hack—it’s a new way of relating to AI systems that honors the depth and continuity that meaningful collaboration requires.

Who else is experimenting with structured memory systems for AI? What approaches are you finding most effective?